If you’ve read this blog in the past, you’ll know I view cloud computing as a game changer (Private Clouds are not the Future) and spot instances as a particularly powerful innovation within cloud computing. Over the years, I’ve enumerated many of the advantages of cloud computing over private infrastructure deployments. A particularly powerful advantage comes from noting that, when a large number of non-correlated workloads are combined, overall infrastructure utilization is far higher for most workload combinations. This is partly because the reserve capacity needed to ensure that all workloads can meet peak demand is a tiny fraction of what is required to provide reserve surge capacity for each job individually.

This factor alone is a huge gain, but an even bigger gain can be found by noting that workloads are cyclic and go through roughly sinusoidal capacity peaks and troughs. Some cycles are daily, some weekly, some hourly, and some follow other periods, but nearly all workloads exhibit some normal expansion and contraction over time. This capacity pumping is in addition to handling the unusual surge requirements or increasing demand discussed above.

To successfully run a workload, sufficient hardware must be provisioned to support the peak capacity requirement for that workload. Cost is driven by peak requirements but monetization is driven by the average. The peak to average ratio gives a view into how efficiently the workload can be hosted. Looking at an extreme, a tax preparation service has to provision enough capacity to support its busiest day and yet, in mid-summer, most of this hardware is largely unused. Tax preparation services have a very high peak to average ratio so, necessarily, utilization in a fleet dedicated to this single workload will be very low.

By hosting many diverse workloads in a cloud, the aggregate peak to average ratio trends towards flat. The overall efficiency of hosting the aggregate workload will be far higher than that of any individual workload on private infrastructure. In effect, the workload capacity peak to trough differences get smaller as the number of combined diverse workloads goes up. Since cost tracks the provisioned capacity required at peak but monetization tracks the capacity actually being used, flattening this out can dramatically improve costs by increasing infrastructure utilization.
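The flattening effect is easy to see in a toy simulation. The sketch below is only an illustration under assumed inputs: each workload is modeled as a baseline plus a pure sinusoid, and the periods, swing size, and function names are all made up for the example rather than taken from any real fleet.

```python
import math
import random


def peak_to_average(demand):
    """Ratio of the highest demand sample to the mean demand."""
    return max(demand) / (sum(demand) / len(demand))


def sinusoidal_workload(period_hours, phase, hours, base=1.0, swing=0.8):
    """Hourly demand for one workload: a baseline plus a sinusoidal swing.

    base and swing are hypothetical values chosen for illustration.
    """
    return [
        base + swing * math.sin(2 * math.pi * t / period_hours + phase)
        for t in range(hours)
    ]


def aggregate_demand(num_workloads, hours=24 * 7 * 4, seed=0):
    """Sum the demand of many workloads with random periods and phases."""
    rng = random.Random(seed)
    total = [0.0] * hours
    for _ in range(num_workloads):
        # Assumed cycle lengths: half-day, daily, and weekly.
        period = rng.choice([12, 24, 24 * 7])
        phase = rng.uniform(0.0, 2.0 * math.pi)
        workload = sinusoidal_workload(period, phase, hours)
        total = [a + b for a, b in zip(total, workload)]
    return total


if __name__ == "__main__":
    for n in (1, 10, 100, 1000):
        ratio = peak_to_average(aggregate_demand(n))
        print(f"{n:>5} workloads: peak/average = {ratio:.2f}")
```

A single workload in this model has a peak to average ratio near 1.8, while the sum of hundreds of uncorrelated ones sits close to 1. Real workloads are correlated to some degree (many peak during business hours), so the real-world flattening is less complete than this idealized model suggests.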

This is one of the most important advantages of cloud computing. But, it’s still not as much as can be done. Here’s the problem. Even with very large populations of diverse workloads, there is still some capacity that is only rarely used at peak. And, even in the limit with an infinitely large aggregated workload where the peak to average ratio gets very near flat, there still must be some reserved capacity such that surprise, unexpected capacity increases, new customers, or new applications can be satisfied. We can minimize the pool of rarely used hardware but we can’t eliminate it.

What we have here is yet another cloud computing opportunity. Why not sell the unused reserve capacity on the spot market? This is exactly what AWS is doing with Amazon EC2 Spot Instances. From the Spot Instance detail page:

Spot Instances enable you to bid for unused Amazon EC2 capacity. Instances are charged the Spot Price set by Amazon EC2, which fluctuates periodically depending on the supply of and demand for Spot Instance capacity. To use Spot Instances, you place a Spot Instance request, specifying the instance type, the Availability Zone desired, the number of Spot Instances you want to run, and the maximum price you are willing to pay per instance hour. To determine how that maximum price compares to past Spot Prices, the Spot Price history for the past 90 days is available via the Amazon EC2 API and the AWS Management Console. If your maximum price bid exceeds the current Spot Price, your request is fulfilled and your instances will run until either you choose to terminate them or the Spot Price increases above your maximum price (whichever is sooner).

It’s important to note two points:

1. You will often pay less per hour than your maximum bid price. The Spot Price is adjusted periodically as requests come in and available supply changes. Everyone pays that same Spot Price for that period regardless of whether their maximum bid price was higher. You will never pay more than your maximum bid price per hour.

2. If you’re running Spot Instances and your maximum price no longer exceeds the current Spot Price, your instances will be terminated. This means that you will want to make sure that your workloads and applications are flexible enough to take advantage of this opportunistic capacity. It also means that if it’s important for you to run Spot Instances uninterrupted for a period of time, it’s advisable to submit a higher maximum bid price, especially since you often won’t pay that maximum bid price.

Spot Instances perform exactly like other Amazon EC2 instances while running, and like other Amazon EC2 instances, Spot Instances can be terminated when you no longer need them. If you terminate your instance, you will pay for any partial hour (as you do for On-Demand or Reserved Instances). However, if the Spot Price goes above your maximum price and your instance is terminated by Amazon EC2, you will not be charged for any partial hour of usage.
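The pricing rules quoted above can be sketched in a few lines. This is a simplified model, not the EC2 billing implementation: it assumes the Spot Price is constant within each hour, treats a Spot Price exactly equal to the bid as still running (the quoted text is ambiguous on ties), and uses invented prices. The function name `run_spot_request` is hypothetical.

```python
def run_spot_request(max_bid, hourly_spot_prices):
    """Simulate one Spot Instance against a series of hourly Spot Prices.

    Returns (hours_run, total_cost, interrupted).

    Assumptions for this sketch:
      * the Spot Price is fixed for each whole hour;
      * the instance is interrupted at the start of the first hour whose
        Spot Price is above max_bid, so no partial hour is ever billed;
      * a Spot Price equal to max_bid keeps the instance running.
    """
    hours_run = 0
    total_cost = 0.0
    for spot_price in hourly_spot_prices:
        if spot_price > max_bid:
            # Terminated by the provider: nothing is charged for this hour.
            return hours_run, total_cost, True
        # The charge is the Spot Price for the hour, never the bid itself.
        total_cost += spot_price
        hours_run += 1
    return hours_run, total_cost, False


if __name__ == "__main__":
    prices = [0.25, 0.25, 0.75, 0.25]  # hypothetical $/hour values
    print(run_spot_request(0.50, prices))  # low bid: interrupted in hour 3
    print(run_spot_request(1.00, prices))  # high bid: runs all four hours
```

The second call shows the point made in the quoted text: a higher maximum bid buys uninterrupted running time, and the bidder still pays only the Spot Price for each hour.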

Spot instances effectively harvest unused infrastructure capacity. The servers, data center space, and network capacity are all sunk costs. Any workload worth more than the marginal cost of power is profitable to run. This is a great deal for customers because it allows non-urgent workloads to be run at very low cost. Spot Instances are also great for the cloud provider because they further drive up utilization, with the only additional cost being the cost of power consumed by the spot workloads. From Overall Data Center Costs, you’ll recall that the cost of power is a small portion of overall infrastructure expense.
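To make the marginal cost argument concrete, here is a rough sketch of the break-even check. Every number in it is a placeholder I made up for illustration (server wattages, electricity price, spot price), and the function names are hypothetical. It deliberately ignores everything except the extra power drawn by a loaded server over an idle one.

```python
def marginal_power_cost_per_hour(idle_watts, loaded_watts, dollars_per_kwh):
    """Extra electricity cost of running a server loaded rather than idle."""
    extra_kilowatts = (loaded_watts - idle_watts) / 1000.0
    return extra_kilowatts * dollars_per_kwh


def is_profitable(spot_price_per_hour, idle_watts, loaded_watts, dollars_per_kwh):
    """True when the spot price covers the marginal cost of power."""
    floor = marginal_power_cost_per_hour(idle_watts, loaded_watts, dollars_per_kwh)
    return spot_price_per_hour > floor


if __name__ == "__main__":
    # Hypothetical inputs: 100 W idle, 300 W loaded, $0.10 per kWh.
    floor = marginal_power_cost_per_hour(100, 300, 0.10)
    print(f"marginal power cost: ${floor:.3f}/hour")
    print("profitable at $0.25/hour:", is_profitable(0.25, 100, 300, 0.10))
```

With sunk costs excluded, the break-even price is just the incremental power bill, which is why even deeply discounted spot capacity can be worth selling.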

I’m particularly excited about Spot instances because, while customers get incredible value, the feature is also a profitable one to offer. It’s perhaps the purest win/win in cloud computing.

Spot Instances only work in a large market with many diverse customers. This is a lesson learned from the public financial markets. Without a broad number of buyers and sellers brought together, the market can’t operate efficiently. Spot requires a large customer base to operate effectively and, as the customer base grows, it continues to gain efficiency with increased scale.

I recently came across a blog posting that ties these ideas together: New CycleCloud HPC Cluster Is a Triple Threat: 30000 cores, $1279/Hour, & Grill monitoring GUI for Chef. What’s described in this blog posting is a mammoth computational cluster assembled in the AWS cloud. The speeds and feeds for the clusters:

· C1.xlarge instances: 3,809

· Cores: 30,472

· Memory: 26.7 TB

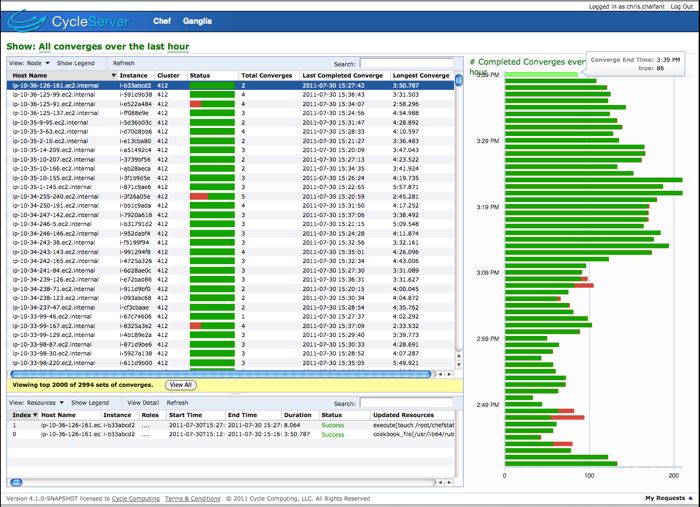

The workload was molecular modeling. The cluster was managed using the Condor job scheduler and deployment was automated using the increasingly popular Opscode Chef. Monitoring was done using a package that Cycle Computing wrote that provides a nice graphical interface to this large cluster: Grill for CycleServer (very nice).

The cluster came to life without capital planning. There was no wait for hardware arrival, and no datacenter space needed to be built or bought. The cluster ran 154,116 Condor jobs with 95,078 compute hours of work and, when the project was done, was torn down without a trace.

What is truly eye opening for me in this example is that it’s a 30,000 core cluster for $1,279/hour. The cloud and Spot instances change everything. $1,279/hour for 30k cores. Amazing.
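Breaking the headline number down per instance and per core makes the value clearer. The short calculation below uses only the figures quoted above (3,809 instances, 30,472 cores, $1,279/hour); the variable names are mine.

```python
# Figures quoted from the Cycle Computing cluster description.
instances = 3809
cores = 30472
cluster_price_per_hour = 1279.0  # dollars

cores_per_instance = cores / instances
cost_per_instance_hour = cluster_price_per_hour / instances
cost_per_core_hour = cluster_price_per_hour / cores

print(f"cores per instance:     {cores_per_instance:.0f}")
print(f"cost per instance-hour: ${cost_per_instance_hour:.3f}")
print(f"cost per core-hour:     ${cost_per_core_hour:.4f}")
```

That works out to 8 cores per instance, roughly $0.34 per instance-hour, and about 4.2 cents per core-hour.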

Thanks to Deepak Singh for sending the CycleServer example my way.

–jrh

b: http://blog.mvdirona.com / http://perspectives.mvdirona.com

It may be the case that the market isn’t yet operating at full efficiency or that there may be a price floor currently in place. What we do know with certainty is that spot instances are already a very good value. I’m blown away by the data point in the Cycle Computing article: using spot instances they were able to bring together a 30,000 core cluster for only $1,279/hour.

Spot pricing of cloud computing is, in my view, an amazingly powerful idea. It’s such an obvious win for customers and providers that I’m sure it’ll happen in large volume. If any one provider chooses to artificially constrain or limit the market, someone else will do it. Great ideas win. This is a good one and, in its current form at AWS, it is substantially reducing the cost of computing.

–jrh

Hi James,

The spot instances are not a market, and certainly not an efficient market, because there is only one vendor there (unlike spotcloud, which is a market).

In our paper, we do not claim that spot instances are always cheaper than the reserved instances or the on-demand instances. Actually, there were several periods in the histories in which spot instances cost _more_ than the respective on-demand instances.

Regarding the artificial ceiling – it is not a ceiling for all spot prices, but rather for the artificial element – the reserve price (not to be confused with reserved instance), which is the hidden minimal price which Amazon is willing to accept at any given time. So the floor and ceiling is the range in which the minimal secret price moves.

Regarding stabilization – spot instances are already at stable prices, until Amazon decides otherwise. In his CCGRID’11 paper, "Debunking Real-Time Pricing in Cloud Computing", Sewook Wee analyzes the same EC2 price traces we analyzed, and shows that the trend is exactly zero.

Greg – I agree with you regarding the excessive supply. This is why 98% of the time, the prices we see are the artificial prices (the hidden minimal prices).

Orna

Your argument makes sense to me Greg. I’ll dig deeper.

–jrh

Thanks, James, I was assuming that, at the moment, regardless of price, supply (idle instances) vastly exceeds demand (people wanting spot instances). That is, Amazon has 100k+ idle servers at non-peak times and, at any price, the combined requests for spot instances is nowhere near 100k yet just because there aren’t that many people on Amazon spot instances yet waiting around with big jobs ready to go. So, if supply vastly exceeds demand, I’d think prices should drop to the minimum Amazon is willing to accept, which should be a lot lower than 50% of the normal pricing. Maybe I’ve underestimated demand?

On spot price fluctuations, Greg, it’s a market and I would expect the market to be working fairly well already and to get more efficient as it continues to grow. It’s hard to know where the price "should" be but I agree with your assessment that it certainly could be low and still be a win for both the buyers and sellers. Where it ends up should just be supply and demand finding the right price for that period. The estimates I’m seeing have the spot price typically in the range of 50% to 66% of on-demand pricing. I’m not sure where the market would stabilize at scale. Your thinking on these things tends to be clear. Where do you think the market will stabilize as a percentage of on-demand pricing?

–jrh

Hi, James. This is cheap, but shouldn’t it be even cheaper? Shouldn’t the cost of spot instances be marginally above the variable cost to Amazon, that is the additional cost of a loaded server over an unloaded server, and so near the price of the additional electricity consumed for a loaded server versus idle server? Shouldn’t spot instances be very, very cheap, at least until demand for this idle capacity exceeds supply and the price gets bid up (which should not be the case yet)?

The paper analyzes AWS spot pricing and concludes that there is a floor below which instances will not be sold and a ceiling beyond which they will not be sold. And, within this range of "allowable" spot prices, one component of the pricing is the expected supply and demand market dynamics but another component is random. Essentially it reports that spot pricing is always cheaper than reserved instances and speculates that there may be a random component in the pricing.

The researchers report that spot is always priced lower than on-demand instances but say there is no assurance that the market will track supply and demand, and that the price isn’t allowed unbounded swings upward or downward. I can’t come up with a reason why a ceiling in the allowed price would make sense for any participant in the market. If customers want to bid more than on-demand, it would seem to be crazy not to allow them to pay more. There are few conditions where this might make sense, so it doesn’t surprise me that it never happened in the data the researchers were studying. On the other side, there actually is a price floor below which it does not make sense to offer the instance in the spot market. A sensible floor would be the marginal cost of power. Renting an instance for less than the cost of the power would lose money, so a floor might make sense, although I’m not sure I would bother to implement the floor if I were running the market.

I can’t come up with any circumstances when adding a random element to the pricing would make sense or in any way improve the market. It seems unlikely that this would have been implemented by the Spot Instance team.

In thinking through market dynamics and costs, I understand why there might be a price floor but can’t see any value in there being a ceiling price or a random element in the pricing model. I can’t see the financial upside of manipulating the market in this manner so I doubt it is happening. We’re speculating on those factors, but where there is broad agreement, both in the paper and among the users I’ve spoken with, is that the spot market offers excellent value. It’s one of my favorite AWS features and I love the Cycle Computing example above: 30,000 cores for $1,279/hour.

Thanks for pointing out the paper Ian.

Hi James,

Concerning spot pricing, would you be interested in addressing the findings of this paper, showing that spot prices are not completely market driven as often assumed?