IEEE Spectrum recently published a special report titled 50 Years of Moore’s Law. Spectrum, unlike many purely academic publications, covers a broad set of topics in a way accessible to someone working outside the domain but not so watered down as to become uninteresting. As I read through this set of articles on Moore’s law, I found myself thinking I should blog more often about what Spectrum publishes. It’s really an excellent publication.

Here are some examples of blog postings I’ve done in the past based upon Spectrum coverage:

- Randy Katz on High Scale Data Centers

- Massive Multiplayer Game Automation

- Observations on Errors, Corrections, & Trust of Dependent Systems

- Why Renewable Energy (Alone) Won’t Fully Solve the Problem

- What Went Wrong at Fukushima Dai-1

Moore’s law is the foundation upon which the semiconductor revolution has been built. It created the era of the personal computer, made the mobile revolution possible, is enabling the internet of things, and is the bedrock behind the incredible speed of innovation in cloud computing. It’s a rare consumer device that doesn’t include some semiconductor components. Moore’s legacy surrounds us.

Moore’s law preceded the first microprocessor, but it’s the increasing capability and falling price of microprocessors that showcase its impact most obviously. When the world’s first microprocessor was announced in 1971, I was in grade 6 listening to Who’s Next. I figured I was pretty plugged in, but I hadn’t a clue that microprocessors, and semiconductors in general, driven by Moore’s law, would be the basis for most of my future employment. That remains true today.

I link to each of the 8 articles in the series below and offer a quick summary or excerpts from each:

Special Report: 50 Years Of Moore’s Law:

Fifty years ago this month, Gordon Moore forecast a bright future for electronics. His ideas were later distilled into a single organizing principle—Moore’s Law—that has driven technology forward at a staggering clip.

The Multiple Lives of Moore’s Law:

A half century ago, a young engineer named Gordon E. Moore took a look at his fledgling industry and predicted big things to come in the decade ahead. In a four-page article in the trade magazine Electronics, he foresaw a future with home computers, mobile phones, and automatic control systems for cars. I would argue that nothing about Moore’s Law was inevitable. Instead, it’s a testament to hard work, human ingenuity, and the incentives of a free market. Moore opened his 1965 Electronics Magazine paper with a bold statement: “The future of integrated electronics is the future of electronics itself.” That claim seems self-evident today, but at the time it was controversial.

In his 1965 paper, as evidence of the integrated circuit’s bright future, he plotted five points over time, beginning with Fairchild’s first planar transistor and followed by a series of the company’s integrated circuit offerings. He used a semilogarithmic plot, in which one axis is logarithmic and the other linear and an exponential function will appear as a straight line. The line he drew through the points was indeed more or less straight, with a slope that corresponded to a doubling of the number of components on an integrated circuit every year.
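To make the semilog argument concrete, here is a minimal Python sketch: taking log2 of the component counts turns steady doubling into a straight line, and the slope of a least-squares fit gives doublings per year. The data points are illustrative synthetic values (exact powers of two), not Moore’s published measurements, and `doubling_time_years` is a hypothetical helper name.

```python
import math

def doubling_time_years(years, counts):
    """Fit log2(count) = a + b*year by least squares and return 1/b.

    On a semilog plot an exponential is a straight line, so the fitted
    slope b is the number of doublings per year.
    """
    logs = [math.log2(c) for c in counts]
    n = len(years)
    mean_x = sum(years) / n
    mean_y = sum(logs) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(years, logs))
    sxx = sum((x - mean_x) ** 2 for x in years)
    slope = sxy / sxx  # doublings per year
    return 1.0 / slope

# Illustrative (synthetic) points whose counts double every year.
years = [1959, 1962, 1963, 1964, 1965]
counts = [2 ** (y - 1959) for y in years]  # 1, 8, 16, 32, 64
print(round(doubling_time_years(years, counts), 3))
```

A fitted doubling time of about one year corresponds to the slope Moore drew through his five points.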

From this small trend line, he made a daring extrapolation: This doubling would continue for 10 years. By 1975, he predicted, we’d see the number of components on an integrated circuit go from about 64 to 65,000. He got it very nearly right.
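The extrapolation itself is simple compounding. A minimal sketch, assuming a clean doubling once per year starting from about 64 components in 1965 (`project_components` is a hypothetical name, not anything from Moore’s paper):

```python
def project_components(start: float, years: float, doubling_period_years: float = 1.0) -> float:
    """Compound growth: the count doubles once every `doubling_period_years`."""
    return start * 2 ** (years / doubling_period_years)

# Ten annual doublings starting from ~64 components (1965 -> 1975).
print(project_components(64, 10))  # 65536.0
```

Ten doublings multiply the count by 2^10 = 1,024, taking 64 to 65,536, which is the “about 65,000” in Moore’s forecast.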

In an analysis for the 1975 IEEE International Electron Devices Meeting, Moore started by tackling the question of how the doubling of components actually happened. He argued that three factors contributed to the trend: decreasing component size, increasing chip area, and “device cleverness,” which referred to how much engineers could reduce the unused area between transistors.

Eventually even microprocessors stopped scaling up as fast as manufacturing technology would permit. Manufacturing now allows us to economically place more than 10 billion transistors on a logic chip. But only a few of today’s chips come anywhere close to that total, in large part because our chip designs generally haven’t been able to keep up.

For the last decade or so, Moore’s Law has been more about cost than performance; we make transistors smaller in order to make them cheaper. That isn’t to say that today’s microprocessors are no better than those of 5 or 10 years ago. There have been design improvements. But much of the performance gains have come from the integration of multiple cores enabled by cheaper transistors.

Going forward, innovations in semiconductors will continue, but they won’t systematically lower transistor costs. Instead, progress will be defined by new forms of integration: gathering together disparate capabilities on a single chip to lower the system cost.

The Death of Moore’s Law Will Spur Innovation: The author asserts first that Moore’s law is slowing and then goes on to look at what might follow from this observation. Starting with the slowing:

Lots of analysts and commentators have warned recently that the era of exponential gains in microelectronics is coming to an end. But I do not really need to hang my argument on their forecasts. The reduction in size of electronic components, transistors in particular, has indisputably brought with it an increase in leakage currents and waste heat, which in turn has slowed the steady progression in digital clock speeds in recent years. Consider, for example, the clock speeds of the various Intel CPUs at the time of their introduction. After making dramatic gains, those speeds essentially stopped increasing about a decade ago.

Since then, CPU makers have been using multicore technology to boost performance, despite the difficulty of implementing such a strategy. [See “The Trouble With Multicore,” by David Patterson, IEEE Spectrum, July 2010.] But engineers didn’t have much of a choice: Key physical limits prevented clock speeds from getting any faster, so the only way to use the increasing number of transistors Moore’s Law made available was to create more cores.

Transistor density continues to increase exponentially, as Moore predicted, but the rate is decelerating. In 1990, transistor counts were doubling every 18 months; today, that happens every 24 months or more. Soon, transistor density improvements will slow to a pace of 36 months per generation, and eventually they will reach an effective standstill.
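The difference between these cadences compounds quickly. A minimal sketch comparing the density gain over a ten-year window at the 18, 24, and 36 month doubling periods quoted above; the ten-year window and the `growth_factor` name are assumptions chosen for illustration:

```python
def growth_factor(horizon_months: float, doubling_months: float) -> float:
    """Multiplicative density gain after `horizon_months` at a fixed doubling cadence."""
    return 2 ** (horizon_months / doubling_months)

HORIZON_MONTHS = 120  # hypothetical 10-year window
for cadence in (18, 24, 36):
    gain = growth_factor(HORIZON_MONTHS, cadence)
    print(f"doubling every {cadence} months -> {gain:.1f}x in 10 years")
```

Stretching the cadence from 18 to 36 months cuts the decade’s gain from roughly 100x to roughly 10x.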

The author goes on to argue that the slowing of Moore’s law will lead to: 1) longer hardware design lifetimes, which allow lower-volume devices to succeed economically and allow smaller companies to succeed in the hardware market, 2) greater standardization of hardware platforms, 3) FPGAs performing “well enough” to replace hard-wired CPUs for many tasks, and 4) the emergence of an open source hardware ecosystem. I personally see the last of these happening too, but I argue it will happen because of Moore’s law and the device innovation it allows rather than because of its slowing. I also see FPGAs replacing hot software kernels because hardware is so much more power and cost efficient than pure software on general purpose processors (for repetitive tasks that don’t change). Again, I think this will happen because of Moore’s law rather than because it is fading. On the first two predictions (longer design lifetimes and greater standardization) I’m not convinced. I see a continuing, if not accelerating, pace of hardware innovation looking forward.

Moore’s Law Might be Slowing Down, but not Energy Efficiency: Authors Jonathan Koomey and Samuel Naffziger argue that although Moore’s law may be slowing down, gains in work done per watt will continue: “Here’s what we do know. The last 15 years have seen a big falloff in how much performance improves with each new generation of cutting-edge chips. So is the end nigh? Not exactly, because even though the fundamental physics is working against us, it appears we’ll have a reprieve when it comes to energy efficiency.”

Encouragingly, typical-use efficiency seems to be going strong, based on tests performed since 2008 on Advanced Micro Devices’ chip line. Through 2020, by our calculations for an AMD initiative, typical-use efficiency will double every 1.5 years or so, putting it back to the same rate seen during the heyday of Moore’s Law. These gains come from aggressive improvements to circuit design, component integration, and software, as well as power-management schemes that put unused circuits into low-power states whenever possible. The integration of specialized accelerators, such as graphics processing units and signal processors that can perform certain computations more efficiently, has also helped keep average power consumption down.
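A doubling period can be restated as an annual improvement rate, which is often easier to compare. A minimal sketch, assuming smooth exponential improvement; the 1.5-year figure is from the excerpt, while the other periods and the `annual_improvement` name are illustrative:

```python
def annual_improvement(doubling_years: float) -> float:
    """Fractional improvement per year implied by a given doubling period."""
    return 2 ** (1 / doubling_years) - 1

for period in (1.5, 2.0, 3.0):
    print(f"doubling every {period} years ~ {annual_improvement(period):.1%} per year")
```

A 1.5-year doubling works out to roughly 59% better typical-use efficiency each year, versus about 41% for a 2-year doubling.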

Overall, an interesting article and one I agree with. I occasionally see analysis that overplays the cost of power, but there is no doubt these improvements are important, both economically and environmentally.

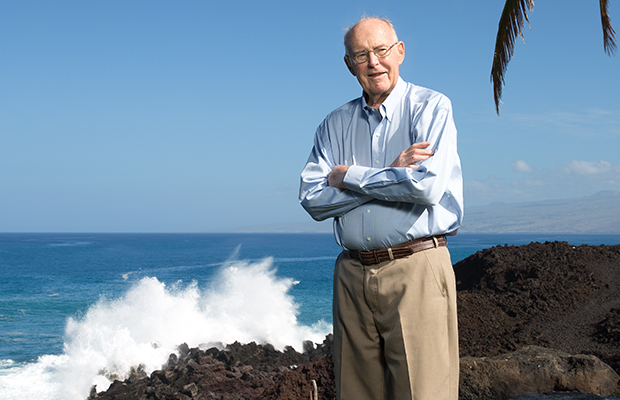

Gordon Moore: The Man Whose Name Means Progress: A 2015 interview with Gordon Moore by Rachel Courtland.

Moore’s Curse: Unjustified Technical Expectations: The article argues that Moore’s law sometimes creates expectations out of line with reality. The author writes: “the [Moore’s Law] revolution has been both a blessing and a curse, for it has had the unintended effect of raising expectations for technical progress. We are assured that rapid progress will soon bring self-driving electric cars, hypersonic airplanes, individually tailored cancer cures, and instant three-dimensional printing of hearts and kidneys. We are even told it will pave the world’s transition from fossil fuels to renewable energies. But the doubling time for transistor density is no guide to technical progress generally. Modern life depends on many processes that improve rather slowly, not least the production of food and energy and the transportation of people and goods.”

I largely agree. I see Moore’s law doubling invoked across all aspects of computing when, sadly, it simply doesn’t apply. We all expect cloud computing prices to drop at a Moore’s law pace and, even though this can’t continue over the long haul, ironically, it has so far. The problem is that many aspects of a modern data center are not on a Moore’s law improvement pace. Power distribution and cooling are a material percentage of the cost of cloud computing resources, and this equipment shows very little Moore’s law tendency.

The obvious answer is to eliminate any components not on a Moore’s law pace, and the industry is doing some of that in data center cooling systems. Another approach is to get the component onto a Moore’s law curve. Modern switch gear is still mostly composed of large mechanical components, but at least it is now all microprocessor controlled. It still isn’t on a Moore’s law curve, but the control systems now measure more conditions faster, gather better data and telemetry, and respond to change more quickly. There is a hint of Moore around these components. But, generally, when you look at the overall costs of the entire hardware, software, and administrative stack, much of computing is not on a Moore’s law curve. What’s interesting to me is that there is enough innovation going on in the cloud computing industry that, even though it’s provably not entirely Moore driven, the industry is delivering a near Moore’s law pace of price reductions.
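Why total cost can’t track Moore’s law indefinitely when only part of it rides the curve is essentially Amdahl’s law applied to cost. A minimal sketch, assuming a hypothetical split in which some fraction of total cost halves every two years while the remainder (think power distribution and cooling) stays flat; the fractions and the `blended_cost` name are illustrative, not measured:

```python
def blended_cost(years: float, moore_fraction: float, halving_years: float = 2.0) -> float:
    """Relative total cost after `years`, starting from 1.0.

    Only `moore_fraction` of the cost falls on a Moore-like curve;
    the remaining share stays flat.
    """
    return moore_fraction * 0.5 ** (years / halving_years) + (1 - moore_fraction)

for frac in (1.0, 0.8, 0.6):  # hypothetical shares of cost on a Moore curve
    costs = [blended_cost(t, frac) for t in (0, 4, 8, 12)]
    print(frac, [round(c, 3) for c in costs])
```

With any flat share, total cost can never fall below `1 - moore_fraction`, which is why sustaining near Moore’s law price reductions also requires innovation in the parts of the stack that aren’t semiconductors.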

Transistor Production has Reached Astronomical Scales: A graphic with a short text that really drives home the point in a powerful way: “In 2014, semiconductor production facilities made some 250 billion billion (250 x 10^18) transistors. This was, literally, production on an astronomical scale. Every second of that year, on average, 8 trillion transistors were produced. That figure is about 25 times the number of stars in the Milky Way and some 75 times the number of galaxies in the known universe.”
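The per-second figure and the astronomical comparisons follow from simple division. A minimal sketch that checks the arithmetic, assuming a 365-day year; the star and galaxy counts are back-calculated from the article’s own ratios rather than taken from an astronomical source:

```python
SECONDS_PER_YEAR = 365 * 24 * 60 * 60  # 31,536,000 (non-leap year assumed)
TRANSISTORS_2014 = 250e18              # 250 x 10^18, from the article

per_second = TRANSISTORS_2014 / SECONDS_PER_YEAR
implied_milky_way_stars = per_second / 25  # "about 25 times the number of stars"
implied_galaxies = per_second / 75         # "some 75 times the number of galaxies"

print(f"transistors per second: {per_second:.2e}")
print(f"implied stars in the Milky Way: {implied_milky_way_stars:.1e}")
print(f"implied galaxies in the known universe: {implied_galaxies:.1e}")
```

That comes to roughly 7.9 trillion transistors per second, consistent with the article’s 8 trillion, and implies about 320 billion stars and about 100 billion galaxies.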

The full articles referenced above:

- Articles from the IEEE Spectrum 50 years of Moore’s Law special report

- Special Report: 50 years of Moore’s Law

- The Multiple Lives of Moore’s Law

- The Death of Moore’s Law Will Spur Innovation

- Moore’s Law Might be Slowing Down, but not Energy Efficiency

- Gordon Moore: The Man Whose Name Means Progress

- Moore’s Curse: Unjustified Technical Expectations

- Transistor Production has Reached Astronomical Scales

Not directly related, but worth reading nonetheless, is the Patterson article cited in the excerpt from The Death of Moore’s Law Will Spur Innovation above: The Trouble with Multicore.

I worry about what will happen after 1nm is reached for production chips. But then again, capitalism is key. Given money as an incentive, people always find a way to push the envelope.

Yup, you nailed it. The “end” is always near in our industry, and yet we somehow keep finding ways to push the limits further into the future. Sometimes the advancements come slower, often they get more expensive, and occasionally major innovation is required, but forecasts of hard physical limits in the near future that can’t be circumvented or innovated around are typically off the mark.

I would expect resonant patterns to emerge and this to be clear in the thermals. Not a problem in 5 years but what about 20 years? There’s an active push to separate application state and processing in cloud workloads. Processing demand becomes “more mobile” and can be moved from “hot” to “cold” spots in the datacenter without interruption. There’s a catch though. Network capex rises relative to compute and memory capex. Opex rises relative to compute density (cooling no longer an incremental challenge). All highly speculative but extremely fun to think about.

You could be correct in your prediction that cooling costs will start rising non-linearly relative to other costs in a few years. But, for the last 5 years, cooling costs have been falling fast relative to the cost of servers, power distribution, and power due to innovations in mechanical systems, hot aisle isolation, increased use of air-side economization, and better server designs. Eventually, though, cooling costs will start climbing again relative to other costs as density continues to climb. I expect that the industry will eventually move back to direct liquid cooling, and it’s fairly likely that this will cost more than current mechanical designs.

I also agree that workloads will become more mobile and that thermal conditions may eventually influence workload placement. However, since cooling is less expensive than the other resources in the datacenter, you would never want to under-utilize server resources to save on cooling. Consequently, any well-designed system has enough cooling to run all of its servers.

Knowledgeable operators can over-subscribe power and cooling based upon workload experience, but you would never want to be unable to deploy a workload on an available server because of cooling restrictions. The goal in any well-engineered system is to be constrained by the most valuable resource (servers).
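The principle that a well-engineered facility should be limited by servers rather than by power or cooling can be expressed as a minimum over constraints. A minimal sketch; every name and number here (`Facility`, `deployable_servers`, the site figures) is hypothetical and chosen only to illustrate the binding-constraint idea:

```python
from dataclasses import dataclass

@dataclass
class Facility:
    servers: int       # servers physically available
    power_kw: float    # provisioned power budget
    cooling_kw: float  # heat the cooling plant can remove
    server_kw: float   # expected draw per server (may reflect oversubscription)

def deployable_servers(f: Facility) -> tuple[int, str]:
    """Return how many servers can run and which resource is the binding constraint."""
    limits = {
        "servers": f.servers,
        "power": int(f.power_kw // f.server_kw),
        "cooling": int(f.cooling_kw // f.server_kw),
    }
    binding = min(limits, key=limits.get)
    return limits[binding], binding

# Hypothetical site: power and cooling sized with headroom so servers remain the limit.
site = Facility(servers=1000, power_kw=300.0, cooling_kw=320.0, server_kw=0.25)
print(deployable_servers(site))
```

Using an expected rather than nameplate draw for `server_kw` is one way to model the workload-informed oversubscription described above; if power or cooling ever becomes the minimum, servers sit idle, which is the outcome the design should avoid.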

It may be possible to cool more efficiently when less than all the servers are utilized by spreading workloads out more. It’s an idea worth thinking through further. Thanks for the comment David.

“Moore-Andreessen Disruptive Creation Loop” – Doesn’t it all just boil down to “the market” for electronics? Supply simultaneously predicting, influencing and matching demand every 18 months? Moore’s quota rippling through an entire industry?

What’s a practical rate of expansion from here? Whose signature is on the quota? Will it lead an entire industry forward? Science, design, manufacturing and engineering is slowly turning to supply (demand) the “macro-electronic machine” and “the industry is delivery a near Moore’s law pace of price reductions.” The interpretation of Moore’s law I prefer is much simpler: Keep up the good work!

I agree with you David. Each industry that starts depending upon semiconductors and software immediately starts seeing far faster rates of innovation, along with both better products and lower pricing. It’s a wonderful thing, and it’s sweeping an ever-widening swath of industries: appliances, cars, cameras, mobile devices, and all forms of sensors and actuators.

…as long as supply isn’t interrupted in each cycle. Kind of makes my head spin but: seems to all come down to the thermal characteristics of the datacenter. What does a “thermal mapping” (visual aid: http://scale.engin.brown.edu/tools/) of a datacenter look like? How is this changing? Is there an efficient self-organization? Or, is an even uglier “memory wall” (network wall) emerging? Would be interesting to see re:Invent presentation begin with: “AWS has 2^x thermal couplers and here’s what the data is telling us…”

Thermals aren’t a big concern and don’t look like they will represent an absolute barrier to progress in the near future. The industry still mostly runs on air cooling because it’s easy and it works when the cooling problem is an easy one. I expect direct liquid cooling to return as the thermal problem gets more difficult, and that is already happening today in extreme cases. 3D die stacking will yield much more challenging thermal problems but, at least for me, I don’t see any fundamental barriers over the next 5 years. Cooling will just keep getting incrementally more difficult and the industry will keep solving the problem.

What a thought provoking post. Thank you. You may find this interesting: http://arunsplace.com/2014/09/22/the-moore-andreessen-feedback-loop/.

Thanks for the pointer to the “Moore-Andreessen Disruptive Creation Loop” article David.