.jpg)

In The Case For Low-Power Servers I reviewed the Cooperative, Expendable, Micro-slice Servers project. CEMS is a project I had been doing in my spare time in investigating using low-power, low costs servers running internet-scale workloads. The core premise of the CEMS project: 1) servers are out-of-balance, 2) client and embedded volumes, and 3) performance is the wrong metric.

Out-of-Balance Servers: The key point is that CPU bandwidth is increasing far faster than memory bandwidth (see page 7 of Internet-Scale Service Efficiency). CPU performance continues to improve at roughly historic rates. Core count increases have replaced the previous reliance on frequency increase but performance improvements continue unabated. As a consequence, CPU performance is outstripping memory bandwidth with the result that more and more cycles are spent in pipeline stalls. There are two broad approaches to this problem: 1) improve the memory subsystem, and 2) reduce CPU performance. The former drives up design cost and consumes more power. The later is a counter-intuitive approach. Just run the CPU slower.

The CEMS project investigates using low-cost, low-power client and embedded CPUs to produce better price-performing servers. The core observation is that internet-scale workloads are partitioned over 10s to 1000s of servers. Running more slightly slower servers is an option if it produces better price performance. Raw, single-server performance is neither needed nor the most cost effective goal

Client and Embedded Volumes: It’s always been a reality of the server world that volumes are relatively low. Clients and embedded devices are sold at an over 10^9 annual clip. Volume drives down costs. Servers leveraging client and embedded volumes can be MUCH less expensive and still support the workload.

Performance is the wrong metric: Most servers are sold on the basis of performance but I’ve long argued that single dimensional metrics like raw performance are the wrong measure. What we need to optimize for is work done per dollar and work done per joule (a watt-second). In a partitioned workload running over many servers, we shouldn’t care about or optimize for single server performance. What’s relevant is work done/$ and work done/joule. The CEMS projects investigates optimizing for these metrics rather than raw performance.

Using work done/$ and work done/joule as the optimization point, we tested a $500/slice server design on a high-scale production workload and found nearly 4x improvement over the current production hardware.

.jpg)

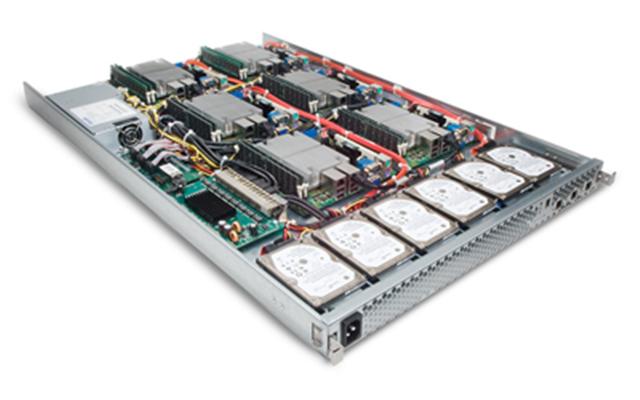

Earlier this week Rackable Systems announced Microslice Architecture and Products. These servers come in at $500/slice and optimize for work done/$ and work done/joule. I particularly like this design in that its using client/embedded CPUS but includes full ECC memory and the price/performance is excellent. These servers will run partitionable workloads like web-serving extremely cost effectively.

–jrh

James Hamilton, Amazon Web Services

1200, 12th Ave. S., Seattle, WA, 98144

W:+1(425)703-9972 | C:+1(206)910-4692 | H:+1(206)201-1859 | james@amazon.com

H:mvdirona.com | W:mvdirona.com/jrh/work | blog:http://perspectives.mvdirona.com

Got it Zach. Yes, there is no question that pushing scheduling decisions higher up the software stack will allow better scheduling decisions with the slight cost of additional complexity.

Thanks,

–jrh

jrh@mvdirona.com

I agree completely about making the wattage efficient for work, and my point with the paper is more generally related to your metrics: how could software adopt your metrics of work/$ and work/jould? In the paper they make the scheduler temp aware, but why not work/$ aware? Could a scheduler know the work/$ ratio for every machine in the DC (assuming that it is heterogenous due to upgrades, varying needs etc) and schedule so as to maximize the way that machines are used for handling requests? Essentially, what is possible if we push the concept of efficiency (again, work/$ + work/joule) up through the hardware into the software layers (OS, App, etc) and would it be value added?

Thanks for the paper Zach. Temp aware scheduling seems like a good way to help make existing data centers work better. It’s a good practical approach. But, given that we know we need to every watt we bring into the building needs to be taken back out. I would rather seen mechanical systems matched to the power distribution system and then optimize to use the resources I purchased as fully as possible.

–jrh

james@amazon.com

dear james or jamina,

Get a haircut or a police officer might pull you over lookin for a little sumthin sumthin

Here is the thermally-aware scheduling reference I was talking about. It’s from USENIX ’05.

HTML version: http://www.usenix.org/events/usenix05/tech/general/full_papers/moore/moore_html/

PDF version: http://www.cs.duke.edu/~justin/papers/usenix05cool.pdf

-Zach

I couldn’t agree more Zach. Small grained failures are much better than big ones. I would much rather be failing (or have fail) a 10 disk server than a 48.

I’m super interested in Atom as well but, so far, all Atom boards are small memory and without ECC. The lack of ECC is a problem in my view.

If you still have the thermally aware scheduler reference, please send it my way. I’m interested.

–jrh

Great work James. Another nice benefit of more lower-power servers is small failure granularity. A single server failure has smaller impact on overall performance, although this of course depends on how components (HD) are shared among servers as well. I look forward to reading about experiences with Atom and SSD as both sound promising, particularly for I/O bound tiers.

There also seems to be promise in extending these principles into the software stack as well. I’ve seen work done on thermally-aware schedulers, but haven’t run across anything that integrates you metrics into workload distribution systems.

-Zach