Optical Archive Inc. was a startup founded by Frank Frankovsky and Gio Coglitore. I first met Frank many years ago when he was Director of the Dell Data Center Solutions team. DCS was part of the massive Dell Computer company but they still ran like a startup. And, with Jimmy Pike leading many of their designs, they produced some very innovative hardware systems. I met Gio quite a bit earlier. He was a founder of Rackable Systems and, in the early days, they were by far my favorite hardware design team. One phone call to Gio and new system designs would start showing up at my office the following week. The world has changed dramatically since that time. Rackable became Silicon Graphics and the Dell company is focusing on other markets. The mega data center operators are now mostly doing their own compute and storage server designs, working directly with ODMs to have them built. Gio and Frank have both long since moved on as well.

In their latest project, Gio and Frank have targeted the massive cold storage market. The archive industry conventional wisdom is that cold storage at scale can only be done cost effectively on tape. I’ve argued for years that the hard disk drive manufacturers could and should win this market. But, to deliver on this opportunity, each would need to spend $200m to $500m on custom disk designs uniquely targeting cold storage. Disk drive manufacturers have gotten ever more efficient over the years and they know the best way to control their R&D costs is to use the same hardware platform across their product line. Different disks targeting very different market segments will share many and sometimes all components but the firmware. Sharing the hardware platform broadly allows disk drive manufacturers to maintain reasonable margins by amortizing R&D across very large volumes.

But, leveraging an existing disk design will not produce a winning product for archival storage. Using disk would require a much larger, slower, more power efficient and less expensive hardware platform. It really would need to be different from current generation disk drives. Designing a new platform for the archive market just doesn’t feel comfortable for disk manufacturers and, as a consequence, although the hard drive industry could have easily won the archive market, they have left most of the market for other storage technologies.

Disk manufacturers will continue to incrementally produce better archive platforms, and larger form factors and higher platter counts will eventually come out. But tape will incrementally get better as well, and I don’t see disk as likely to be a good choice for a high scale archive operator in the foreseeable future. This makes it sound like tape dominance at the top of the archive market is assured but I actually think that new technologies have a very good chance of offering step function cost improvements while tape continues to grind along improving relatively slowly.

One archive storage technology that has caught my interest is optical. More than a decade back, optical was expected to be big but the client driven R&D investment levels didn’t bring the needed higher densities fast enough and the technology has been largely relegated to television production, entertainment, and related vertical markets.

Gio and Frank founded Optical Archive to exploit the combination of increasingly fast optical storage technology improvements and advanced, low cost robotics, allowing the production of very high density storage solutions with lifetimes measured in decades, low power consumption, and an overall cost of storage that can compete very well against tape. They also felt that optical would scale down better without giving up the cost advantage. The feeling was you shouldn’t have to be the Large Hadron Collider to be able to achieve the needed scale for low cost archival storage.

Sony, as a supplier to OAI, was working closely with them during the early days of the company and, on May 27th last year, acquired Optical Archive Inc. Today we get to see the fruits of the last several years and it’s a fairly impressive system.

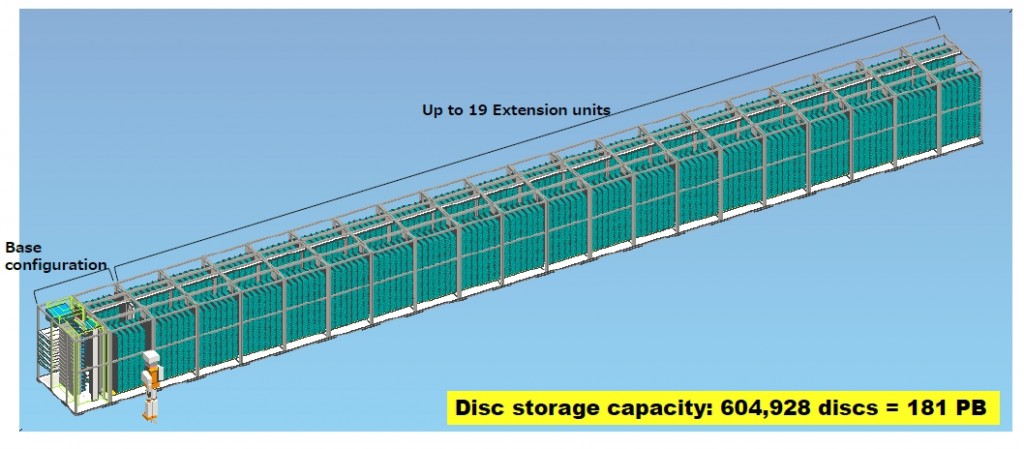

This system is a fully expanded version of Everspan, soon to be available from Sony. It holds a massive 181 petabytes on 604,928 optical disks. The optical disk density road map looks good and I expect that this system will double in storage capacity as new optical technology from Sony and Panasonic comes online over the next 2 to 3 years.

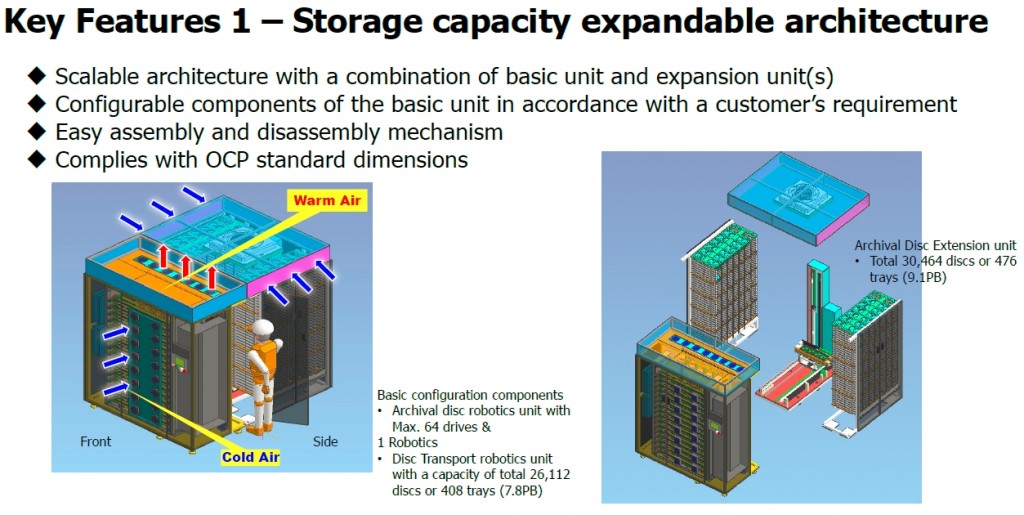

The minimum configuration is 144” long, 95” high, and 74” across and includes 16 optical drives and up to 39,168 disks. Without footprint change, the base system can be configured with up to 64 optical drives. The base configuration is roughly 12’ long, 8’ high, and 6’ across and the design point is to take roughly the space of two rows of servers and then, as capacity is required, it can be extended out by up to 14 expansion units, each of which holds 43,520 disks. The fully configured system is a pair of data center rows running out to just under 123’, with 181 petabytes housed on 604,928 optical disks. The system will be generally available in July 2016.
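
The capacity figures above are easy to sanity check with a little arithmetic. The sketch below is only a back-of-envelope check and not anything from Sony: the constant and function names are my own, and it assumes decimal petabytes (1 PB = 1,000,000 GB).

```python
# Back-of-envelope check of the Everspan figures quoted in the text.
# All names here are illustrative; the numbers come from the post itself.

BASE_DISKS = 39_168          # disks in the base (minimum footprint) unit
EXPANSION_DISKS = 43_520     # disks per expansion unit
QUOTED_TOTAL_DISKS = 604_928 # disks quoted for the fully configured system
QUOTED_CAPACITY_PB = 181     # capacity quoted for the fully configured system


def total_disks(expansion_units: int) -> int:
    """Disks held by one base unit plus the given number of expansion units."""
    return BASE_DISKS + expansion_units * EXPANSION_DISKS


def expansion_units_for(total: int):
    """Expansion units needed to reach exactly `total` disks, or None if
    `total` is not the base unit plus a whole number of expansion units."""
    units, remainder = divmod(total - BASE_DISKS, EXPANSION_DISKS)
    return units if remainder == 0 and units >= 0 else None


# Assumption: decimal units, so 1 PB = 1e6 GB.
gb_per_disk = QUOTED_CAPACITY_PB * 1e6 / QUOTED_TOTAL_DISKS

if __name__ == "__main__":
    print("expansion units matching quoted total:",
          expansion_units_for(QUOTED_TOTAL_DISKS))
    print("disks with 14 expansion units:", total_disks(14))
    print(f"implied capacity per disk: {gb_per_disk:.1f} GB")
```

With these inputs, the quoted 604,928 disks corresponds to the base unit plus 13 expansion units, a system with 14 expansion units would hold 648,448 disks, and 181 PB spread over 604,928 disks works out to roughly 299 GB per disk.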

I’ve always said it’s never been affordable to throw data away and the Everspan goal is to make it less expensive to do the right thing. Overall, it’s good to see new storage technologies continue to come to the massive archival market. Competition drives innovation and accelerates cost reductions.

Nice article. Two questions:

(1) Where should a researcher look for opportunities to talk to and listen to practitioners? Some possibilities are industry tracks at good conferences (e.g. Sigcomm), informal talks during conferences, occasional distinguished lectures at universities, or doing an internship at the right place. Are there other ways to hear about industry problems?

(2) Why doesn’t industry keep a publicly accessible list of problems that are important to them and that they would like to see solved well? Why do good researchers have to go out and find the right problem the hard way? Shouldn’t the hard work go into coming up with the right solution?

Thanks.

Two unusually insightful questions. In my opinion, one of the most important attributes of good research is working on the right problems. Getting access to industry problems is a great start to producing relevant research that makes a difference. David Patterson and his collaborators at Cal Berkeley are a great example. They have produced amazing results over the years including RISC computing, RAID storage, and Apache Spark. Every 6 months, they do offsite meetings where industry leaders are invited to speak, contribute, and comment on the research the team has underway. Dave Patterson recently retired but the approach lives on at Berkeley and elsewhere. Try to get an invite to an AMP Lab retreat (https://amplab.cs.berkeley.edu) or ask to attend a retreat from a Berkeley alum like Garth Gibson at CMU (http://www.cs.cmu.edu/~garth/). UCSD and many others have similar meetings where industry is invited to review the research work. In my opinion, the Berkeley approach is an excellent one to get a research team exposed to interesting problems.

Another approach is to do an internship at an industrial research operation like Microsoft Research (https://www.microsoft.com/en-us/research/). The Microsoft Research team is led by Harry Shum, and an internship there is another way to see interesting, industry-relevant problems.

Finally, you asked why industry doesn’t post a set of problems they would like researched. There are many reasons, two of which are: 1) industry players are competitors and don’t always want to tell others where the most interesting problems lie, and 2) industry players have very different backgrounds and sometimes quite different customers, and so may have different perspectives on where the interesting problems lie.

A good tactic is to get to know some players from industry and even consider inviting some industry leaders in to review and comment on the research work you are doing. Everyone is busy so this only works if it’s bootstrapped. The work has to be interesting, the faculty members strong, and the students good to attract industry participation. But, once achieved, it’s a powerful approach and one I would strongly recommend. An internship at a research organization that has a vibrant community of industry contributors is a great way to get access to hard, industry-relevant problems.