Tesla hosted Autonomy Day for Analysts on Monday April 22nd beginning at 11am. The video is available at https://www.youtube.com/watch?v=Ucp0TTmvqOE. It’s a bit unusual for a corporate video in that it is 3 hours and 52 minutes long, but it’s also unusual in that it has far more real content than is typically shared about commercial systems. It starts with 1 hour and 9 minutes of nice but quickly repetitive pictures of Teslas. That part is worth watching for three or four minutes, but the interesting material starts with Pete Bannon on the inference ASIC that they call the Full Self Driving (FSD) chip. That segment runs between 1:12 and 1:35 with questions running to 1:51. There isn’t really anything before that. Pete covers a lot and offers excellent detail. It’s quite good and worth watching.

When looking at inference chips, everyone does large multiply/add arrays, so that’s more or less expected. What’s interesting is what’s around the multiply/add unit. What else did they spend the chip real estate on after the multiply/add unit and I/O?

Generally, what catches my interest when looking at inference ASICs is memory bandwidth and the approach to managing it. The options are to either spend a lot and have all the bandwidth you need, or to get creative and work very hard to keep the memory subsystem out of the way. The most common version of the first approach is to use HBM (High Bandwidth Memory), which in many ways is the right long-term answer. But HBM is currently expensive and will stay expensive until there are at least 2 and probably 3 sources with very high volume and there isn’t a shortage of manufacturing capacity. We’re quite a ways away from that at this point.

Tesla took the second approach to memory bandwidth and chose to use conventional and inexpensive LPDDR4 (Low Power Double Data Rate Synchronous Dynamic Random Access Memory). What I found most interesting is what they did to achieve the performance they needed with a relatively low-performance, low-cost, and low-power memory subsystem:

- LPDDR4 128b @ 4,266 Mbps per pin

- Backed by 32MB SRAM to reduce memory access rate

- Pipelined parallelism, with DMA loading as much as possible before it is needed, to get more from a low-cost memory subsystem

- End-to-end CRC to detect and correct bit errors in memory, caching, or transport

Nice work from my perspective. Other attributes of interest:

- Goal to stay below 100W while delivering at least 50 TOPS in the same form factor with the same cooling provisions as the past board, to support retrofit into older Teslas

- Need to support inference batch size of one for lower latency

- 2 GHz (not pushing hard and keeping thermals under control)

- Full board small enough to fit behind the glove box

- Appears to be liquid cooled (not confirmed but likely to reduce space and placement constraints)

- 2 Neural Network Processors on a board each including a 96×96 multiply/add array

- 12x A72 cores at 2.2 GHz

- Final board to support same I/O capabilities as previous board in same footprint with the same cooling design

Timeline for FSD ASIC design to production:

- Feb 2016: Pete Bannon hired at Tesla (background 12 years at PA Semi & Apple)

- Aug 2017: Design done (18 month design)

- Dec 2017: 1st chip back from Samsung

- Apr 2018: P0 Rev completed

- Jul 2018: Qualified final production part

- Dec 2018: Full software stack operational

- Mar 2019: Tesla Models S & X ship with new part

- Apr 2019: Tesla Model 3 ships with new part

- Note: They plan to allow upgrading any Tesla shipped with the full sensory array required to the new FSD hardware and software stack

Detail on the FSD ASIC:

- 37.5mm x 37.5mm Ball Grid Array (BGA) Package

- 2,116 external pins (balls).

- 12,464 C4 (Controlled Collapse Chip Connection) bumps

- Samsung 14nm FinFET CMOS process

- 260mm^2 (medium sized part that should yield well)

- 250 million gates

- 6B transistors

- Chip manufactured and tested to Automotive Electronics Council (AEC) Q100 criteria (Failure Mechanism Stress Test Qualification for Integrated Circuits)

Each ASIC includes:

- Camera Serial Interface supports 2.5G pixels/second

- On chip network delivers data from sensors to the two redundant memory systems

- 128b wide LPDDR4 memory operating at 4,266 Mbps per pin with a 68 GB/second peak aggregate bandwidth

- Integrated Image Signal Processor supporting 1G pixels/second with advanced tone mapping and noise reduction

- 96×96 multiply/add array

- Dedicated ReLU unit (they report it can also support tanh and sigmoid activation functions)

- 32MB SRAM (minimize memory bandwidth required and reduce power)

- H.265 video encoder (backup camera and dash camera)

- GPU: 1 GHz delivering 600 GFLOPS and supporting FP32 and FP16

- 12x ARM A72 cores operating at 2.2 GHz

- Safety system: Takes final plans from neural system and validates prior to exchanging plans with partner FSD ASIC to ensure results match prior to execution

- Security system: Ensures only Tesla software can run
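The 68 GB/second peak figure quoted for the LPDDR4 interface can be checked with simple arithmetic. This is only a sketch, and it assumes the per-pin rate is 4,266 Mbps (4.266 Gbps), which is the reading consistent with a 128-bit bus producing roughly 68 GB/second. The function name is made up for illustration.

```python
def peak_bandwidth_gb_per_s(bus_width_bits: int, gbps_per_pin: float) -> float:
    """Peak bandwidth in GB/s for a bus of the given width and per-pin data rate."""
    return bus_width_bits * gbps_per_pin / 8  # 8 bits per byte


# Assumption: 4,266 Mbps per pin, i.e. 4.266 Gbps, on a 128-bit interface.
lpddr4_gb_per_s = peak_bandwidth_gb_per_s(bus_width_bits=128, gbps_per_pin=4.266)
print(f"{lpddr4_gb_per_s:.1f} GB/s")  # 68.3 GB/s
```

That number is small next to what an HBM-based design would offer, which is why the 32MB of SRAM and the DMA prefetching matter so much here.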

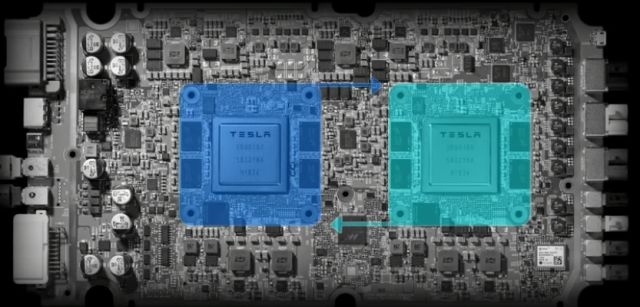

FSD board includes:

- Two independent computers, each with:

  - An independent FSD ASIC at the core

  - Independent power supply (PSU)

  - Independent memory

  - Independent flash storage

- Each compute system has access to the I/O:

  - 8 video cameras (4 on each PSU)

  - RADAR

  - GPS

  - Inertial measurement system

  - Ultrasonic sensors

  - Wheel speed sensors

  - Steering angle

  - Maps

- Each cycle, each can read:

  - 256 bytes of activation data

  - 128 bytes of weight data

- Each can deliver 36 TOPS at 2 GHz for 72 TOPS aggregate

- Each system computes an independent plan and exchanges its computed plan with the other system. If the plans match, the plan is acted upon and requests are sent to the actuators that actually drive the car.

- Each system has access to sensor data to validate that the actuators actually did the right thing
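The 36 TOPS figure follows from the array size and clock rate. The sketch below assumes each multiply/add counts as two operations and that the array is fully utilized every cycle, which is a peak rather than a sustained number. It also derives the on-chip read rate implied by the per-cycle byte counts; that last number is my own arithmetic and not something quoted in the talk.

```python
ARRAY_ROWS = 96
ARRAY_COLS = 96
OPS_PER_MAC = 2   # assumption: one multiply plus one add per cell per cycle
CLOCK_HZ = 2e9    # 2 GHz

macs_per_cycle = ARRAY_ROWS * ARRAY_COLS                          # 9,216
tops_per_array = macs_per_cycle * OPS_PER_MAC * CLOCK_HZ / 1e12   # 36.864
tops_two_arrays = 2 * tops_per_array                              # 73.728

# Read traffic implied by 256 B of activations plus 128 B of weights per cycle.
bytes_per_cycle = 256 + 128
read_gb_per_s = bytes_per_cycle * CLOCK_HZ / 1e9                  # 768.0

print(f"{tops_per_array:.1f} TOPS per array, {tops_two_arrays:.1f} TOPS for two")
print(f"{read_gb_per_s:.0f} GB/s of reads to keep one array fed")
```

The roughly 36.9 and 73.7 TOPS results round to the 36 and 72 TOPS quoted. The implied read rate is more than ten times the 68 GB/second LPDDR4 peak, which shows how much of the work the on-chip SRAM has to absorb.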

The Tesla competitive advantage:

- Vertical integration. One customer and one set of requirements

- 500k cars currently in the fleet all equipped with 8 cameras and other sensors

- Will have 1m cars in the on-the-road fleet in 1 year

- Everyone else has simulators but not the depth of real world miles

- Tesla has simulators as well but you can’t beat reality when it comes to events in the long tail

Elon’s take on LIDAR: LIDAR is a fool’s errand. Anyone relying on LIDAR is doomed. Expensive sensors that are unnecessary.

Overall, it’s a nice design. They have adopted a conservative process node and frequency. They have taken a pretty much standard approach to inference by mostly leaning on a fairly large multiply/add array, in this case a 96×96 unit. What’s particularly interesting to me is what’s around the multiply/add array and their approach to extracting good performance from a conventional low-cost memory subsystem. I was also interested in their use of two redundant inference chips per car, with each exchanging results each iteration to detect errors before passing the final plan (the actuator instructions) to a safety system for validation before the commands are sent to the actuators. Performance and price/performance look quite good.

Full video: Tesla Autonomy Day (first content at 1:12)

Seems kind of astounding (but expected) that the timeline doesn’t mention Jim Keller. Also a little eyebrow raising that it doesn’t mention Chris Lattner.

It was an ASIC roadmap and Chris Lattner was working in a software role so I wouldn’t necessarily expect him to be mentioned. Jim Keller is a microprocessor designer so, if he was part of the ASIC design, you would expect him to be mentioned. The impression I got is that when Jim was leading the hardware design team, they weren’t doing ASIC development and that work only started when Pete Bannon took over.

I am curious to see what they do in terms of storage of sensor data and inferential outcome data in case of a crash… for now the EDR records that they keep are very light, but I suspect there is a lot more being kept by Tesla for internal analysis and it will be very interesting to see whether legislation will eventually force them to make this type of data public.

You are probably right. Some will like it but many will argue it’s an invasion of privacy like accessing cell phone data and should require a warrant.

One other thing that comes to mind looking at your analysis is that Tesla is obviously banking on a very very DNN-heavy approach to full autonomy… this in itself is an interesting point of view, and of course corroborates Musk’s public statements… but they are really going whole hog, with little room for corrections if they suddenly realise that LiDAR, ToF etc… make sense.

It’s hard to know for sure without more detail on their part but I suspect they could use it for LIDAR processing as well. Generally they have a lot of assets at their disposal with a 96×96 multiply/add unit, a dedicated ReLU unit (they claim support for other activation functions), 12 ARM cores at 2.2 GHz, and a small GPU. I suspect they would be fine if they needed to process LIDAR and they don’t sound close to wanting to :-).

>Each system computes an independent plan and exchanges their computed plan with the other system.

>If the plans match, the plan is acted upon and requests are sent to the actuators that actually drive the car

I wonder what they do when the results don’t match. How do they decide what’s a fail-safe action? Alerting a human is useless on that time scale, there’s no third chip, no visible arbiter on the board, and there might be no time to repeat the computation.

I was thinking exactly the same thing. The human operator SHOULD be ready to go but we know many aren’t close to ready even in semi-autonomous driving systems. On a fully autonomous system, the odds of a human being ready to take over and not do anything stupid in the first few seconds out of surprise seem low. Just giving up and changing nothing clearly doesn’t work. Slowing quickly has some risk as well and requires that the system is still able to take action in order to decelerate. It seems like the only reasonable solution is to always have a failsafe plan calculated. And, if the primary plan just calculated is failing validation, try again. If it is still failing, execute the last known good backup plan. The backup plan would always be computed in parallel with the primary plan but the backup plan’s goal would be the safest shutdown to a safe location (e.g. pull over on the shoulder on a highway).

I suspect the first solutions will be to return control to a human but that clearly isn’t going to be a great choice especially as the driver gets used to this “never happening.”
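The retry-then-fall-back idea discussed above can be sketched in a few lines. Everything here is hypothetical: the `Plan` fields, the `choose_plan` function, and the retry count are invented for illustration, and nothing in the talk says this is how Tesla handles a mismatch.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Plan:
    """Hypothetical actuator request; the fields are illustrative only."""
    steering_deg: float
    accel_mps2: float


def choose_plan(
    compute_a: Callable[[], Plan],
    compute_b: Callable[[], Plan],
    validate: Callable[[Plan], bool],
    safe_exit_plan: Plan,
    max_attempts: int = 2,
) -> Plan:
    """Return a plan both systems agree on, else the pre-validated safe-exit plan."""
    for _ in range(max_attempts):
        plan_a, plan_b = compute_a(), compute_b()
        # Act only when the two independent results match and pass validation.
        if plan_a == plan_b and validate(plan_a):
            return plan_a
    # Primary planning kept failing: fall back to the last known good backup.
    return safe_exit_plan


cruise = Plan(steering_deg=0.0, accel_mps2=0.5)
pull_over = Plan(steering_deg=2.0, accel_mps2=-2.5)

agreed = choose_plan(lambda: cruise, lambda: cruise, lambda p: True, pull_over)
mismatch = choose_plan(lambda: cruise, lambda: Plan(5.0, 0.5), lambda p: True, pull_over)
print(agreed == cruise, mismatch == pull_over)  # True True
```

The key design point is that the safe-exit plan is computed and validated ahead of time, so falling back does not depend on the failing path producing anything.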

The safety system on the chip, shown at 1:23:36 (Control Validation), could be part of an answer.

Yes, at that point he describes the safety system as two CPUs that move together in lockstep and are the final arbitrators of whether the plan is safe to send to the actuators. But what’s not said is what happens if the plan does not pass. Presumably the system can try again a few times while the car continues executing new plans but, if the system can’t get a good plan, what does it do? Just throwing up its (virtual) hands and giving a car to a human at 70 MPH in a corner might not work well. Bringing the car to a controlled stop seems like the only reasonable option but coming to a stop requires a plan be executed as well.

A cool solution that only costs 1 more FSD is to always compute a third plan that is the controlled safe exit plan. If it’s validated and ready to go each iteration, then if it becomes impossible to compute new plans, the safe exit plan can be executed. However, it may be the case that this simply isn’t worth the cost, complexity, and power. We know this isn’t implemented but what’s not clear is what Tesla does if it can’t get a plan to execute.

There is no need for an additional full computer to compute a plan. Presumably, 99% of the performance cost is in interpreting the world. Plan construction probably is rather cheap.

I think the failsafe plan technique is a very nice solution. The plan would have to be extremely simple because when the FSD fails there is no further sensory input. So the plan likely would be comprised of a deceleration curve and a steering curve. It would last for a few seconds worth of time until the car has stopped. This stopping action would not achieve full safety but it’s enough as a failsafe.

Elon always stresses that autonomous vehicles only have to be safer than a human driver. He is right about that. So it’s a valid design decision to very rarely bring the car to a mildly unsafe stop.

I agree that, given the option of having a failsafe plan or just giving up and giving control to a driver that wasn’t expecting to be driving, the failsafe plan looks like an obvious win. Even a simple failsafe plan of doing a controlled stop is much better than returning control to the user when the system enters an unsafe state.

Yes, and as soon as possible there will be no steering wheel. Tesla needs to plan for that kind of future.

From my perspective, once a car is “full self driving” the driver won’t expect to take control, so transferring control to the operator won’t be safe even if there is a steering wheel. So the choices appear to be to make any sort of FSD fault so rare that consumers, jurisdictional authorities, and legislators can live with the fault rate or, if that’s not possible, then ensure there is some form of safe shutdown when the system enters an unsafe state.

Even triple redundancy is no guarantee:

https://www.theregister.com/2021/09/06/a330_computer_failure/

Interesting read. It looks like the flight control system saw excess pilot input on rudders when switching modes between lateral control flight and lateral ground and the system locked out. Having the lateral ground lock-out failure mode be no spoilers, no reverse thrust, and no auto-braking, leaving the pilots only manual braking, seems like a poor choice. I would expect the safest failure mode for an aircraft on the ground would be stationary.

The more important safety issue is why the transition between the two flight modes led to control system lock-out. It sounded like the mode switch problem was that pilot rudder pedal input differed greatly from current rudder position during the mode switch.

Getting complex control systems right in normal operation is hard and mode switches are particularly challenging even on far simpler control systems.

Really interesting stuff. Any thoughts on Tesla’s comparison to NVIDIA’s Xavier + NVIDIA’s response? https://blogs.nvidia.com/blog/2019/04/23/tesla-self-driving/

NVIDIA’s arguing that Pegasus would be a better comparison, yet the price/performance on Tesla’s design seems to be a lot better regardless. But this really isn’t my area of expertise…

I agree that Tesla’s part looks better but they are close enough in performance that using either Tesla or Nvidia shouldn’t change any car company’s chance of producing a great self driving car. It looks like either part could be used successfully. Clearly if Nvidia were slightly better, Tesla would no longer use them. And, if the Tesla part is slightly better, the rest of the industry will still use Nvidia or some other competitive part.

Agree. The next years will probably be quite interesting in terms of competition between Tesla and Nvidia. Whoever is winning the race, competition is good.

One minor edit, the hardware video encoder accelerates H.265. It’s at 1:23:00 in the video.

https://youtu.be/Ucp0TTmvqOE?t=4980

Thanks for the correction Ryan.

great level of detail, there is a typo in the timeline: august 2018 vs 2017 for the « design done »

Thanks for the correction Olivier. I’ve made the update.