Archive For The “Hardware” Category

Facebook released Flashcache yesterday: Releasing Flashcache. The authors of Flashcache, Paul Saab and Mohan Srinivasan, describe it as “a simple write back persistent block cache designed to accelerate reads and writes from slower rotational media by caching data in SSD’s.” There are commercial variants of flash-based write caches available as well. For example, LSI has…

High scale network research is hard. Running a workload over a couple of hundred servers says little about how it will run over thousands or tens of thousands of servers. But having tens of thousands of nodes dedicated to a test cluster is unaffordable. For systems research the answer is easy: use Amazon EC2. It’s an…

There is a growing gap between memory bandwidth and CPU power and this growing gap makes low power servers both more practical and more efficient than current designs. Per-socket processor performance continues to increase much more rapidly than memory bandwidth and this trend applies across the application spectrum from mobile devices, through client, to servers….

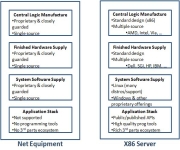

The networking world remains one of the last bastions of the mainframe computing design point. Back in 1987 Garth Gibson, Dave Patterson, and Randy Katz showed we could aggregate low-cost, low-quality commodity disks into storage subsystems far more reliable and much less expensive than the best purpose-built storage subsystems (Redundant Array of Inexpensive Disks). The…

For several years I’ve been interested in PUE<1.0 as a rallying cry for the industry around increased efficiency. From PUE and Total Power Usage Efficiency (tPUE) where I talked about PUE<1.0: In the Green Grid document [Green Grid Data Center Power Efficiency Metrics: PUE and DCiE], it says that “the PUE can range from 1.0…

Very low-power scale-out servers — it’s an idea whose time has come. A few weeks ago Intel announced it was doing Microslice servers: Intel Seeks new ‘microserver’ standard. Rackable Systems (I may never manage to start calling them ‘SGI’ – remember the old MIPS-based workstation company?) was on this idea even earlier: Microslice Servers. The…

Some time back I whined that Power Usage Efficiency (PUE) is a seriously abused term: PUE and Total Power Usage Efficiency. But I continue to use it because it gives us a rough way to compare the efficiency of different data centers. It’s a simple metric that takes the total power delivered to a facility (total…

In an earlier post, Andy Bechtolsheim at HPTS 2009, I put up my notes on Andy Bechtolsheim’s excellent talk at HPTS 2009. His slides from that talk are now available: Technologies for Data Intensive Computing. Strongly recommended. James Hamilton e: jrh@mvdirona.com w: http://www.mvdirona.com b: http://blog.mvdirona.com / http://perspectives.mvdirona.com

I’ve attached below my rough notes from Andy Bechtolsheim’s talk this morning at High Performance Transaction Systems 2009. The agenda for HPTS 2009 is up at: http://www.hpts.ws/agenda.html. Andy is a founder of Sun Microsystems and of Arista Networks and is an incredibly gifted hardware designer. He’s consistently able to innovate and push the design limits…

I attended the Stanford Clean Slate CTO Summit last week. It was a great event organized by Guru Parulkar. Here’s the agenda: 12:00: State of Clean Slate — Nick McKeown, Stanford 12:30: Software defined data center networking — Martin Casado, Nicira 1:00: Role of OpenFlow in data center networking — Stephen Stuart, Google 2:30: Data…

I love Solid State Disks and have written about them extensively: SSD versus Enterprise SATA and SAS disks; Where SSDs Don’t Make Sense in Server Applications; Intel’s Solid State Drives; When SSDs Make Sense in Client Applications; When SSDs Make Sense in Server Applications; 1,000,000 IOPS; Laptop SSD Performance Degradation Problems; Flash SSD in 38%…

In past posts such as Web Search Using Small Cores I’ve said “Atom is a wonderful processor but current memory managers on Atom boards don’t support Error Correcting Codes (ECC) nor greater than 4 gigabytes of memory. I would love to use Atom in server designs but all the data I’ve gathered argues strongly that…

Data center networks are nowhere close to the biggest cost or even the most significant power consumers in a data center (Cost of Power in Large Scale Data Centers) and yet substantial networking constraints loom large just below the surface. There are many reasons why we need innovation in data center networks but let’s…

Microsoft’s Chicago data center was just reported to be online as of July 20th. Data Center Knowledge published an interesting and fairly detailed report in: Microsoft Unveils Its Container-Powered Cloud. Early industry rumors were that Rackable Systems (now SGI but mark me down as confused on how that brand change is ever going to help…

I recently came across an interesting paper that is currently under review for ASPLOS. I liked it for two unrelated reasons: 1) the paper covers the Microsoft Bing Search engine architecture in more detail than I’ve seen previously released, and 2) it covers the problems with scaling workloads down to low-powered commodity cores clearly. I…

This is 100% the right answer: Microsoft’s Chiller-less Data Center. The Microsoft Dublin data center has three design features I love: 1) they are running evaporative cooling, 2) they are using free-air cooling (air-side economization), and 3) they run up to 95°F and avoid the use of chillers entirely. All three of these techniques were…