Chris Page, Director of Climate & Energy Strategy at Yahoo!, spoke at the 2010 Data Center Efficiency Summit and presented the Yahoo! Compute Coop design.

Chris reports that the idea of orienting the building so that an external wall faces the prevailing wind, and using the resulting higher pressure on that wall to assist the air handling units, came from looking at farm buildings in the Buffalo, New York area. One example given was the use of natural cooling in chicken coops.

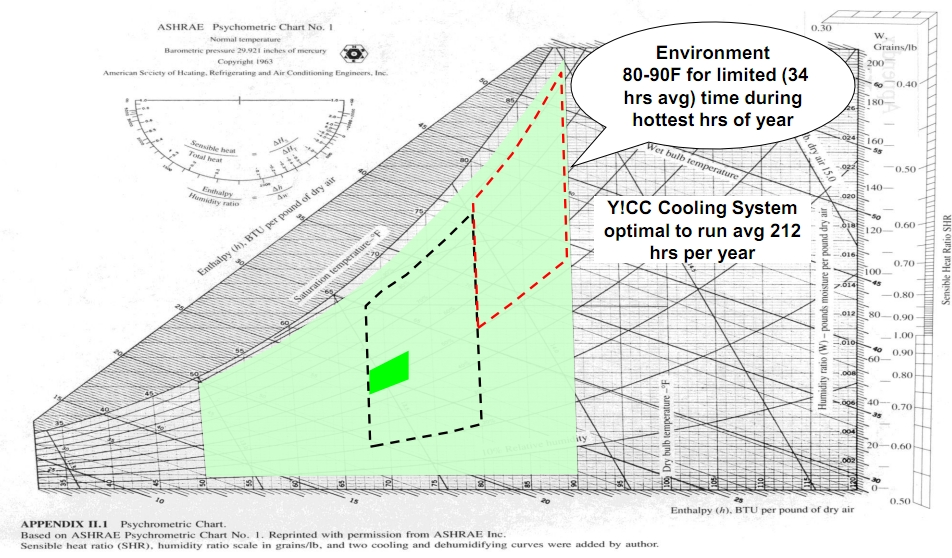

Chiller-free data centers are becoming more common (Chillerless Data Center at 95F), but the design approach is still far from commonplace. The Yahoo! team reports that they run the facility at 75F and use evaporative cooling to try to hold server approach temperatures below 80F. A common approach, and the one used by Microsoft in the Chillerless Data Center at 95F example, is to install low-efficiency coolers for the rare days when they are needed, on the logic that low-efficiency equipment is cheap and perfectly adequate for very rare use. The Yahoo! approach is to avoid the capital cost and power consumption of chillers entirely by allowing cold aisle temperatures to rise to 85F to 90F when they are unable to hold the temperature lower. They calculate that this will happen only 34 hours a year, which is less than 0.4% of the year.
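A quick sanity check of those numbers, as a small Python sketch: it converts the Fahrenheit temperatures above to Celsius and confirms that 34 hours is under 0.4% of a year, assuming a non-leap 8,760-hour year. The helper names are illustrative and not from Yahoo!'s materials.

```python
HOURS_PER_YEAR = 365 * 24  # 8,760 hours; assumes a non-leap year


def f_to_c(f: float) -> float:
    """Convert degrees Fahrenheit to degrees Celsius."""
    return (f - 32.0) * 5.0 / 9.0


def fraction_of_year(hours: float, hours_per_year: int = HOURS_PER_YEAR) -> float:
    """Fraction of the year spent in a given operating mode."""
    return hours / hours_per_year


# Yahoo!'s estimate of hours per year when cold aisle temps are allowed to rise
excursion_hours = 34
share = fraction_of_year(excursion_hours)
print(f"{excursion_hours} h/yr is {share:.3%} of the year")  # 0.388%

# Set points mentioned in the post, in Celsius
for f in (75, 80, 85, 90):
    print(f"{f}F = {f_to_c(f):.1f}C")
```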

Reported results:

· PUE of 1.08 with evaporative cooling, and they believe they could do better in colder climates

· Saves ~36 million gallons of water/yr compared with the previous water-cooled chiller plant design without airside economization

· Saves over 8 million gallons of sewer discharge/yr (zero-discharge design)

· Lower construction cost (not quantified)

· 6 months from dirt to operating facility
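To put a PUE of 1.08 in perspective: PUE is total facility power divided by IT equipment power, so 1.08 means roughly 8% overhead on top of the IT load. The sketch below assumes a hypothetical 1 MW IT load; that figure is for illustration only and was not reported by Yahoo!.

```python
def pue(total_facility_kw: float, it_kw: float) -> float:
    """Power Usage Effectiveness: total facility power / IT equipment power."""
    if it_kw <= 0:
        raise ValueError("IT load must be positive")
    return total_facility_kw / it_kw


def overhead_kw(it_kw: float, pue_value: float) -> float:
    """Non-IT power (cooling, power distribution losses, lighting) implied by a PUE."""
    return it_kw * (pue_value - 1.0)


it_load_kw = 1000.0  # hypothetical 1 MW IT load, not a Yahoo! figure
facility_overhead = overhead_kw(it_load_kw, 1.08)
print(f"PUE 1.08 at {it_load_kw:.0f} kW IT implies ~{facility_overhead:.0f} kW of overhead")
```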

Chris’ slides: http://dcee.svlg.org/images/stories/pdf/DCES10/airandwatercafe130.pdf (Chris’ slides are the middle deck of the three in this PDF)

Thanks to Vijay Rao for sending this my way.

–jrh

b: http://blog.mvdirona.com / http://perspectives.mvdirona.com

We need more information about energy use at different temperatures for servers, storage, and switches.

Even two data points would be useful: energy use at 25C, and energy use at maximum operating temperature, typically 35C. Ideally, we could get an energy use curve from 20C to max operating temperature for any device configuration – some options use more power than others.
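Given even two measured points, a piecewise-linear fit is a reasonable first approximation of the curve between them. This is a minimal sketch, assuming a hypothetical server drawing 300 W at 25C and 345 W at 35C; real curves are unlikely to be linear once fans start ramping, so more measured points would sharpen the estimate.

```python
from bisect import bisect_right


def interpolate_power(curve: list[tuple[float, float]], inlet_c: float) -> float:
    """Piecewise-linear estimate of device power (W) at an inlet temperature (C).

    `curve` is a list of (temperature_c, watts) points sorted by temperature.
    Temperatures outside the measured range are clamped to the nearest
    endpoint rather than extrapolated.
    """
    temps = [t for t, _ in curve]
    if inlet_c <= temps[0]:
        return curve[0][1]
    if inlet_c >= temps[-1]:
        return curve[-1][1]
    i = bisect_right(temps, inlet_c)
    (t0, w0), (t1, w1) = curve[i - 1], curve[i]
    return w0 + (w1 - w0) * (inlet_c - t0) / (t1 - t0)


# Hypothetical two-point curve (illustrative numbers, not vendor data)
server_curve = [(25.0, 300.0), (35.0, 345.0)]
print(interpolate_power(server_curve, 30.0))  # 322.5
```

Adding a point such as (20.0, 298.0) to the list extends the curve toward the 20C end without changing the function.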

That information would help us make rational cost/benefit tradeoffs between higher operating temperatures, higher server energy consumption, lower HVAC energy consumption, and different HVAC designs.
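A sketch of that tradeoff, assuming we had such data: pick the cold aisle set point that minimizes server power plus cooling power. All figures below are hypothetical per-rack numbers, and the sketch considers energy only, not the capital cost of different HVAC designs.

```python
# Hypothetical per-rack power (kW) at candidate cold aisle set points (C).
# Server power rises with temperature as fans ramp; cooling power falls.
server_kw = {22: 10.0, 25: 10.1, 27: 10.3, 30: 10.8, 35: 11.6}
cooling_kw = {22: 3.0, 25: 2.2, 27: 1.6, 30: 1.0, 35: 0.6}


def total_kw(setpoint_c: int) -> float:
    """Server plus cooling power at a given set point."""
    return server_kw[setpoint_c] + cooling_kw[setpoint_c]


best = min(server_kw, key=total_kw)
for t in sorted(server_kw):
    print(f"{t}C: {total_kw(t):.1f} kW")
print(f"lowest total energy at {best}C")  # 30C with these made-up numbers
```

With these numbers the minimum is not at the hottest set point: past 30C the extra server fan power outweighs the cooling savings.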

APC publishes load versus efficiency curves for many of their UPS models. This helps us make decisions about UPS loads and system designs versus UPS power consumption.
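The same kind of curve lets you compare configurations. A minimal sketch, assuming a made-up efficiency curve (not APC data): the same 500 kW load on one 1000 kW UPS at 50% load versus a 2N pair where each unit carries 25%.

```python
# Hypothetical efficiency curve: fraction of rated load -> efficiency.
# Illustrative values only; NOT taken from any APC datasheet.
EFFICIENCY_AT_LOAD = {0.25: 0.90, 0.50: 0.94, 0.75: 0.95, 1.00: 0.95}


def ups_losses_kw(load_kw: float, rated_kw: float, units: int = 1) -> float:
    """Total UPS losses when `units` identical UPSs share `load_kw` equally.

    Only load fractions present in EFFICIENCY_AT_LOAD are supported (a
    KeyError is raised otherwise), which keeps the sketch free of interpolation.
    """
    per_unit_kw = load_kw / units
    fraction = per_unit_kw / rated_kw
    efficiency = EFFICIENCY_AT_LOAD[round(fraction, 2)]
    input_kw = per_unit_kw / efficiency
    return units * (input_kw - per_unit_kw)


single = ups_losses_kw(500.0, 1000.0, units=1)  # one unit at 50% load
dual = ups_losses_kw(500.0, 1000.0, units=2)    # 2N pair, each unit at 25% load
print(f"single: {single:.1f} kW lost, 2N: {dual:.1f} kW lost")
```

Redundancy buys availability, but with a curve like this one it also pushes each unit into its least efficient region.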

Otherwise, we must test in our own labs each proposed combination of device, configuration, and temperature. That’s a reasonable expense if you are buying large quantities of identical devices; much less so for smaller quantities.

Thanks for the correction. I try to avoid referring to the speaker’s sex by saying "they said" rather than "he/she said" since it’s not relevant in this context.

–jrh

FYI, Chris Page from Yahoo! is a woman. You might want to correct your article.

The power densities in most facilities are so high that passive cooling would be a challenge. 2.5 to 3 MW for a small room. That’s a lot of power in a small area. Not impossible but difficult to make work economically.

–jrh

On the same theme, it would be interesting to see if the same passive cooling/ventilation techniques that are being used in the BSkyB TV studios in London could be applied to city centre datacentres – http://www.detail.de/artikel_bskyb-hq-london-arup_27266_En.htm

You’re right that PUE is full of flaws: http://perspectives.mvdirona.com/2010/05/25/PUEIsStillBrokenAndIStillUseIt.aspx.

As you point out, Rocky, it’s easy to design systems that can run (possibly badly) at 35C (95F). In fact, every server out there is warranted to 35C and some to even higher. The real test is the power draw at full operating temperature. That separates the good mechanical designs from the power hogs.

This quote from one slide is interesting:

"Make efforts to avoid server intake temps exceeding 80F as many ramp fans, which increase critical ($$) power usage."
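Why fan ramping is so expensive: under the idealized fan affinity laws, fan power scales roughly with the cube of fan speed. This sketch assumes a hypothetical server fan drawing 5 W at 40% speed; real server fans only approximate the cube law.

```python
def fan_power_w(base_power_w: float, base_speed: float, new_speed: float) -> float:
    """Idealized fan affinity law: power scales with the cube of speed."""
    return base_power_w * (new_speed / base_speed) ** 3


# Hypothetical fan: 5 W at 40% speed (illustrative, not a measured value)
for speed in (0.4, 0.6, 0.8, 1.0):
    print(f"{speed:.0%} speed: {fan_power_w(5.0, 0.4, speed):.1f} W")
```

Doubling fan speed from 40% to 80% multiplies fan power by eight, which is why a few degrees at the inlet can show up as a noticeable jump in critical power.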

One cost of running servers at higher temperatures is higher server power consumption – but we save on HVAC system construction and operating costs.

And PUE goes down. Be careful what you measure!

If we ask server manufacturers to design systems that run at higher temperatures, they could install higher-powered fans relatively cheaply.

And PUE goes down even further.

I don’t think that’s what we want.
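A small numeric illustration of how PUE can improve while total power gets worse, using hypothetical figures: raising inlet temperatures cuts cooling overhead by 50 kW, but server fans ramp and add 60 kW to the IT load, which PUE counts in the denominator.

```python
from dataclasses import dataclass


@dataclass
class Scenario:
    name: str
    it_kw: float        # servers, storage, network (server fans included)
    overhead_kw: float  # cooling, power distribution losses, lighting

    @property
    def total_kw(self) -> float:
        return self.it_kw + self.overhead_kw

    @property
    def pue(self) -> float:
        return self.total_kw / self.it_kw


# Hypothetical numbers for illustration only
cool = Scenario("cool inlet", it_kw=1000.0, overhead_kw=150.0)
warm = Scenario("warm inlet, fans ramped", it_kw=1060.0, overhead_kw=100.0)

for s in (cool, warm):
    print(f"{s.name}: total {s.total_kw:.0f} kW, PUE {s.pue:.3f}")
```

PUE drops from 1.150 to about 1.094 while the facility draws 10 kW more, so total power belongs next to PUE in any comparison.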