Back in 2009, in "Datacenter Networks are in my way," I argued that the networking world was stuck in the mainframe business model: everything vertically integrated. In most datacenter networking equipment, the core Application Specific Integrated Circuit (ASIC, the heart of a switch or router), the entire hardware platform for the ASIC including power and physical network connections, and the software stack including all the protocols come from a single vendor, and there is no practical mechanism to make different choices. This is how the server world operated 40 years ago, and we get much the same result. Networking gear is expensive, interoperates poorly, is expensive to manage, and is almost always over-subscribed, constraining the rest of the equipment in the datacenter.

Further exacerbating what is already a serious problem, networking equipment, unlike the mainframe servers of 40 years back, is also unreliable. Each device runs tens of millions of lines of code under the hood, forming frustratingly productive bug farms. Each fault is met with a request from the vendor to “install the latest version.” The new build is usually different from what was previously running, but “different” isn’t always good when running production systems; it’s just a new set of bugs to start chasing. The core problem is that many customers ask for complex features they believe will make their networks easier to manage, and the networking vendor knows that delivering these features keeps the customer uniquely dependent upon that vendor’s single solution. One obvious downside is the vendor lock-in that follows from these helpful features, but the larger problem is that these extensions are usually not broadly used and not well tested in subsequent releases, so the vendor protocol stacks become yet more brittle as they grow more complex by aggregating all these unique customer requests. This is an area in desperate need of change.

Because networking gear is complex, equipment from different vendors (and sometimes from the same vendor) still interoperates poorly, despite all of it implementing the same RFCs. It’s very hard to deliver reliable networks at controllable administration costs while freely mixing and matching equipment from multiple vendors. The customer is locked in, the vendors know it, and network equipment prices reflect that realization.

Not only is networking gear expensive in absolute terms, but the relative expense of networking is actually increasing over time. Tracking the cost of networking gear as a ratio of all the IT equipment (servers, storage, and networking) in a datacenter, a terrible reality emerges: for a given spend on servers and storage, the required network cost has gone up every year I have been tracking it. Without a fundamental change in the existing networking equipment business model, there is no reason to expect this trend to change.
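To make the tracked ratio precise, here is one way to write it down. The notation is ours and no actual cost figures are implied: let $C_{\text{srv}}$, $C_{\text{sto}}$, and $C_{\text{net}}$ be the spend on servers, storage, and networking.

$$
r = \frac{C_{\text{net}}}{C_{\text{srv}} + C_{\text{sto}} + C_{\text{net}}} = \frac{x}{1 + x},
\qquad
x = \frac{C_{\text{net}}}{C_{\text{srv}} + C_{\text{sto}}}
$$

Because $x/(1+x)$ increases with $x$, the networking share $r$ rises whenever the network cost required per unit of server and storage spend rises, which is the trend described above.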

Many of the needed ingredients for change have actually been in place for more than half a decade now. We have very high-function networking ASICs available from Broadcom, Marvell, Fulcrum (Intel), and many others. These ASIC vendors compete with each other, driving much faster innovation and ensuring that cost decreases are passed on to customers rather than simply driving more profit margin. Each ASIC design house produces reference designs that are built by multiple competing Original Design Manufacturers (ODMs), each with their own improvements. Taking the widely used Broadcom ASIC as an example, routers based upon this same ASIC are made by Quanta, Accton, DNI, Foxconn, Celestica, and many others. The ODMs compete just as hard, so hardware cost decreases also reach customers rather than further padding networking equipment vendor margins.

What is missing is high-quality control software, management systems, and networking protocol stacks that can run across a broad range of competing, commodity networking hardware. It’s still very hard to take merchant silicon ASICs packaged in ODM-produced routers and deploy production networks. Very big datacenter operators actually do it, but it’s sufficiently hard that this gear is largely unavailable to the vast majority of networking customers.

One of my favorite startups, Cumulus Networks, has gone after exactly this problem: making ODM-produced commodity networking gear broadly available with high-quality software support. Cumulus supports a broad range of ODM-produced routing platforms built upon Broadcom networking ASICs. They provide everything it takes above the bare-metal router to turn an ODM platform into a production-quality router, including support for both layer 2 switching and layer 3 routing protocols such as OSPF (v2 and v3) and BGP. Because the Cumulus system includes and is hosted on a Linux distribution (Debian), many of the standard tools, management, and monitoring systems just work. For example, they support Puppet, Chef, collectd, SNMP, Nagios, bash, python, perl, and ruby.

Rather than implement a proprietary device with proprietary management as the big networking players typically do, or make it look like a Cisco router as many of the smaller players often do, Cumulus makes the switch look like a Linux server with high-performance routing optimizations: essentially, a routing-optimized Linux server.
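Since the switch presents itself as a Debian Linux host, an ordinary Linux monitoring script should need no switch-specific API. The sketch below reads per-interface byte counters from `/proc/net/dev`, the standard Linux kernel interface file. It assumes the front-panel ports show up as regular kernel network interfaces (Cumulus Linux names them `swp1`, `swp2`, and so on); the function name `parse_net_dev` is our own.

```python
import os
from typing import Dict, Tuple


def parse_net_dev(text: str) -> Dict[str, Tuple[int, int]]:
    """Return {interface: (rx_bytes, tx_bytes)} from /proc/net/dev-formatted text."""
    counters: Dict[str, Tuple[int, int]] = {}
    for line in text.splitlines()[2:]:  # the first two lines are column headers
        if ":" not in line:
            continue
        name, data = line.split(":", 1)
        fields = data.split()
        # 8 receive columns come first (rx_bytes is column 0); transmit starts at column 8.
        counters[name.strip()] = (int(fields[0]), int(fields[8]))
    return counters


if __name__ == "__main__":
    path = "/proc/net/dev"
    if os.path.exists(path):  # present on any Linux host, switch or server
        with open(path) as f:
            for iface, (rx, tx) in sorted(parse_net_dev(f.read()).items()):
                print(f"{iface:>10}  rx_bytes={rx}  tx_bytes={tx}")
```

The same script would run unchanged on a server or on a Linux-hosted switch, which is the practical payoff of the "it's just a Linux server" design.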

The business model is similar to Red Hat Linux: the software and support are available on a subscription model at a price point that makes a standard network support contract look like the hostage payout that it actually is. The subscription includes the entire turnkey stack with everything needed to take one of these ODM-produced hardware platforms and deploy a production-quality network. Subscriptions will be available directly from Cumulus and through an extensive VAR network.

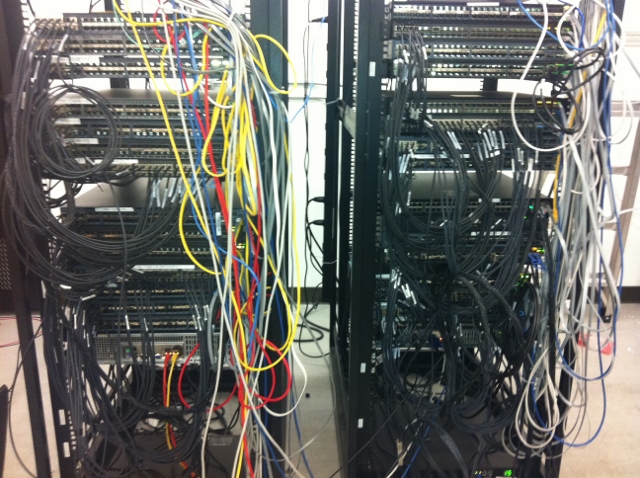

Cumulus supported platforms include Accton AS4600-54T (48x1G & 4x10G), Accton AS5600-52x (48x10G & 4x40G), Agema (DNI brand) AG-6448CU (48x1G & 4x10G), Agema AG-7448CU (48x10G & 4x40G), Quanta QCT T1048-LB9 (48x1G & 4x10G), and Quanta QCT T-3048-LY2 (48x10G & 4x40G). Here’s a picture of many of these routing platforms from the Cumulus QA lab:

[Image: Cumulus-supported routing platforms in the Cumulus QA lab]
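For a rough sense of scale, the sketch below sums the nameplate port speeds of each platform listed above. It is arithmetic on the listed port counts only; it says nothing about ASIC buffering or whether every port sustains line rate at once, and the `PLATFORMS` table and `aggregate_gbps` helper are our own.

```python
from typing import Dict, List, Tuple

# Port configurations as listed above: (port count, speed in Gb/s) per platform.
PLATFORMS: Dict[str, List[Tuple[int, int]]] = {
    "Accton AS4600-54T": [(48, 1), (4, 10)],
    "Accton AS5600-52x": [(48, 10), (4, 40)],
    "Agema AG-6448CU": [(48, 1), (4, 10)],
    "Agema AG-7448CU": [(48, 10), (4, 40)],
    "Quanta QCT T1048-LB9": [(48, 1), (4, 10)],
    "Quanta QCT T-3048-LY2": [(48, 10), (4, 40)],
}


def aggregate_gbps(ports: List[Tuple[int, int]]) -> int:
    """Sum of port speeds in one direction, in Gb/s."""
    return sum(count * speed for count, speed in ports)


for name, ports in PLATFORMS.items():
    total = aggregate_gbps(ports)
    # Switching capacity is commonly quoted full duplex, i.e. doubled.
    print(f"{name:<24} {total:>4} Gb/s per direction, {2 * total:>5} Gb/s full duplex")
```

The 1G platforms come to 88 Gb/s per direction and the 10G platforms to 640 Gb/s. Doubling for full duplex is the same convention behind the 1.28Tbps figure quoted for 64 x 10GbE in the comments below.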

In addition to these single ASIC routing and switching platforms, Cumulus is also working on a chassis-based router to be released later this year:

[Image: Cumulus chassis-based router]

This platform has all the protocol support outlined above and delivers 512 ports of 10G or 128 ports of 40G in a single chassis. High-port-count chassis-based routers have always been available exclusively from the big, vertically integrated networking companies, mostly because they are expensive to design and are sold in lower volumes than the simpler, single-ASIC designs commonly used as Top of Rack switches or as components of aggregation layer fabrics. Cumulus and their hardware partners are not yet ready to release more details on the chassis, but the plans are exciting and the planned price point is game changing. Expect to see this later in the year.
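A quick check that the two chassis configurations describe the same front-panel bandwidth (simple arithmetic on the stated port counts; no other chassis details are public yet):

$$
512 \times 10\,\text{Gb/s} = 5120\,\text{Gb/s} = 128 \times 40\,\text{Gb/s}
$$

Counted full duplex, as switching capacity is often quoted, that is $2 \times 5120\,\text{Gb/s} = 10.24\,\text{Tb/s}$.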

Cumulus Networks was founded by JR Rivers and Nolan Leake in early 2010. Both are phenomenal engineers, and I’ve been a huge fan of their work since meeting them as they were first bringing the company together. They raised seed funding in 2011 from Andreessen Horowitz, Battery Ventures, Peter Wagner, Gaurav Garg, Mendel Rosenblum, Diane Greene, and Ed Bugnion. In mid-2012, they raised a Series A round from Andreessen Horowitz and Battery Ventures.

The pace of change continues to pick up in the networking world and I’m looking forward to the formal announcement of the Cumulus chassis-based router.

–jrh

Charlie said "At the core of all of this traffic, we must have the most efficient packet processing platform possible – which is not the standard Linux networking stack. The per-packet overheads and latencies of the standard stack are well understood, as is the fact that it does not exploit the multicore and acceleration capabilities of today’s processors. Standard hypervisors also limit the performance of virtual appliances thanks to the way that they handle VM-to-VM traffic."

Charlie, I think there may be some confusion here. The routers referenced above are using a Linux-based control plane but all packet processing is 100% in hardware with no Linux interaction. These routers are 100% hardware implemented packet processors.

–jrh
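The split described in the reply above, with routing software running on Linux and every packet forwarded by the ASIC, can be modeled with two hypothetical classes. `HardwareFib` stands in for the ASIC forwarding table and `ControlPlane` for the Linux-side routing process; both names are ours, and this illustrates the general control/data plane pattern, not Cumulus's actual code.

```python
import ipaddress
from typing import Dict, Optional, Union

Network = Union[ipaddress.IPv4Network, ipaddress.IPv6Network]


class HardwareFib:
    """Hypothetical stand-in for the ASIC forwarding table (the data plane)."""

    def __init__(self) -> None:
        self._routes: Dict[Network, str] = {}

    def program(self, prefix: str, out_port: str) -> None:
        # On a real switch this write lands in ASIC tables; here it is just a dict.
        self._routes[ipaddress.ip_network(prefix)] = out_port

    def lookup(self, dst: str) -> Optional[str]:
        # Longest-prefix match. Hardware does this per packet with no CPU involvement;
        # this Python version only models the result.
        addr = ipaddress.ip_address(dst)
        matches = [net for net in self._routes if addr in net]
        if not matches:
            return None
        return self._routes[max(matches, key=lambda net: net.prefixlen)]


class ControlPlane:
    """Hypothetical Linux-side routing process: decides routes, never forwards packets."""

    def __init__(self, fib: HardwareFib) -> None:
        self.fib = fib

    def learn_route(self, prefix: str, out_port: str) -> None:
        # e.g. a route learned from an OSPF or BGP neighbor, pushed down to hardware
        self.fib.program(prefix, out_port)


fib = HardwareFib()
cp = ControlPlane(fib)
cp.learn_route("10.0.0.0/8", "swp1")
cp.learn_route("10.1.0.0/16", "swp2")
cp.learn_route("0.0.0.0/0", "swp52")
print(fib.lookup("10.1.2.3"))  # swp2 (the /16 beats the /8 and the default route)
```

The point of the structure is that the control plane only writes routes; the per-packet `lookup` path never calls back into it, which is why a general-purpose Linux stack's per-packet overhead does not apply to forwarding on these routers.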

Your comment on the need for high-quality control, management and networking protocol stack software is spot on. The most visible trend in the networking world today is the move from an inflexible hardware-centric networking model to a more flexible software-centric approach that exploits commodity hardware, leverages open standards and supports more rapid solution development and innovation. The activities in the Software-defined Networking and Network Functions Virtualization areas are just two examples of software-based innovation.

Networking protocol stack technology is particularly important, especially from a performance perspective. Today, we are not only driving more packets through our networks simply to keep up with user demands (especially for video), but we are also introducing new types of traffic into our networks (for example, the explosion in “East-West” traffic brought on by the increasing number of VMs running in today’s multi-tenant data centers).

At the core of all of this traffic, we must have the most efficient packet processing platform possible – which is not the standard Linux networking stack. The per-packet overheads and latencies of the standard stack are well understood, as is the fact that it does not exploit the multicore and acceleration capabilities of today’s processors. Standard hypervisors also limit the performance of virtual appliances thanks to the way that they handle VM-to-VM traffic.

One example of an advanced, high-performance networking stack comes from 6WIND, a 10-year-old company that offers a software solution deployed by many leading OEMs. This software supports standard Linux networking APIs while implementing a fast path data plane that avoids the inherent performance constraints of the standard Linux networking stack. Typically, this gives a 10x performance improvement over the standard Linux stack, with full support for virtualized implementations. Learn more at http://www.6wind.com.

Charlie Ashton

VP of Marketing and Business Development

6WIND

The Arista 7150 is an impressive product, with a very low latency of 350ns and NAT and VXLAN support. It has 24, 52, or 64 10GbE interfaces with 1.28Tbps of switching capacity. Arista is touting its advanced software capabilities and the benefits of EOS coupled with the platform as making it ideal for modern datacenter applications like Big Data/Hadoop, high-performance computing, and high-frequency trading. In fact, with NAT support built in, this platform could be ideal for latency-sensitive deployments that traditionally wasted 100s of milliseconds NATing through load balancers (think financial platforms and algorithmic trading). I’m certain that financial services and cloud service providers, already strong markets for Arista, are all over this new platform (available in volume very shortly) and are potentially ditching traditional vendors like Cisco. (Editor Note: Cisco announced the Nexus 3548 today to compete with Arista.)

@Ken:

Those are 4 different boxes from 4 different vendors.

From a feature/form-factor standpoint, they are identical, but there are differences under the hood. Cumulus Linux abstracts away those differences so that you don’t have to change anything in software/config if you change hardware vendor.

– nolan
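The abstraction described in the reply above is a classic hardware abstraction layer. The sketch below shows the pattern with made-up vendor port numbering: one vendor-neutral config keyed by `swpN` names is translated by whichever per-platform driver is installed. `PlatformDriver`, `VendorA`, `VendorB`, and `render` are all hypothetical; this is not Cumulus Linux's internal design.

```python
from abc import ABC, abstractmethod
from typing import Dict


class PlatformDriver(ABC):
    """Hypothetical per-platform driver hiding vendor-specific hardware details."""

    num_ports = 52  # 48 x 10G + 4 x 40G, as on the 10G platforms discussed above

    @abstractmethod
    def hw_port(self, front_panel: int) -> int:
        """Map front-panel port swpN to this platform's (made-up) hardware port index."""


class VendorA(PlatformDriver):
    def hw_port(self, front_panel: int) -> int:
        return front_panel - 1  # hypothetical: zero-based, same order as the front panel


class VendorB(PlatformDriver):
    def hw_port(self, front_panel: int) -> int:
        return self.num_ports - front_panel  # hypothetical: reversed order


def render(driver: PlatformDriver, config: Dict[str, str]) -> Dict[int, str]:
    """Translate a vendor-neutral {"swpN": address} config into hardware port terms."""
    out: Dict[int, str] = {}
    for name, addr in config.items():
        if not name.startswith("swp"):
            raise ValueError(f"{name} is not a front-panel port name")
        n = int(name[3:])
        if not 1 <= n <= driver.num_ports:
            raise ValueError(f"{name} does not exist on this platform")
        out[driver.hw_port(n)] = addr
    return out


config = {"swp1": "10.0.0.1/31", "swp49": "10.0.1.1/31"}
print(render(VendorA(), config))  # {0: '10.0.0.1/31', 48: '10.0.1.1/31'}
print(render(VendorB(), config))  # {51: '10.0.0.1/31', 3: '10.0.1.1/31'}
```

The user-facing `config` is identical for both vendors; only the driver changes, which is what lets the hardware be swapped without touching software or configuration.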

I’m inclined to believe the four 10G devices on the Cumulus Networks HCL are the same device.

Accton is Taiwanese.

The AS5600-52X, a 48 x 10G SFP+ and 4 x 40G QSFP+ switch, is visible here:

http://www.accton.com/ProdDtl.asp?sno=DHNJFH

The Quanta QCT T3048-LY2 is visible here:

http://www.quantaqct.com/en/01_product/02_detail.php?mid=30&sid=114&id=116&qs=97

Again it looks like the Accton switch.

The specifications for the Agema (Italian) and Penguin Computing device on the cumulusnetworks.com site seem remarkably similar to the Accton one. I wasn’t able to locate a product page for either of these devices.

Agema is mentioned in English here:

http://www.gmdu.net/corp-559018.html

Hi James, and welcome back! It’s always refreshing to see gratuitous complexity put into its proper perspective. Thanks!

Btw, is there any truth to the rumor that your seafaring blogging proclivities inspired Google to launch its first Loon balloon from NZ?

;)

Frank