I invited Nikhil Handigol to present at Amazon earlier this week. Nikhil is a PhD candidate at Stanford University working with networking legend Nick McKeown on the Software Defined Networking team. Software defined networking is a concept coined by Nick in which the research team separates the networking control plane from the data plane. The goal is a fast and dumb routing engine, with the control plane factored out and supporting an open programming platform.

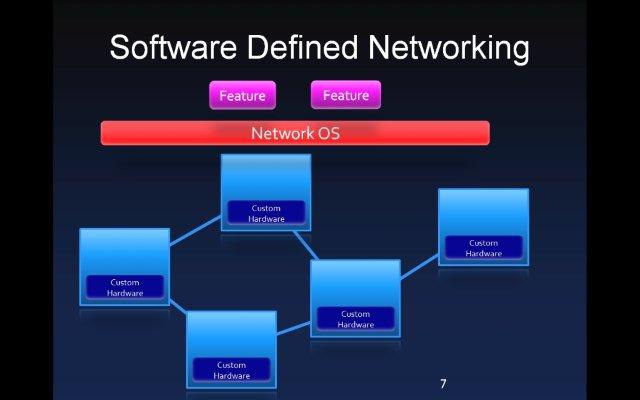

From Nikhil’s presentation, we see the control plane hoisted up to a central, replicated network O/S that configures the distributed routing engines in each switch.

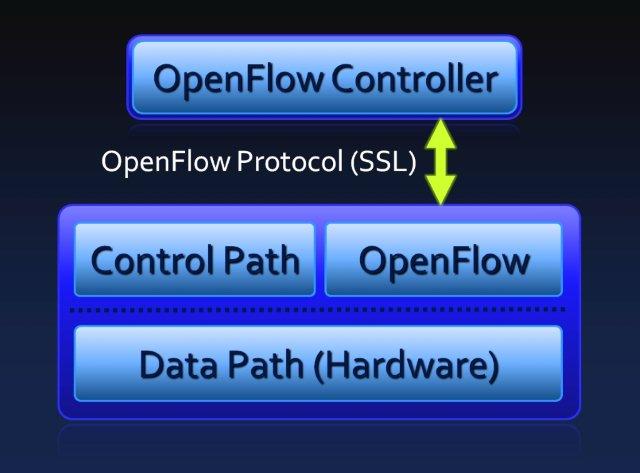

One implementation of software defined networking is OpenFlow, where each router supports the OpenFlow protocol and a central OpenFlow Controller computes routing tables that are installed in each router.
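To make the split concrete, here is a minimal sketch of the idea in Python. It is a toy model and not the OpenFlow protocol or any real controller API: the `Switch` and `Controller` classes, the `TOPOLOGY` dictionary, and the switch names are all hypothetical, and shortest-path routing by breadth-first search is simply my assumption about what a controller might compute. The point is only that the switches hold nothing but a lookup table while one central program sees the whole topology and fills those tables in.

```python
from collections import deque

# Hypothetical four-switch topology: switch name -> neighboring switches.
TOPOLOGY = {
    "s1": ["s2", "s3"],
    "s2": ["s1", "s4"],
    "s3": ["s1", "s4"],
    "s4": ["s2", "s3"],
}


def compute_next_hops(topology, destination):
    """Breadth-first search outward from the destination.

    Returns {switch: next_hop}, the first hop each switch should use
    on a shortest path toward the destination.
    """
    next_hop = {}
    visited = {destination}
    queue = deque([destination])
    while queue:
        node = queue.popleft()
        for neighbor in topology[node]:
            if neighbor not in visited:
                visited.add(neighbor)
                next_hop[neighbor] = node  # neighbor forwards toward node
                queue.append(neighbor)
    return next_hop


class Switch:
    """Data plane only: a forwarding table and a lookup."""

    def __init__(self, name):
        self.name = name
        self.table = {}  # destination switch -> next hop

    def install(self, destination, hop):
        self.table[destination] = hop

    def forward(self, destination):
        # Returns None when no entry has been installed.
        return self.table.get(destination)


class Controller:
    """Central control plane: computes tables and installs them everywhere."""

    def __init__(self, topology):
        self.topology = topology
        self.switches = {name: Switch(name) for name in topology}

    def program_network(self):
        for destination in self.topology:
            hops = compute_next_hops(self.topology, destination)
            for switch_name, hop in hops.items():
                self.switches[switch_name].install(destination, hop)


controller = Controller(TOPOLOGY)
controller.program_network()
print(controller.switches["s1"].table)
```

Changing the routing policy in this model means changing `compute_next_hops` in one place; nothing in `Switch` has to be touched.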

What makes OpenFlow especially interesting is that it’s simple, easy to implement, and gaining broad industry support, with the Open Networking Foundation as the central organizing body. The Open Networking Foundation’s primary mission is to advance software defined networking using OpenFlow as the protocol. Founding members of the Open Networking Foundation are Deutsche Telekom, Facebook, Google, Microsoft, Verizon, and Yahoo!. Members also include networking equipment providers such as Broadcom, Dell, Cisco, Force10, HP, Juniper, Marvell, Mellanox, and many others.

Today, most networking equipment is shipped as a vertically integrated stack including both the control and data planes. There are many reasons why this is not good for the industry. The Stanford team argues that it blocks innovation, in that researchers can’t try new protocols on a closed stack that has no programming model. I agree. This is a problem for both academia and industry, but my dislike of the current model is much broader. In "Networking: The Last Bastion of Mainframe Computing", I made the case that this vertically integrated approach is artificially holding prices high and slowing the pace of innovation. A quick summary of the argument:

When networking equipment is purchased, it’s packaged as a single-sourced, vertically integrated stack. In contrast, in the commodity server world, even the most basic component, the CPU, is multi-sourced: we can get CPUs from AMD and Intel. Compatible servers built from either Intel or AMD CPUs are available from HP, Dell, IBM, SGI, ZT Systems, Silicon Mechanics, and many others. Any of these servers can support both proprietary and open source operating systems. The commodity server world is open and multi-sourced at every layer in the stack.

Open, multi-layer hardware and software stacks encourage innovation and rapidly drive down costs. The server world is clear evidence of what is possible when such an ecosystem emerges.

I’m excited about software defined networking because it provides a clean interface allowing switch providers to both innovate and compete. An additional benefit is that SDN allows innovation and experimentation at the network protocol layer.

In Nikhil’s talk at Amazon, he explored integrating load balancing functionality into the network routing fabric. The team started with the hypothesis that load balancing is really just smart routing. They then implemented a distributed load balancing fabric by adding load balancing support to network routers using software defined networking, essentially distributing the load balancing functionality throughout the network. What’s unusual here is that the ideas could be tested over a nine-campus, North America-wide network with only 500 lines of code. With conventional network protocol stacks, this research would have been impossible because vendors don’t open up their protocol stacks. And even if they did, the work would have been complex and very time consuming.
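A rough illustration of "load balancing is just smart routing", again as a toy Python model rather than the Stanford team's actual code or any OpenFlow API: the `LoadBalancingController` class, its method names, the least-active-flows policy, and the example addresses (taken from the reserved documentation ranges) are all assumptions of mine. The controller treats a new flow aimed at a shared virtual IP as a routing decision, picks a replica, and remembers the choice so that later packets of the same flow follow the same path.

```python
class LoadBalancingController:
    """Hypothetical controller that routes flows for one virtual IP."""

    def __init__(self, virtual_ip, replicas):
        self.virtual_ip = virtual_ip
        # Number of flows currently assigned to each replica.
        self.active_flows = {replica: 0 for replica in replicas}
        # Installed rules: (client_ip, virtual_ip) -> chosen replica.
        self.flow_table = {}

    def handle_new_flow(self, client_ip, dst_ip):
        """Return the address the flow should actually be routed to."""
        if dst_ip != self.virtual_ip:
            return dst_ip  # ordinary traffic is routed unchanged
        key = (client_ip, dst_ip)
        if key not in self.flow_table:
            # Assumed policy: pick the replica with the fewest active flows.
            replica = min(self.active_flows, key=self.active_flows.get)
            self.active_flows[replica] += 1
            self.flow_table[key] = replica
        return self.flow_table[key]


lb = LoadBalancingController(
    virtual_ip="203.0.113.10",
    replicas=["192.0.2.1", "192.0.2.2"],
)
for client in ["198.51.100.1", "198.51.100.2", "198.51.100.3"]:
    print(client, "->", lb.handle_new_flow(client, "203.0.113.10"))
```

In a real deployment the chosen mapping would be pushed down into the switches as forwarding rules, so no dedicated load balancer appliance sits in the data path; that step is omitted here.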

- Nikhil’s slides from his Amazon talk: http://www.mvdirona.com/jrh/TalksAndPapers/Handigol-Amazon-May2011.pdf

–jrh

b: http://blog.mvdirona.com / http://perspectives.mvdirona.com

Exactly. Freedom to experiment, and a platform rich enough and simple enough that stable production solutions can be built.

–jrh

I’m also excited because OpenFlow will support non-TCP/IP protocols, loosening the "tyranny of IP" for protocol design. In a converged network, we might want IP, AoE, FCoE, and protocols yet to be invented to be moved around one network, using non-proprietary switches and routers.