Yesterday the latest Top 500 Supercomputer Sites list was announced. The Top500 list shows the most powerful commercially available supercomputer systems in the world. This list represents the outer limit of the supercomputer performance that is possible when cost is no object. The top placement on the list is always owned by a sovereign funded laboratory. These are the systems that only government funded agencies can purchase. But they are of great interest to me because, as the cost of computing continues to fall, these performance levels will become commercially available to companies wanting to run high scale models and data intensive computing. In effect, the Top500 predicts the future, so I’m always interested in the systems on the list.

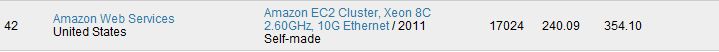

What makes the list released yesterday particularly unusual can be found at position #42. #42 is an anomaly of the first order. In fact, #42 is an anomaly across enough dimensions that it’s worth digging much deeper.

Virtualization Tax is Now Affordable:

I remember reading through the detailed specifications when the Cray 1 supercomputer was announced and marveling that it didn’t even use virtual memory. It was believed at the time that only real-mode memory access could deliver the performance needed.

We have come a long way in the nearly 40 years since the Cray 1 was announced. This #42 result was run not just using virtual memory but with virtual memory in a guest operating system running under a hypervisor. This is the only fully virtualized, multi-tenant supercomputer on the Top500 and it shows what is possible as the virtualization tax continues to fall. This is an awesome result and many more virtualization improvements are coming over the next 2 to 3 years.

Commodity Networks can Compete at the Top of the Performance Spectrum:

This is the only Top500 entrant ranked above #128 on the list that is not running either Infiniband or a proprietary, purpose-built network. This result at #42 runs on an all-Ethernet network, showing that a commodity network, if done right, can produce industry leading performance numbers.

What’s the secret? 10Gbps directly to the host is the first part. The second is a fully non-blocking networking fabric where all systems can communicate at full line rate at the same time. Worded differently, the network is not oversubscribed. See Datacenter Networks are in my Way for more on the problems with existing datacenter networks.
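To make "not oversubscribed" concrete, here is a minimal sketch of the usual oversubscription calculation for a single edge switch. The post does not describe the actual switch topology, so the function name and both switch configurations below are hypothetical and chosen only for illustration.

```python
def oversubscription_ratio(hosts_per_switch: int, host_link_gbps: float,
                           uplinks: int, uplink_gbps: float) -> float:
    """Worst-case host demand divided by uplink capacity for one edge switch.

    A ratio of 1.0 or lower means every host can send at full line rate
    through the uplinks at the same time (non-blocking at this tier).
    """
    downstream_gbps = hosts_per_switch * host_link_gbps
    upstream_gbps = uplinks * uplink_gbps
    return downstream_gbps / upstream_gbps


# Hypothetical configurations, not taken from the post.
oversubscribed = oversubscription_ratio(40, 10, 4, 10)   # 400 Gbps in, 40 Gbps up
non_blocking = oversubscription_ratio(24, 10, 24, 10)    # 240 Gbps in, 240 Gbps up

print(f"oversubscribed design: {oversubscribed:.1f}:1")
print(f"non-blocking design:   {non_blocking:.1f}:1")
```

In the first configuration, hosts can collectively offer ten times more traffic than the uplinks can carry, so cross-switch traffic slows down under load. In the second, uplink capacity matches host capacity, which is the property the post describes.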

Commodity Ethernet networks continue to borrow more and more implementation approaches and good network architecture ideas from Infiniband. Scale economics continue to drive down costs, so non-blocking networks are now practical and affordable, and those same scale economics are pushing rapid innovation. Commodity equipment in a well-engineered overall service is where I see the future of networking continuing to head.

Anyone can own a Supercomputer for an hour:

You can’t rent supercomputing time by the hour from Lawrence Livermore National Laboratory. Sandia is not doing it either. But you can have a top 50 supercomputer for under $2,600/hour. That is one of the world’s most powerful high performance computing systems, with 1,064 nodes and 17,024 cores (16 cores per node), for under $3k/hour. For those of you not needing quite this much power at one time, $2.40 per 16-core node hour works out to $0.15/core hour, which is ½ of the previous Amazon Web Services HPC system cost.

Single node speeds and feeds:

· Processors: 8-core, 2 socket Intel Xeon @ 2.6 GHz with hyperthreading

· Memory: 60.5GB

· Storage: 3.37TB direct attached and Elastic Block Store for remote storage

· Networking: 10Gbps Ethernet with full bisection bandwidth within the placement group

· Virtualized: Hardware Assisted Virtualization

· API: cc2.8xlarge

Overall Top500 Result:

· 1064 nodes of cc2.8xlarge

· 240.09 TFlops at an excellent 67.8% efficiency

· $2.40/node hour on demand

· 10Gbps non-blocking Ethernet networking fabric
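
The figures in the two lists above can be cross-checked with a little arithmetic. The sketch below is only a sanity check, not part of the official Top500 submission: it assumes each node contributes 16 physical cores (two 8-core sockets, hyperthreads not counted) and that each core can retire 8 double-precision floating point operations per cycle, which is an assumption about the processor generation that the post does not state.

```python
# Values taken from the post.
NODES = 1064
SOCKETS_PER_NODE = 2
CORES_PER_SOCKET = 8
CLOCK_GHZ = 2.6
PRICE_PER_NODE_HOUR = 2.40   # USD, on demand
RMAX_TFLOPS = 240.09         # measured Linpack result

# Assumed, not stated in the post: double-precision flops per core per cycle.
FLOPS_PER_CYCLE = 8

cores_per_node = SOCKETS_PER_NODE * CORES_PER_SOCKET
total_cores = NODES * cores_per_node
cluster_cost_per_hour = NODES * PRICE_PER_NODE_HOUR
cost_per_core_hour = PRICE_PER_NODE_HOUR / cores_per_node

# Theoretical peak: cores x GHz x flops/cycle gives GFlops; divide for TFlops.
rpeak_tflops = total_cores * CLOCK_GHZ * FLOPS_PER_CYCLE / 1000.0
efficiency = RMAX_TFLOPS / rpeak_tflops

print(f"physical cores:        {total_cores}")
print(f"cluster cost per hour: ${cluster_cost_per_hour:,.2f}")
print(f"cost per core hour:    ${cost_per_core_hour:.2f}")
print(f"theoretical peak:      {rpeak_tflops:.1f} TFlops")
print(f"Linpack efficiency:    {efficiency:.1%}")
```

Under those assumptions the theoretical peak comes out at roughly 354 TFlops, and 240.09 TFlops measured against that peak reproduces the 67.8% efficiency quoted above. The same inputs give 17,024 physical cores, about $2,554 per hour for the whole cluster, and $0.15 per core hour.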

Database Intensive Computing:

This is a database machine masquerading as a supercomputer. You don’t have to use the floating point units to get full value from renting time on this cluster. It’s absolutely a screamer as an HPC system. But it also has the potential to be the world’s highest performing MapReduce system (Elastic MapReduce), with a full bisection bandwidth 10Gbps network directly to each node. Any database or general data intensive workload with high per-node computational costs and/or high inter-node traffic will run well on this new instance type.

If you are network bound, compute bound, or both, the EC2 cc2.8xlarge instance type could be the right answer. And, the amazing thing is that the cc2 instance type is ½ the cost per core of the cc1 instance.

Supercomputing is now available to anyone for $0.15/core hour. Go to http://aws.amazon.com/hpc-applications/ and give it a try. You no longer need to be a national lab or a government agency to be able to run one of the biggest supercomputers in the world.

–jrh

b: http://blog.mvdirona.com / http://perspectives.mvdirona.com

We’ve done a couple of Top500 runs just to show that clusters can credibly support high performance computing workloads and that even large workloads can be run efficiently. But, as fun as the benchmarks are, they really aren’t our focus. It’s real customers running real workloads that we find most exciting.

I’m guessing that we won’t do any new benchmark runs next year, but that is me speculating.

–jrh

Congratulations! At this rate, we expect you in the top 10 next year.

What about the "Graph 500" (http://www.graph500.org/) list? It’s supposed to be designed for data-intensive applications, and there was a little buzz about it around SC’11. It would be interesting to see how EC2 performs on this benchmark…

Cray 1 porn is enough. Thank you for the geeky numbers. Truly a milestone in computing history.

You got me on that one Fiism. I would love to show a picture. But I love working at AWS as well so I just can’t do it :-). I wish I could.

–jrh

This post is useless without pictures (of your top50 supercomputer). And no, you can’t distract us with 70s-brown Cray-1 porn :-).