This is an exciting day and one I’ve been looking forward to for more than a decade. As many of you know, the gestation time for a new innovation at AWS can be incredibly short. Some of our most important services went from good ideas to successful, heavily-used services in only months. But custom silicon is different. The time from idea to a packaged processor is necessarily longer than software-only solutions. And, while the famed Amazon two-pizza team can often deliver an excellent service in months, a custom processor takes far longer to get right and engineering costs will be tens of millions and could easily escalate into the hundreds of millions of dollars. These are big investments that require high volumes to be economically justified. Today I’m super happy to be able to talk about the AWS Graviton Processor that powers the Amazon EC2 A1 Instance family.

I’ve seen the potential for Arm-based server processors for more than a decade, but it takes time for all the right ingredients to come together. What initially excited me about the possibilities of an Arm server was the unique Arm ecosystem itself. In the early days of servers, a single company designed the processors, the system boards, the chassis, and all system software including the operating system. As the Intel x86 compatible processor began to be adopted for server workloads, the industry took a big departure from this model. Now one company (and, on the good days, two companies :-)) produces the processors, multiple competing server hardware designers build the servers, and operating systems are available from multiple suppliers. More participants and more competition drive innovation, and the server world began to move more quickly.

The Arm ecosystem is even more de-verticalized. Arm does the processor design, but they license the processor to companies that integrate the design in their silicon rather than actually producing the processor themselves. This enables a diverse set of silicon producers, including Amazon, to innovate and specialize chips for different purposes, while taking advantage of the extensive Arm software and tooling ecosystem. Most of the companies producing silicon under license from Arm are fabless semiconductor companies, which is to say they are in the semiconductor business but outsource the manufacturing of silicon chips, which takes place in massively expensive facilities, to specialized companies like Taiwan Semiconductor Manufacturing Company (TSMC) and GlobalFoundries.

Innovation and competition are present at every layer of the Arm ecosystem from design, through silicon foundry, packaging, board manufacturing, all the way through to finished hardware systems and the system software that runs on top. Having many competitors at every layer in the ecosystem drives both rapid innovation and excellent value. Because these processors are used incredibly widely in many very different applications, the volumes are simply staggering. There have been 90 billion Arm processors shipped by this vast ecosystem, and nothing reduces costs in the semiconductor world faster than part volume.

I like to think I can see much of the change that sweeps our industry from a fair distance off but, back when I joined AWS in 2009, I wouldn’t have predicted we would be designing server processors less than a decade later. Each year, engineering challenges that seemed unaddressable even a year back become not only within reach but also cost effective. It’s an exciting time to be an engineer, and our industry has never delivered customer value faster.

The AWS Graviton Processor powering the Amazon EC2 A1 Instances targets scale-out workloads such as web servers, caching fleets, and development workloads. These new instances feature up to 45% lower costs and will join the 170 different instance types supported by AWS, including the Intel-based z1d instances which deliver a sustained all core frequency of 4.0 GHz, a 12 TB memory instance, the F1 instance family with up to 8 Field Programmable Gate Arrays, P3 instances with NVIDIA Tesla V100 GPUs, and the new M5a and R5a instances with AMD EPYC Processors. No other cloud offering even comes close.

The new AWS-designed, Arm-based A1 instances are available in 5 different instance types from 1 core with 2 GiB of memory up to 16 cores with 32 GiB of memory.

I’ve been interested in Arm server processors for more than a decade, so it’s super exciting to see the AWS Graviton finally public. It’s going to be exciting to see what customers do with the new A1 instances, and I’m already looking forward to follow-on offerings as we continue to listen to customers and enhance the world’s broadest cloud computing instance selection. There is much more coming both in Arm-based instance offerings and, more broadly, across the entire Amazon EC2 family.

Excellent.

I wrote an article on ARM servers here (https://www.linkedin.com/pulse/arm-server-lessons-buy-side-retrospective-fang-qiu/) and quoted your comments on traditional ARM servers.

I even have an aggressive plan to design cloud servers using consumer silicon: mobile SoCs include ARM cores, GPUs, video accelerators and AI accelerators. The cost is lower than server silicon from a performance/dollar perspective, because the silicon cost is amortized over billions of smartphone users.

Take an example:

ARM side (Kirin 980): 4 Cortex-A76 cores (SPECint2006 ~26); assume a 10 W TDP and a $100 price. That is $25 and 2.5 W per core.

x86 side (Platinum 8160): 24 cores/48 threads, list price $4700, TDP 150 W. That is about $98 and 3.13 W per logical core.

The ARM chip is MUCH cheaper and much more power-efficient than x86….:) And ARM has a free GPU/AI accelerator on chip…
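The per-core arithmetic above can be checked with a short sketch. The $100 price and 10 W TDP for the Kirin 980 are the assumptions stated in the comment, not published figures, and the `Chip` class is a hypothetical helper introduced only for illustration. Note that the x86 figure divides by hardware threads while the ARM figure divides by physical cores, so this is not a strictly like-for-like comparison.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Chip:
    """Hypothetical helper: price and power figures for one processor."""
    name: str
    units: int        # divisor: physical cores or hardware threads
    price_usd: float  # assumed or list price
    tdp_watts: float  # assumed or rated TDP

    def per_unit(self):
        """Return (dollars per unit, watts per unit)."""
        return self.price_usd / self.units, self.tdp_watts / self.units


# Kirin 980: price and TDP are the commenter's assumptions.
kirin = Chip("Kirin 980, per core", units=4, price_usd=100.0, tdp_watts=10.0)
# Xeon Platinum 8160: list price and TDP, divided across 48 threads.
xeon = Chip("Platinum 8160, per thread", units=48, price_usd=4700.0, tdp_watts=150.0)

for chip in (kirin, xeon):
    dollars, watts = chip.per_unit()
    print(f"{chip.name}: ${dollars:.2f}, {watts:.3f} W")
```

Under these assumptions the Kirin 980 works out to $25.00 and 2.5 W per core, and the Platinum 8160 to roughly $97.92 and 3.125 W per hardware thread.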

As I understand it, ARM processors use less energy, which is good. However, aren’t ARM processors also (significantly?) slower compared to Intel server processors with their special opcode features such as SSE, SSE2, etc.? Are you aiming for lower energy consumption and willing to take the performance hit?

What about heat production? The processors in smartphones and other portable tech get really hot while doing nearly no work at all. Do these new processors also run cooler, with the heating issues fixed?

ARM processors have long been leaders in low power operation since one of their most important market segments is mobile computing. Lower power does reduce cost and is part of why these processors produce a better price/performance solution for some workloads. Single thread performance is generally less than competing x86 processors today so, for non-parallel, single-threaded, CPU-bound operations, they are less likely to win. But don’t think of ARM servers as weak and slow. The Department of Energy took delivery earlier this year of Astra, an ARM-based supercomputer. The workloads they are targeting are memory intensive (very common) and the Cavium ThunderX2 processor (an ARM server processor) has excellent memory interconnect performance, so it ends up delivering supercomputer performance.

The heat problem you describe on some mobile processors is actually the processor being super busy. ARM processors are very power efficient and don’t really have the “heat problem” that you mention. If you feel heat, the system is consuming a large amount of power, which means it is very busy. If you have nothing material running, the likely cause is the browser. Bad guys frequently do things like Bitcoin mining on stolen resources. If someone finds a security weakness in your system, they will use its resources for their own purposes; common jobs are Bitcoin mining and DDoS attacks. If your system gets hot, reboot it and it’ll likely recover.

ARMs are an excellent choice for many server workloads and are used for everything from supercomputer applications, generalized, highly parallel server workloads, just about every mobile device, and embedded IoT processors. There is no more broadly deployed CPU architecture in the world.

When can we expect A1 Elasticsearch instances, A1 RDS instances and so on?

When a new instance type ends up being a good match for a given service and will give at least some customers lower latency, lower cost or material improvements in some dimension, the service teams will support it. There is a lot happening at once in all service teams so it usually doesn’t happen right away.

Thank you for the info.

Do the Nitro smart NIC chips also include ARM cores?

Are Graviton chips different silicon than the Nitro smart NIC chips or same silicon with the cores used in different ways?

Sorry Andy, that level of detail on Nitro isn’t currently externally available.

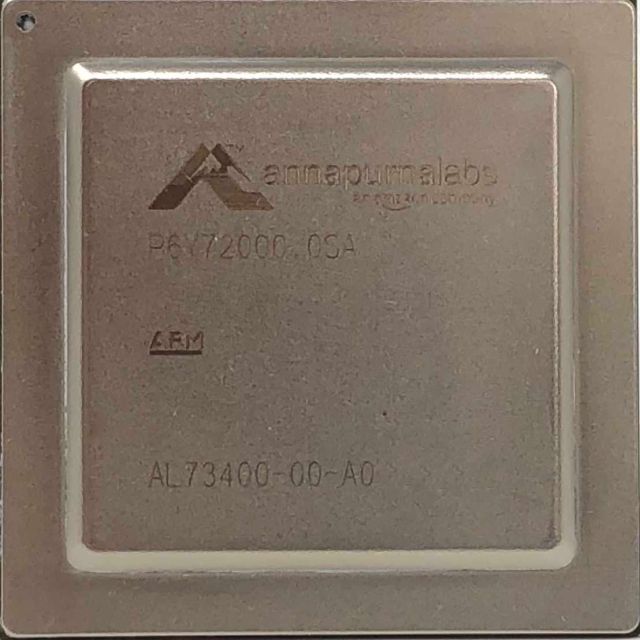

How many Cosmos Cortex-A72 cores are present in each Graviton processor?

The current generation Graviton supports 16 cores.

I’m holding out for RISC-V on AWS

I’m also a huge fan of the RISC-V effort. I love that it can be used freely by researchers, builders, educators, and even commercial designers without restriction. Open systems are good for the industry and the RISC-V program is a huge step forward in making a credible processor design available externally.

What Arm brings to the table is massive volume with over 90 billion cores delivered. It is a commercially licensed rather than open sourced design but because they are amortizing their design costs over a very broad usage base, the cost per core is remarkably low. Still not free but surprisingly good value. Because Arm cores are used in such massive volume, there is a well developed software ecosystem, the development tools are quite good, and the Arm licensing model allows Arm cores to be part of special purpose ASICs. Arm cores can be inexpensive enough to be used in very low cost IoT devices. They perform well enough that they can be used in specialized, often very expensive embedded devices. They are excellent power/performers and are used in just about every mobile device. And, they deliver an excellent price/performing and power/performing solution for server-side processing. It’s an amazingly broadly used architecture that continues to evolve quickly.

Arm cores still do require licensing so they don’t have all of the characteristics you like in the RISC-V design but they recently made their Arm Cortex-M33 processor available on the Amazon EC2 F1 instance type. The F1 instance type includes up to 8 large FPGAs and is used by customers to deliver hardware specialized workloads. The F1 instance type supports low-latency financial modeling, genomics research, database hardware acceleration and many other commercial applications. And, of course, the F1 instance type is also heavily used in education in semiconductor design courses. Now that Arm has made the M33 design available in the F1 instance type, experimenting with Arm based designs is pretty easy and fairly inexpensive.

More on the Arm M33: https://developer.arm.com/products/processors/cortex-m/cortex-m33

More on the AWS F1 instance type: https://aws.amazon.com/ec2/instance-types/f1/

More on the Arm M33 on AWS F1: https://community.arm.com/arm-research/b/articles/posts/latest-arm-cortex-m-processor-now-available-on-the-cloud

I’m a big fan of the RISC-V design but there is lots to like about the Arm architecture and I’m pretty excited about Graviton and where we are going with it.

Does it support memory tagging?

https://community.arm.com/processors/b/blog/posts/arm-a-profile-architecture-2018-developments-armv85a

Yes, Graviton does support memory tagging. It’s fully compliant with the ARMv8.0 AArch64 architecture, which allows the top 8 bits of a virtual memory address to be used as a tag.

James, I think you are referring to the top-byte-ignore feature in the base ARMv8 architecture and not the Memory Tagging extension introduced in ARMv8.5.

Which ARMv8 extensions does the CPU in Graviton support?

You are right, the “memory tagging” I’m referring to is the ARMv8.0 top-byte-ignore feature rather than the later-released ARMv8.5 feature they actually did call memory tagging. ARMv8.5 only came out in the second half of last year. Graviton is an ARMv8.0 compliant implementation.
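To make the distinction concrete, here is a minimal sketch of what top-byte-ignore permits: software stores an 8-bit tag in bits 63:56 of a 64-bit virtual address, and the hardware disregards those bits during address translation. The sketch only models the bit manipulation in Python; the function names are hypothetical, the example address is made up, and the masking in `strip_tag` stands in for what the hardware does when the feature is enabled. It does not model the ARMv8.5 Memory Tagging extension, which additionally checks tags against memory.

```python
TAG_SHIFT = 56                       # tag occupies bits 63:56
ADDR_MASK = (1 << TAG_SHIFT) - 1     # low 56 bits carry the address


def set_tag(addr: int, tag: int) -> int:
    """Place an 8-bit tag in the top byte of a 64-bit pointer value."""
    if not 0 <= tag <= 0xFF:
        raise ValueError("tag must fit in 8 bits")
    return (addr & ADDR_MASK) | (tag << TAG_SHIFT)


def get_tag(ptr: int) -> int:
    """Read back the tag stored in the top byte."""
    return (ptr >> TAG_SHIFT) & 0xFF


def strip_tag(ptr: int) -> int:
    """Model the address the hardware would translate: top byte ignored."""
    return ptr & ADDR_MASK


addr = 0x0000_7FFF_DEAD_BEEF         # made-up user-space address
ptr = set_tag(addr, 0xA5)
print(hex(ptr), hex(get_tag(ptr)), hex(strip_tag(ptr)))
```

With top-byte-ignore, a tagged pointer like `ptr` can be dereferenced directly, which is why runtimes can use the top byte for metadata without masking on every access.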

With all due respect, Amazon and the ARM ecosystem could have gotten a lot further and faster had you supported (with more patience and higher intensity) any of the previous ARM server incarnations that came to market. Without knowing much of the technical details, Graviton sounds like a marginal upgrade over several of the smartNIC products out there including perhaps the logical follow-on from Annapurna’s NIC.

Fine product/choice, and probably in the right direction for Amazon’s vertical integration ambitions, but doesn’t sound like the same class of ARM processors which you were chastising for not progressing fast enough.

I understand your thinking that we could have offered one of the several Arm-based server chips available in market in past years. I thought pretty much the same myself and, over the years, we have modeled the fully built server costs of every ARM server processor offered in the market. We have run cost models on them all and it’s harder than you might think to produce an interesting price/performance improvement over current server designs. A much less expensive processor still has to be able to produce respectable single threaded performance in order to be interesting to customers and, depending upon the overall server design and mix of memory and processor, the processor itself might be as little as 25% of the overall cost of the server. Achieving a meaningfully better price/performing fully configured server requires that the processor be MUCH faster or MUCH cheaper or some combination of the two.
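A rough sketch of why this is hard, assuming a deliberately simplified model in which only the processor price changes and every other server cost (memory, storage, board, chassis) stays fixed. The function name and the example ratios are hypothetical illustrations, not AWS cost data; only the 25% processor share comes from the text above.

```python
def relative_cost_per_perf(cpu_share: float,
                           cpu_price_ratio: float,
                           perf_ratio: float) -> float:
    """Cost per unit of performance relative to the baseline server (1.0).

    cpu_share:       fraction of baseline server cost spent on the processor
    cpu_price_ratio: new processor price / baseline processor price
    perf_ratio:      new server performance / baseline server performance
    """
    if perf_ratio <= 0:
        raise ValueError("perf_ratio must be positive")
    # Everything except the processor is assumed to cost the same.
    server_cost = (1.0 - cpu_share) + cpu_share * cpu_price_ratio
    return server_cost / perf_ratio


# Half-price processor, equal performance: only a 12.5% improvement.
print(relative_cost_per_perf(0.25, 0.5, 1.0))
# Free processor, equal performance: still 75% of the baseline cost.
print(relative_cost_per_perf(0.25, 0.0, 1.0))
# Half-price processor at 80% of the performance: worse than baseline.
print(relative_cost_per_perf(0.25, 0.5, 0.8))
```

Under this model, with the processor at 25% of server cost, even a free processor caps the saving at 25%, and a half-price part that gives up 20% of the performance ends up costing more per unit of work than the baseline.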

I’m delighted to see this and far from being unpredictable it’s about time! Could you please say a little more about the impact of the design on future datacentre developments? What timescale has this silicon been developed in? Nvidia say a bit about the development of their chips that is good for young EEs to know. I’m a female former VLSI design Eng in the UK who tries to tell AWS guys here that silicon matters even if I see the move to Serverless & the msg servers don’t matter. Will you make energy savings you wish to disclose?

Are there specific use cases or sectors that you expect to target more with this? So many cloud talks are about applications in web devt or database mgmt but will this advance edge or enable greater shift to cloud by those whom I don’t see at user group meetings?

Glad you like Graviton. The A1 instance type will do best on highly scalable, loosely coupled workloads. We targeted price/performance but, you are right, it’s a good power/performance part as well.

Does the AWS Graviton Processor match x86 and V100 performance well enough to support high-performance computing workloads as well? Will it co-exist with them or eventually replace them?

The A1 instance type will do best on highly scalable, loosely coupled workloads. For the HPC applications hosted on x86/V100 you were asking about, there will be some where many independent Arm cores are better price/performing and power/performing solutions but it certainly won’t replace them all or even the majority. Looking forward, we should expect to see more and more hardware specialization so the days of a new hardware system completely subsuming all workloads are past.

When will these be available for testing? (all EC2 instances listed are still x86)

The ARM-based EC2 instances are available today. See: https://aws.amazon.com/ec2/instance-types/ and look for “A1”.

/me goes to launch a new EC2 instance… finds that they are all still x86, and the architecture filters still list only x86-32 and x86-64.

Which data center has these processors? Or are they documented but not yet released?

EC2 A1 Instance types (https://aws.amazon.com/ec2/instance-types/a1/) are currently available and ready to start in Northern Virginia, Ohio, and Oregon regions.

This is inaccurate.

“Arm does the processor design, but they license the processor to companies that integrate the design in their silicon rather than actually producing the processor themselves.”

Some companies take the Arm design and enhance it while other companies buy an architectural license and do their own design.

Raspberry Pi as a Service?

Faster than a Pi with more memory than a Raspberry Pi but, yes, it’ll run much of the same software.