Archive For The “Hardware” Category

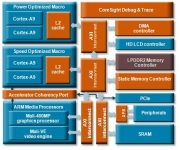

ARM just announced a couple of 2-core SMP designs based upon the Cortex-A9 application processor, one optimized for performance and the other for power consumption (http://www.arm.com/news/25922.html). Although the optimization points are different, both are incredibly low power consumers by server standards, with the performance-optimized part dissipating only 1.9W at 2GHz based upon the TSMC 40G…

In The Case for Low-Cost, Low-Power Servers, I made the argument that the right measures of server efficiency were work done per dollar and work done per joule. Purchasing servers on single-dimensional metrics like performance or power, or even cost alone, makes no sense at all. Single-dimensional purchasing leads to micro-optimizations that push…
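To make the two ratios concrete, here is a minimal sketch that scores two servers on work per dollar and work per joule. The `Server` type, the throughput, price, and power figures, and the three-year service life are all hypothetical values chosen for illustration, and "dollars" here means purchase price only; a fuller model would also fold in power and infrastructure costs.

```python
from dataclasses import dataclass


# Hypothetical server profiles for illustration only; none of these
# figures come from the original post or from measurements.
@dataclass
class Server:
    name: str
    throughput_rps: float  # sustained useful work, requests per second (assumed)
    price_usd: float       # purchase price in US dollars (assumed)
    power_watts: float     # average power draw at the wall, in watts (assumed)


def work_per_dollar(server: Server, service_life_s: float) -> float:
    """Requests completed over the service life per dollar of purchase price."""
    return server.throughput_rps * service_life_s / server.price_usd


def work_per_joule(server: Server) -> float:
    """Requests per joule; 1 W is 1 J/s, so (requests/s) / (J/s) = requests/J."""
    return server.throughput_rps / server.power_watts


THREE_YEARS_S = 3 * 365 * 24 * 3600  # assumed service life, in seconds

big = Server("big", throughput_rps=10_000, price_usd=8_000, power_watts=400)
small = Server("small", throughput_rps=3_000, price_usd=1_500, power_watts=90)

for s in (big, small):
    print(f"{s.name}: {work_per_dollar(s, THREE_YEARS_S):,.0f} req/$, "
          f"{work_per_joule(s):.1f} req/J")
```

With these made-up numbers, buying on raw performance alone would pick `big`, yet `small` delivers more work per dollar (about 189 million versus 118 million requests) and more work per joule (33.3 versus 25.0), which is the kind of outcome a single-dimensional metric hides.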

The server tax is what I call the mark-up applied to servers, enterprise storage, and high-scale networking gear. Client equipment is sold in much higher volumes with more competition and, as a consequence, is priced far more competitively. Server gear, even when using many of the same components as client systems, comes at a…

Microsoft announced yesterday that it was planning to bring both Chicago and Dublin online next month. Chicago is initially to be a 30MW critical-load facility with a plan to build out to a booming 60MW. Two thirds of the facility is high-scale and containerized. It’s great to see the world’s second modular data…

I presented the keynote at the International Symposium on Computer Architecture 2009 yesterday. Kathy Yelick kicked off the conference with the other keynote on Monday: Ten Ways to Waste a Parallel Computer. Thanks to ISCA Program Chair Luiz Barroso for the invitation and for organizing an amazingly successful conference. I’m just sorry I had to leave…

Title: Ten Ways to Waste a Parallel Computer
Speaker: Katherine Yelick

An excellent keynote talk at ISCA 2009 in Austin this morning. My rough notes follow:

· Moore’s law continues
  o Frequency growth replaced by core count growth
· HPC has been working on this for more than a decade but HPC concerned as well…

I like Power Usage Effectiveness as a coarse measure of data center infrastructure efficiency. It gives us a way of speaking about the efficiency of the data center power distribution and mechanical equipment without having to qualify the discussion on the basis of the servers and storage used, their utilization levels, or other issues not directly…
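PUE is conventionally defined as total facility power divided by the power delivered to the IT equipment. The sketch below computes it along with the share of facility power lost to distribution and cooling; the 17 MW / 10 MW facility is a hypothetical example, not a figure from the post.

```python
def pue(total_facility_kw: float, it_equipment_kw: float) -> float:
    """Power Usage Effectiveness: total facility power / IT equipment power.

    1.0 is the ideal: every watt drawn reaches servers, storage, and network
    gear. Anything above 1.0 is spent in power distribution and cooling.
    """
    if it_equipment_kw <= 0:
        raise ValueError("IT equipment power must be positive")
    if total_facility_kw < it_equipment_kw:
        raise ValueError("total facility power cannot be less than IT power")
    return total_facility_kw / it_equipment_kw


def overhead_share(pue_value: float) -> float:
    """Fraction of total facility power that never reaches the IT equipment."""
    return (pue_value - 1.0) / pue_value


# Hypothetical facility: 10 MW of IT load, 17 MW measured at the utility feed.
p = pue(17_000, 10_000)
print(f"PUE = {p:.2f}, overhead = {overhead_share(p):.0%} of facility power")
```

Note what the ratio leaves out: nothing in it depends on which servers sit behind that 10 MW or how busy they are. That is what lets PUE isolate the infrastructure, and also why it is only a coarse measure.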

Two years ago I met with the leaders of the newly formed Dell Data Center Solutions team and they explained they were going to invest deeply in R&D to meet the needs of very high-scale data centers. Essentially Dell was going to invest in R&D for a fairly narrow market segment. “Yeah, right”…

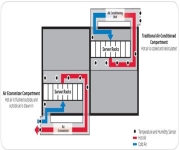

High data center temperatures are the next frontier for server competition (see pages 16 through 22 of my Data Center Efficiency Best Practices talk: http://mvdirona.com/jrh/TalksAndPapers/JamesHamilton_Google2009.pdf, and the posting 32C (90F) in the Data Center). At higher temperatures the difference between good and sloppy mechanical designs is much more pronounced and needs to be a purchasing criterion. The…

Earlier this week I got a thought-provoking comment from Rick Cockrell in response to the posting 32C (90F) in the Data Center. I found the points raised interesting and worthy of more general discussion, so I pulled the thread out of the comments into a separate blog entry. Rick posted: Guys, to be honest…

This IEEE Spectrum article was published in February, but I’ve been busy and haven’t had a chance to blog it. The author, Randy Katz, is a UC Berkeley researcher and member of the Reliable Adaptive Distributed Systems Lab (RAD Lab). Katz was a coauthor on the recently published RAD Lab article on Cloud Computing: Berkeley Above the…

In Where SSDs Don’t Make Sense in Server Applications, we looked at the results of an HDD-to-SSD comparison test done by the Microsoft Research Cambridge team. Vijay Rao of AMD recently sent me a pointer to an excellent comparison test done by AnandTech. In SSD versus Enterprise SAS and SATA disks, AnandTech compares…

All new technologies go through an early phase in which everyone is completely convinced the technology can’t work. Those that actually do solve interesting problems get adopted for some workloads and head into the next phase. In the next phase, people see the technology actually works well for some workloads and they…

In the talk I gave at the Efficient Data Center Summit, I noted that the hottest temperature on earth in recorded history was 136F (58C), indicated at Al Aziziyah, Libya, in 1922 (see Data Center Efficiency Summit (Posting #4)). What’s important about this observation from a data center perspective is that this most extreme…
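The temperature figures in these excerpts pair Fahrenheit and Celsius values that are rounded to the nearest degree. A small sketch using the standard conversion formulas confirms that 136F is about 57.8C (quoted as 58C) and 32C is 89.6F (quoted as 90F); the function names are just illustrative.

```python
def f_to_c(deg_f: float) -> float:
    """Convert degrees Fahrenheit to degrees Celsius."""
    return (deg_f - 32.0) * 5.0 / 9.0


def c_to_f(deg_c: float) -> float:
    """Convert degrees Celsius to degrees Fahrenheit."""
    return deg_c * 9.0 / 5.0 + 32.0


# Figures quoted in the excerpts: the 1922 Al Aziziyah reading and the
# 32C (90F) data center temperature.
print(f"136F = {f_to_c(136):.1f}C")
print(f"32C = {c_to_f(32):.1f}F")
```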

In Rackable Turns up the Heat, published yesterday on Data Center Knowledge, we see the beginnings of the next class of server innovations. This one is going to be important and have lasting impact. The industry will save millions of dollars and megawatts of power, even before counting the possible capital expense reductions. Hats off to Rackable Systems to…

This is the third posting in the series on heterogeneous computing. The first two were (1) Heterogeneous Computing using GPGPUs and FPGAs and (2) Heterogeneous Computing using GPGPUs: NVidia GT200. This post looks more deeply at the AMD/ATI RV770, the latest GPU architecture from AMD/ATI. The processor contains 10 SIMD cores, each with 16…