I recently came across a nice data center cooling design by Alan Beresford of EcoCooling Ltd. In this approach, EcoCooling replaces the CRAC units with a combined air mover, damper assembly, and evaporative cooler. I’ve been interested in evaporative coolers and their application to data center cooling for years, and they are becoming more common in modern data center deployments (e.g. Data Center Efficiency Summit).

An evaporative cooler is a simple device that cools air by taking water through a state change from liquid to vapor. They are incredibly cheap to run and particularly efficient in locales with lower humidity. Evaporative coolers can allow power-intensive process-based cooling to be shut off for large parts of the year and, when combined with favorable climates or increased data center temperatures, can entirely replace air conditioning systems. See Chillerless Datacenter at 95F; for a deeper discussion, see Costs of Higher Temperature Data Centers; and for a discussion of server design impacts, see Next Point of Server Differentiation: Efficiency at Very High Temperature.

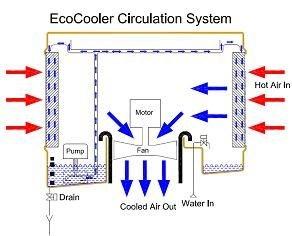

In the EcoCooling solution, air from the hot aisle is released outside the building. Air from outside the building is passed through an evaporative cooler and then delivered to the cold aisle. On days when it is too cold outside for direct delivery to the data center, outside air is mixed with exhaust air to achieve the desired inlet temperature.
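The three operating regimes described above can be sketched as a simple mode selector plus a mixing calculation. This is a minimal illustration, not EcoCooling’s control logic: the function names, the 20 °C minimum inlet temperature, the 24 °C point above which the evaporative stage runs, and the 35 °C exhaust temperature are all assumptions. The mixing step also assumes ideal mixing of two dry-air streams with equal specific heat and ignores humidity.

```python
def return_air_fraction(t_outside_c: float, t_return_c: float, t_target_c: float) -> float:
    """Fraction of hot-aisle exhaust to blend with outside air.

    Solves f * t_return + (1 - f) * t_outside = t_target for f, assuming
    ideal mixing of two dry-air streams with equal specific heat.
    """
    if t_outside_c >= t_target_c:
        return 0.0  # outside air is already warm enough: no recirculation
    if t_return_c <= t_target_c:
        return 1.0  # exhaust alone cannot reach the target: recirculate fully
    return (t_target_c - t_outside_c) / (t_return_c - t_outside_c)


def select_mode(t_outside_c: float, t_target_c: float = 20.0, t_evap_above_c: float = 24.0) -> str:
    """Pick an operating regime; both setpoints are illustrative assumptions."""
    if t_outside_c < t_target_c:
        return "mix"          # too cold: temper outside air with exhaust air
    if t_outside_c <= t_evap_above_c:
        return "direct"       # outside air can go straight to the cold aisle
    return "evaporative"      # too warm: pass outside air through the cooler


if __name__ == "__main__":
    for t_out in (5.0, 22.0, 30.0):
        mode = select_mode(t_out)
        f = return_air_fraction(t_out, t_return_c=35.0, t_target_c=20.0) if mode == "mix" else 0.0
        print(f"{t_out:5.1f} C outside -> {mode:11s} return-air fraction {f:.2f}")
```

On a 5 °C day with 35 °C exhaust, the sketch blends equal parts exhaust and outside air: (20 - 5) / (35 - 5) = 0.5.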

This is a nice clean approach to substantially reducing air conditioning hours. For more information see: Energy Efficient Data Center Cooling or the EcoCooling web site: http://www.ecocooling.co.uk/.

–jrh

James Hamilton

b: http://blog.mvdirona.com / http://perspectives.mvdirona.com

Hello, Mr. James Hamilton.

The physical distance between AZs was described in the re:Invent 2020 Infrastructure Keynote with Peter DeSantis. Separating AZs by many kilometers can avoid the impact of tornadoes and earthquakes. However, the impact of natural disasters such as earthquakes and tornadoes is difficult to evaluate, and it varies with severity: a large earthquake can affect an area more than 100 km across. In some regions it is difficult to avoid earthquake zones entirely. At the same time, to keep latency within requirements, the two AZs cannot be too far apart. These two requirements are in tension. What do you think about this?

There is tension between these design objectives. Greater distance brings a reduced risk of correlated failures but also a linear increase in latency. Latency grows linearly with distance while risk falls off exponentially, so even quite small distances show fairly low risk. Choosing inter-building distances well beyond the knee of this curve produces a very available result: considerable safety margin can be designed in at very small cost in added latency.
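A small numerical sketch of the linear-versus-exponential argument. The latency side uses a real physical constant: light in optical fibre travels at roughly c / 1.47, about 204 km per millisecond. The risk side is entirely hypothetical: an exponential decay with a made-up 10 km scale, chosen only to show the shape of the curve and its knee, not a measured earthquake or tornado model.

```python
import math

# Light in optical fibre travels at roughly c / 1.47 (refractive index of silica),
# about 204 km per millisecond, i.e. ~4.9 microseconds per km one way.
FIBRE_KM_PER_MS = 299_792.458 / 1.47 / 1000.0


def round_trip_ms(distance_km: float, route_factor: float = 1.0) -> float:
    """Propagation-only round-trip time; route_factor > 1 models fibre paths
    longer than the straight-line distance (an assumption)."""
    return 2.0 * distance_km * route_factor / FIBRE_KM_PER_MS


def correlated_failure_risk(distance_km: float, p0: float = 1.0, decay_km: float = 10.0) -> float:
    """HYPOTHETICAL model: relative risk that one event affects both sites
    falls off exponentially with separation. p0 and decay_km are made up."""
    return p0 * math.exp(-distance_km / decay_km)


if __name__ == "__main__":
    for d in (1, 5, 10, 20, 40, 80):
        print(f"{d:3d} km  RTT {round_trip_ms(d):.3f} ms  "
              f"relative risk {correlated_failure_risk(d):.4f}")
```

Under these made-up parameters, moving from 10 km to 40 km cuts relative risk from about 0.37 to about 0.018 while adding only about 0.3 ms of round-trip propagation delay.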

The patent “Thermal Management for Electrically Powered Devices” was awarded in December 2021. The art teaches thermal management for Amazon assets such as data centers, and for vertical farms such as Plenty ($1B; Softbank, Walmart). May I send an executive summary to you and/or your staff?

This is not an offer for sale, implied or otherwise; it is for purposes of discovering current and future challenges that it could address.

Sincerely, Willett Tuitele

For a variety of reasons, I can’t read the patent but feel free to send me a note (james@amazon.com) describing what you have done, how it works, if it’s in production or test, and how you intend to sell it.

I am looking to cool down my small server room. I live in a dry climate except during monsoon season. Would evaporative cooling damage the electronics because of the increased humidity in the room? Any advice? I would like to go with evaporative cooling.

Evaporative cooling works well and is cost-effective in many operating environments. You do need control systems to keep relative humidity (RH) in the cold aisle below 80%, and preferably below 70%.
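A minimal sketch of the kind of humidity interlock described above, assuming a single cold-aisle RH sensor and a simple on/off evaporative stage. The 70% and 80% limits come from the answer; the class name, the 5-point hysteresis band, and the alarm behavior are assumptions for illustration. A production control system would more likely modulate fans, dampers, and water flow than switch a stage on and off.

```python
from dataclasses import dataclass


@dataclass
class EvapHumidityGuard:
    """Hypothetical on/off interlock for an evaporative cooling stage."""
    preferred_max_rh: float = 70.0   # preferred cold-aisle ceiling (% RH)
    hard_max_rh: float = 80.0        # never-exceed limit (% RH)
    hysteresis: float = 5.0          # assumed band to prevent rapid cycling
    evaporative_on: bool = False

    def update(self, cold_aisle_rh: float, cooling_needed: bool) -> bool:
        """Return whether the evaporative stage should run this control cycle."""
        if not cooling_needed or cold_aisle_rh >= self.preferred_max_rh:
            self.evaporative_on = False
        elif cold_aisle_rh < self.preferred_max_rh - self.hysteresis:
            self.evaporative_on = True
        # Otherwise RH is inside the hysteresis band: keep the previous state.
        return self.evaporative_on

    def alarm(self, cold_aisle_rh: float) -> bool:
        """Flag readings at or above the hard limit for operator attention."""
        return cold_aisle_rh >= self.hard_max_rh


if __name__ == "__main__":
    guard = EvapHumidityGuard()
    for rh in (60.0, 68.0, 71.0, 67.0, 64.0):
        print(f"RH {rh:.0f}% -> evaporative on: {guard.update(rh, cooling_needed=True)}")
```

The hysteresis band means a reading of 67% RH leaves the stage in whatever state it was already in, so the pads are not repeatedly wetted and dried around the 70% limit.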

I find this amazing. My father was a heating and ventilation engineer 60 years ago, and he was working on “evaporative cooling” 70 years ago. They were called cooling towers and they were bloody wasteful of energy. It should be mandatory that any cooling system transfer its heat to somewhere that needs heating. I do believe a heat exchanger is something that may help, lol.

It’s true that evaporative cooling has been around for a very long time but the combination of air-side economization and evaporative cooling has a far shorter history in data centers.

Hello, James. There are two questions I’d like to talk to you about:

1. Do you think the TDP of x86 CPUs will keep increasing? Can the TDP of a single CPU stay within a certain range, as clock frequency has, rather than increasing indefinitely? If TDP does continue to increase, what is the upper limit for CPU TDP?

2. Rapidly increasing CPU TDP creates hot spots in servers. The usual solutions are cold plate liquid cooling or immersion liquid cooling, and I do not think either is ideal. Cold plate liquid cooling complicates the mechanical design of data centers, since the data center must support both air-cooled and liquid-cooled designs. Immersion cooling soaks every server component in liquid just to solve the CPU hot spot problem, which places high demands on the production and processing of all components. What do you think of these liquid cooling technologies? Which approach do you think is better?

I think CPU power will keep increasing but at a slowing pace. There is much we can do to add features to a CPU without adding power (e.g. put the features in separate power domains and keep them powered off when not in use). But many improvements do increase CPU power. If we know how to cool the part cost-effectively and there is value in the increased power, we’ll accept it. In high-volume servers, we are nowhere close to the maximum power dissipation we can cool. For example, in AWS EC2, the Z1 instance type has been clocked higher to deliver better performance even though this has a negative impact on power and, consequently, cooling.

Most processors in hyperscaler deployments still use passive heat sink cooling, so there is a very long way to go before cooling gets difficult. For a small increase in cost, we could move to active pumped liquid systems and handle more than an additional 100 W/socket. TDP can go up quite a bit before it gets either difficult or expensive to manage.

The limiting factor will be economics and the declining value of increasing TDP at any given point in time. For low-volume, high-performance applications we’ll push TDP hard and be willing to spend more to cool them. For the high-volume fleet we’ll focus on performance/cost and favor lower-TDP solutions. Across these different use cases, TDPs have gone up steadily but slowly over time, and we expect them to continue going up steadily but more slowly.

I expect we’ll eventually see immersion cooling used broadly (it is used today in some niche HPC applications), but it’ll be quite a while yet before it is required on high-volume servers at hyperscalers. We are nowhere close to the limits of current mechanical cooling systems (they are more than 10 years away).

Thank you for your reply!

1. A pumped liquid cooling system adds an extra heat-exchange step between the CPU and the air. In theory, this lowers heat-transfer efficiency. Will this cause the cooling system to require more air supply and significantly increase the data center’s PUE? Can a pumped liquid cooling system achieve 400 W/socket?

2. Is it possible for servers to return to single-socket designs? If the total power and cooling capacity of a data center room stays unchanged, a single server node would then have enough space and airflow channels to strengthen a pumped liquid cooling system and extend the life cycle of air-cooled mechanical designs in the data center.

Current liquid cooling technology and its industry supply chain are immature, and cold plate liquid cooling does not solve all problems, which adds great complexity to the long-term infrastructure design of data centers.

On your first question, 400 W/socket can be handled by passive heat sinks. Active pumped liquid can go far higher.
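A steady-state heat balance helps frame the air-supply part of the first question. Whether CPU heat reaches the air through a passive heat sink or through a pumped loop and radiator, the air still has to carry the same watts away, so the required airflow depends on the heat load and the allowed air temperature rise (Q = ρ · V̇ · c_p · ΔT), not on the number of heat-exchange steps in between. The sketch below assumes sea-level air density and an illustrative 15 K air temperature rise; fan and pump power are not modeled.

```python
AIR_DENSITY_KG_M3 = 1.2      # dry air near sea level, ~20 C (assumed)
AIR_CP_J_KG_K = 1005.0       # specific heat of dry air at constant pressure
CFM_PER_M3_S = 2118.88       # 1 m^3/s = 35.3147 ft^3/s * 60 s/min


def required_airflow_m3_s(heat_w: float, delta_t_k: float) -> float:
    """Airflow that removes heat_w when the air warms by delta_t_k.

    Steady-state sensible heat balance: Q = rho * V_dot * c_p * delta_T.
    """
    if delta_t_k <= 0:
        raise ValueError("air temperature rise must be positive")
    return heat_w / (AIR_DENSITY_KG_M3 * AIR_CP_J_KG_K * delta_t_k)


if __name__ == "__main__":
    for watts in (200, 400, 600):
        flow = required_airflow_m3_s(watts, delta_t_k=15.0)
        print(f"{watts} W at 15 K rise: {flow:.4f} m^3/s ({flow * CFM_PER_M3_S:.1f} CFM)")
```

Under these assumptions a 400 W socket needs roughly 0.022 m³/s (about 47 CFM) of air; allowing a larger temperature rise reduces the airflow proportionally.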

Could servers return to single-socket designs? Sure. As core counts continue to increase, many predict that single-socket servers will become the norm, with high socket counts used only in the rare cases where very high core counts are needed. Even today, most ARM designs don’t support single-system-image multi-socket servers; most ARM servers are single socket.

I personally like the economics and flexibility of multi-socket designs. These allow very high core counts for workloads that need them but also can be divided up into many individual servers. It’s easy and efficient to allocate memory to the workloads that need it or are paying for it and it’s easier to support memory oversubscription without as many negative performance impacts. Big multi-socket servers have many advantages but there are downsides including more complexity, more inter-workload interference, and higher blast radius on server or hypervisor failure.

Hi James,

In contrast to single-socket designs, Graviton3 puts three sockets into a single server, which seems like a different approach. I am curious about the driving force behind that design. Will x86 servers adopt that design too?

The driving force behind the design is sharing infrastructure and lowering costs. The gains are material but not game changing. Yes, certainly, the same approach could be used when designing an x86 server.

Great article, Alan. I think innovations like the ones you outline here are fantastic improvements on the technologies currently used in the industry. I would like to pose a question, though: for the large parts of the year (at cooler ambient temperatures) that you mentioned, there is a considerable temperature difference between the IT equipment and the ambient air. Wouldn’t it be interesting if there were a technology that exploited that difference to generate power rather than consume it (air handling and fans)?

Yes Dave, that is the data center equivalent of the “holy grail.” Rather than paying to remove heat, use the energy productively, either to generate power or to do something else useful. This is how PUEs of less than 1.0 are possible. Ideas with merit range from running heat pumps and attempting to harness the energy directly, to delivering the low-grade heat for economic gain in district heating systems or in support of agriculture. District heating has been deployed in production and is reported to be working well, so progress is being made.
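PUE itself is total facility energy divided by IT energy, so by definition it bottoms out at 1.0. Below-1.0 figures for heat reuse correspond to metrics such as The Green Grid’s Energy Reuse Effectiveness (ERE), which subtracts energy reused outside the data center from the numerator. The sketch below computes both for a purely hypothetical site; the numbers are illustrative, not measurements from any facility mentioned here.

```python
def pue(total_facility_kw: float, it_kw: float) -> float:
    """Power Usage Effectiveness: total facility power / IT power (>= 1.0)."""
    return total_facility_kw / it_kw


def ere(total_facility_kw: float, it_kw: float, reused_kw: float) -> float:
    """Energy Reuse Effectiveness: (total facility - energy reused elsewhere) / IT."""
    return (total_facility_kw - reused_kw) / it_kw


if __name__ == "__main__":
    # Hypothetical site: 1,000 kW IT load, 200 kW of cooling and power overhead,
    # 400 kW of waste heat exported to a district heating network.
    total_kw, it_kw, reused_kw = 1200.0, 1000.0, 400.0
    print(f"PUE = {pue(total_kw, it_kw):.2f}")            # PUE = 1.20
    print(f"ERE = {ere(total_kw, it_kw, reused_kw):.2f}")  # ERE = 0.80
```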

Thanks for passing on your experience Alan. You are pushing 2 boundaries where I see big gains possible: 1) required filtration for air-side economization, and 2) the climates where 100% air-side economization with evaporation cooling can be applied.

–jrh

jrh@mvdirona.com

I am finding that we can use evaporative cooling 100% of the time in Northern Europe. It is all about the wet bulb temperature of the local air. A good source of free weather data is www.wunderground.com.
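To make “it is all about the wet bulb temperature” concrete, the sketch below estimates wet bulb temperature from dry bulb temperature and relative humidity using Stull’s (2011) empirical approximation (valid roughly for -20 to 50 °C and 5 to 99% RH at sea-level pressure), then applies the standard direct evaporative cooler relation T_supply = T_db - ε(T_db - T_wb). The pad effectiveness ε = 0.85 is an assumed typical value, not a figure from the installations described here, and the two sample climates are illustrative.

```python
import math


def wet_bulb_c(t_c: float, rh_pct: float) -> float:
    """Stull (2011) wet bulb approximation from dry bulb (C) and RH (%)."""
    return (t_c * math.atan(0.151977 * math.sqrt(rh_pct + 8.313659))
            + math.atan(t_c + rh_pct)
            - math.atan(rh_pct - 1.676331)
            + 0.00391838 * rh_pct ** 1.5 * math.atan(0.023101 * rh_pct)
            - 4.686035)


def direct_evap_supply_c(t_c: float, rh_pct: float, effectiveness: float = 0.85) -> float:
    """Supply air temperature from a direct evaporative cooler.

    The cooler closes a fraction `effectiveness` of the gap between the
    dry bulb and wet bulb temperatures; 0.85 is an assumed pad effectiveness.
    """
    t_wb = wet_bulb_c(t_c, rh_pct)
    return t_c - effectiveness * (t_c - t_wb)


if __name__ == "__main__":
    # Illustrative conditions only: hot and dry versus hot and humid.
    for label, t, rh in (("hot, dry", 40.0, 10.0), ("hot, humid", 32.0, 80.0)):
        print(f"{label:10s} {t:.0f} C / {rh:.0f}% RH -> wet bulb {wet_bulb_c(t, rh):.1f} C, "
              f"supply {direct_evap_supply_c(t, rh):.1f} C")
```

The dry case gets a much larger temperature drop than the humid one, which is why the local wet bulb temperature, not the dry bulb temperature alone, decides where evaporative cooling works well.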

The installation shown has secondary filtration but this is rare for us. The evaporative cooling pads filter out all that is required. Our oldest installation is 3 years old now with this very low level of filtration. I currently do not put this equipment where there are a lot of vehicle exhaust fumes.

We just commissioned a system in Manchester, UK, for a 500 kW data centre and immediately got a PUE of ~1.18, all with conventional equipment.
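As a rough illustration of what that PUE means in energy terms, the sketch below converts a PUE into non-IT overhead power and annual overhead energy. It assumes the 500 kW figure is the IT load and that the load is constant all year, both simplifications; the PUE of 1.5 is a hypothetical comparison point, not a figure from this thread.

```python
HOURS_PER_YEAR = 8760


def overhead_kw(it_kw: float, pue: float) -> float:
    """Non-IT power (cooling, power distribution losses, lighting) implied by a PUE."""
    return it_kw * (pue - 1.0)


def annual_overhead_mwh(it_kw: float, pue: float) -> float:
    """Yearly overhead energy, assuming a constant load all year (a simplification)."""
    return overhead_kw(it_kw, pue) * HOURS_PER_YEAR / 1000.0


if __name__ == "__main__":
    for p in (1.18, 1.5):
        print(f"PUE {p}: {overhead_kw(500, p):.0f} kW overhead, "
              f"{annual_overhead_mwh(500, p):.0f} MWh/year")
```

Under these assumptions, PUE 1.18 on 500 kW of IT load works out to about 90 kW of overhead, or roughly 788 MWh per year.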

Larry asked, "When you refer to locales with lower humidity, is there a threshold you are referring to?"

I didn’t have any specific threshold in mind. My observation was more general: Singapore is hard and Phoenix is easy.

–jrh

jrh@mvdirona.com

When you refer to locales with lower humidity, is there a threshold you are referring to?

Exactly as Rick says, Gordon: just use filtration. There is considerable debate in the industry about how aggressive the filtration needs to be. Some argue that they need to keep out everything but the smallest micron-scale particles and change their filters more frequently. Others argue that you only need to keep birds and small animals out of the facility. Either way, changing filters is remarkably cheap.

Rick, if permissible, I would love to visit your facility since you are in the Seattle area. Let me know if that can be done: jrh@mvdirona.com.

–jrh

jrh@mvdirona.com

We’re using evaporative cooling right here in Seattle to cool our data center when conditions require it. We use outside air approximately 90% of the year and reject most of our hot air outside, recovering some of it to temper the incoming air. Similar to the system described above.

@Gordon – Filters are sufficient to remove contaminants from the incoming air stream. However, if conditions require that we switch to the cooling tower (for example, when the generators are running), then the outside air inlet shuts down.

How do you address contaminants such as dust and hydrocarbons? That is, how do you clean the air before re-introducing it into the data center?