
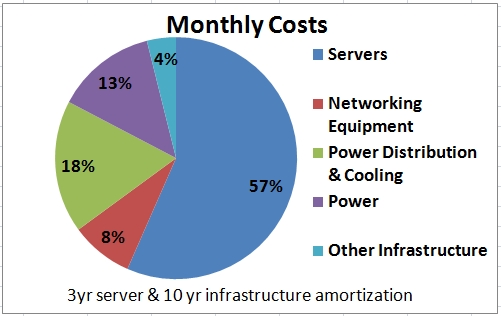

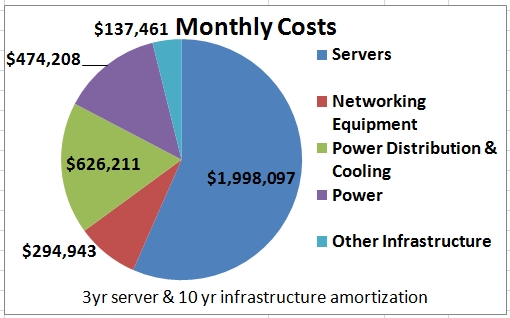

A couple of years ago, I did a detailed look at where the costs are in a modern, high-scale data center. The primary motivation behind bringing all the costs together was to understand where the problems are and find those easiest to address. Predictably, when I first brought these numbers together, a few data points just leapt off the page: 1) at scale, servers dominate overall costs, and 2) mechanical system cost and power consumption seem unreasonably high. Both of these areas have proven to be important technology areas to focus upon, and there has been considerable industry-wide innovation, particularly in cooling efficiency, over the last couple of years.

I posted the original model in Cost of Power in Large-Scale Data Centers. One of the reasons I posted it was to debunk the often repeated phrase “power is the dominant cost in a large-scale data center”. Servers dominate, with mechanical systems and power distribution close behind. It turns out that power is incredibly important, but it’s not the utility kWh charge that makes power important. It’s the cost of the power distribution equipment required to consume power and the cost of the mechanical systems that take the heat away once the power is consumed. I referred to this as fully burdened power.

Measured this way, power is the second most important cost. Power efficiency is highly leveraged when looking at overall data center costs; it plays an important role in environmental stewardship, and it is one of the areas where substantial gains continue to look quite attainable. As a consequence, this is where I spend a considerable amount of my time (perhaps the majority), but we have to remember that servers still dominate the overall capital cost.

This last point is a frequent source of confusion. When server and other IT equipment capital costs are directly compared with data center capital costs, the data center portion actually is larger. I’ve frequently heard “how can the facility cost more than the servers in the facility? It just doesn’t make sense.” I don’t know whether or not it makes sense but, once costs are properly amortized, it actually is not true at this point. I could imagine infrastructure costs one day eclipsing those of servers as server costs continue to decrease, but we’re not there yet. The key point to keep in mind is that the amortization periods are completely different. Data center amortization periods run from 10 to 15 years while server amortizations are typically in the three year range. Servers are purchased 3 to 5 times during the life of a data center so, when amortized properly, they continue to dominate the cost equation.

In the model below, I normalize all costs to a monthly bill by taking consumables like power and billing them monthly by consumption, and by taking capital expenses like servers, networking, or data center infrastructure and amortizing them over their useful lifetimes using a 5% cost of money, again billing monthly. This approach allows us to compare non-comparable costs such as data center infrastructure with servers and networking gear, each with different lifetimes. The model includes all costs “below the operating system” but doesn’t include software licensing costs, mostly because open source is dominant in high-scale centers and partly because licensing costs can vary so widely. Administrative costs are not included for the same reason: they vary greatly. At scale, hardware administration, security, and other infrastructure-related people costs disappear into the single digits, with the very best services down in the 3% range. On projects with which I’ve been involved, they are insignificantly small, so they don’t influence my thinking much. I’ve attached the spreadsheet in source form below so you can add in factors such as these if they are more relevant in your environment.
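The monthly normalization described above behaves like a spreadsheet PMT calculation. The sketch below is a minimal Python version, assuming level monthly payments at the 5% annual cost of money. The facility and server capital figures in the usage lines are hypothetical, chosen only to show why a cheaper but shorter-lived asset can still dominate the monthly bill.

```python
def monthly_payment(principal, annual_rate, years):
    """Level monthly payment that repays `principal` over `years` at `annual_rate`.

    Equivalent to a spreadsheet PMT() call with monthly compounding.
    """
    r = annual_rate / 12          # monthly cost of money
    n = int(years * 12)           # number of monthly payments
    if r == 0:
        return principal / n      # no cost of money: straight-line
    return principal * r / (1 - (1 + r) ** -n)


if __name__ == "__main__":
    # Hypothetical capital figures, for illustration only.
    facility = monthly_payment(100e6, 0.05, 10)  # 10-year facility life
    servers = monthly_payment(80e6, 0.05, 3)     # 3-year server life
    print(f"facility: ${facility:,.0f}/month, servers: ${servers:,.0f}/month")
```

With these made-up inputs the servers cost less up front but more than twice as much per month (roughly $2.4M versus $1.06M), which is the amortization effect behind servers dominating the cost equation.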

Late last year I updated the model for two reasons: 1) there has been considerable infrastructure innovation over the last couple of years and costs have changed dramatically during that period, and 2) because of the importance of networking gear to the cost model, I now factor networking out of overall IT costs. We now have IT costs with servers and storage modeled separately from networking. This helps us understand the impact of networking on overall capital cost and on IT power.

When I redo these data, I keep the facility server count in the 45,000 to 50,000 server range. This makes it a reasonably sized facility: big enough to enjoy the benefits of scale but nowhere close to the biggest data centers. Two years ago, 50,000 servers required a 15MW facility (25MW total load). Today, due to increased infrastructure efficiency and reduced individual server power draw, we can support 46k servers in an 8MW facility (12MW total load). The current rate of innovation in our industry is substantially higher than it has been at any time in the past, with much of this innovation driven by mega service operators.
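The per-server power budget implied by those facility figures is simple division. A minimal sketch, assuming the 15MW and 8MW figures are the critical (IT) load and that networking gear shares that budget with the servers:

```python
def watts_per_server(critical_load_mw, servers):
    """Critical (IT) power available per server, in watts.

    Assumes the whole critical load is divided evenly across servers, so any
    networking gear is folded into the per-server figure.
    """
    return critical_load_mw * 1_000_000 / servers


two_years_ago = watts_per_server(15, 50_000)  # 300 W per server
today = watts_per_server(8, 46_000)           # about 174 W per server
print(f"{two_years_ago:.0f} W -> {today:.0f} W per server")
```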

Keep in mind, I’m only modeling techniques that are well understood and reasonably broadly accepted as good quality data center design practices. Most of the big operators will be operating at efficiency levels far beyond those used here. For example, in this model we’re using a Power Usage Effectiveness (PUE) of 1.45, but Google reports a PUE across its fleet of under 1.2: Data Center Efficiency Measurements. Again, the spreadsheet source is attached below, so feel free to change the PUE used by the model as appropriate.
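PUE is the ratio of total facility power to IT (critical) power, so total load is the critical load multiplied by PUE. A minimal sketch using the 8MW critical load and the 1.45 PUE from this model, alongside a 1.2 value for comparison with the fleet figure cited above:

```python
def total_load_mw(critical_load_mw, pue):
    """Total facility power draw given the IT (critical) load and the PUE."""
    return critical_load_mw * pue


def overhead_mw(critical_load_mw, pue):
    """Power consumed by cooling, distribution losses, lighting, etc."""
    return critical_load_mw * (pue - 1)


for pue in (1.45, 1.2):
    print(f"PUE {pue}: total {total_load_mw(8, pue):.1f} MW, "
          f"overhead {overhead_mw(8, pue):.1f} MW")
```

At PUE 1.45 the 8MW critical load comes to about 11.6MW, consistent with the roughly 12MW total load quoted for this model.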

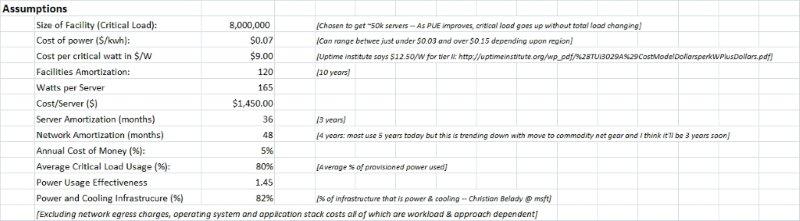

These are the assumptions used by this year’s model:

Using these assumptions we get the following cost structure:

For those of you interested in playing with different assumptions, the spreadsheet source is here: http://mvdirona.com/jrh/TalksAndPapers/PerspectivesDataCenterCostAndPower.xls.

If you choose to use this spreadsheet directly or the data above, please reference the source and include the URL to this posting.

–jrh

b: http://blog.mvdirona.com / http://perspectives.mvdirona.com

Hello James,

Even a decade later, many insights from this article are still useful.

What do you think the impact of Digital Twin will be on Data Center TCO analysis?

Will Digital Twin technology have a disruptive impact on traditional data center cost analysis? It connects the physical and the economic, which makes for a more complex and dynamic model.

Digital twins are an excellent way of managing the vast numbers of devices coming under digital management. These workloads are definitely important but they aren’t nearly as resource intensive as other rapidly growing workloads such as database and machine learning. I don’t expect that digital twins will change the current rate of data center resource consumption in a material way.

Hi James –

This is really helpful! I am curious, could you install a solar farm nearby to power the data center with batteries and greatly reduce your cost of power?

Thanks!

With large data centers running in the 30 to 50MW range and some considerably larger, it would require a truly massive battery bank if conventional chemical batteries are used. The largest Li-Ion battery deployment in the world that I know of is the 450 MWh battery facility in Australia. Even this plant would only give about 10 hours of reserve for a big data center. So, yes, it could be done, but it would be right up near the top of the largest batteries in the world. There are larger grid-scale energy storage systems built on non-battery technology. Without tax advantages or other incentives, it would also be considerably more expensive than undifferentiated power from the grid.

There are many grid-scale energy storage solutions being tested worldwide that show potential to be much less expensive than the Li-Ion plant referenced above. Energy storage prices are falling fast, so what you suggest will become more practical over time. And, as the world wakes up to the problem we face, I expect tax advantages for renewables may increase as well.
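The reserve estimate in this reply is stored energy divided by load. A minimal sketch, assuming the facility draws a constant load and ignoring inverter losses and depth-of-discharge limits; the 45MW case is an assumed mid-range load, not a figure from the reply:

```python
def reserve_hours(storage_mwh, load_mw):
    """Hours a fully charged battery bank could carry a constant load.

    Ignores conversion losses and depth-of-discharge limits.
    """
    return storage_mwh / load_mw


for load in (30, 45, 50):
    print(f"{load} MW facility: {reserve_hours(450, load):.1f} hours")
```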

The upsurge of data generation and the increasing need for better data storage facilities to efficiently store huge volumes of data are also fueling the demand for data center power.

James

First of all, great analysis, really impressive

Second, you suggest that servers account for 57% of the Capex.

What is the breakdown between the server components: CPU, disks, memory, etc.?

We’re doing custom gear with all major components directly sourced from suppliers so I don’t have a good window into the OEM server market BOMs at this point.

Thanks James, great analysis.

What would you estimate for a breakdown of DC capex? Does this look right?

Land, core and shell: 20%

Mechanical infrastructure (cooling, etc.): 20%

Power infrastructure: 20%

Servers, data storage, network equipment: 40%

Thanks!

It’s been too long since I was working with these data and the scale we are currently operating at gives some economies of scale in some dimensions that don’t easily apply elsewhere.

Hi James, very interesting study. Do you have any thoughts on how the cost will vary for small or medium scale distributed datacenters? Considering that telecom operators are going to establish edge clouds inside their core networks, it would be interesting to see the significant constituents of opex and capex cost.

There is no question that small facilities are more expensive, but it’s far from linear. Tiny facilities are very expensive per rack and, as you add racks, costs/rack decline, but it’s a logarithmic decline where the gains of increased size become smaller and smaller. At the very high end of the spectrum, growing a facility further is of diminishing value: the gains are slight, but the size of the fault zone just keeps growing linearly (and some fairly rare events can take out an entire facility).

Telecom “edge” deployment costs vary with the definition of the edge. To me, the “edge” is a cell tower, and cell tower floor space and power are in very tight supply. Most in the industry are really not talking about the absolute edge of the cellular infrastructure network when they talk about the edge and how 5G can support network function virtualization. They are talking about regional data centers rather than cell towers: base station controllers, mobile switching centers, and branch office switching centers. These are all further from the edge, but space and power are considerably more available in these facilities than in cell towers. Even with far more scale and far more economic access to power and space, if NFV were to become super important, these facilities have nowhere close to the needed scale. The law of supply and demand would escalate costs very quickly.

Adding complexity to pricing, you can only be close to the subscribers of Telco X in a Telco X facility. For a given subscriber, the telco has a monopoly, which again will drive up costs dramatically. This space has great potential to escalate in costs rapidly. The solution is to put large facilities near these so they can grow without shortages of power and floor space. This is easy to do and they could be quite inexpensive to scale. Let’s call these extension offices.

Extension offices, as defined above, are a nice solution to scaling the edge of a telco network. But, without network neutrality, Telco X may choose to make its extension offices only a few usec away but make all non-Telco X facilities far slower. Or they could decide “for the good of the network” to only connect Telco X branch extension offices directly, and all non-Telco X extension offices might only be allowed to connect through the central network. Even network neutrality won’t fully solve this potential issue.

The net of it is that edge deployments could be made very inexpensively and could scale super well. But, for non-technical reasons, supply could be very constrained and from a single provider and prices could be a large multiple of actual costs.

I agree the pricing could be very complicated. Looking only at the technical and economic challenges due to 5G introduction, operators have only 2 choices for the future: either build distributed edge clouds in their core network or upgrade their backhaul capacity to cater to the demand of futuristic applications. I guess the cost will also impact the network architecture; e.g., various reports suggest that telco operators are looking into CRAN (Centralized) and DRAN (Decentralized Radio Access Network) solutions. Also, microservices-based virtual 5G functions will motivate operators to choose COTS hardware.

Considering the DRAN option, if operators already have infrastructure for compute, power and cooling at the cell tower or aggregation layer, it won’t be expensive to add compute for 3rd party applications unless it hits the power, cooling, and fiber limits. Also, having a backup datacenter at a central location (or extension offices, as you called them) could help in scaling to meet the capacity requirement.

I would really appreciate any reference you have for cost distribution in small scale datacenters. One cost I see right away that will be significant is operations cost (maintenance, security, etc.). Not sure how the server power, PUE overhead, and networking cost will vary.

Cell towers lack the power and space for a successful compute service, so nothing that achieves scale will be housed there. Once you are out of the cell towers, space and power problems can be solved and, if the services are successful, they will achieve scale.

I haven’t seen anything specific on the cost disadvantage of small facilities but I suspect these are very big opportunities and the end capacity levels will need to be very large. Essentially, they won’t be “small” facilities.

Hi James, it’s been a long time since IBM/Lotus and MS. I’m doing some research on comparing data center costs to cloud costs and came across this blog entry, which is terrific, and my sense is it holds up pretty well over the years. Do you have a sense of how costs have shifted since you wrote this?

Hey Barry. When I was maintaining the cost model, it was aimed at modelling costs for a high volume operator running on low cost, commodity servers using state of the art design practices but not innovating. Essentially, it models what was possible using the best ideas available at the time but not innovating, doing anything radical, or pushing the state of the art. The server chosen for this model is a very low cost commodity server that would support a highly parallel workload like internet search well.

There has been a lot of innovation over the last 8 years so the model is getting a bit out of date and the workload targeted at the time was a highly parallel workload that ran well on fairly weak, inexpensive, commodity servers. Most workloads run on more expensive servers. The cost and efficiency of power distribution hasn’t changed greatly since that time. Mechanical system costs have come down quite a bit and overall data center efficiency has improved substantially since that time. Networking costs have fallen dramatically but the amount of network capacity being deployed has gone up considerably so the relative cost of networking probably hasn’t changed that much.

Thanks James. My sense is that for a general purpose enterprise-class workload, $5k/server would be a reasonable baseline (give or take). I’m curious, though, about your thoughts on the “sticker shock” that some companies claim they are experiencing in the cloud. If you do the math, a data center buildout of a three-tier application (web servers, middle tier, load balancers, SAN) comes out to be much more expensive than a more or less identical, non-optimized configuration in IaaS (minus the SAN), with IaaS at about half the cost in my model, and with RIs and some smart optimization you can get the cloud costs really quite small.

By the way, I’ve been playing with your spreadsheet a bit, trying to come up with a cost/server from a different angle, using a $1200/sqft baseline for the data center (found that somewhere), assuming 25 sqft/rack, etc. The numbers come out similar to yours.
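The facility cost per rack and per server can be sketched from the commenter’s $1,200/sqft and 25 sqft/rack figures. A minimal sketch; the servers-per-rack value is a hypothetical assumption, not from the comment:

```python
def facility_cost_per_rack(cost_per_sqft, sqft_per_rack):
    """Build cost attributed to one rack position, in dollars."""
    return cost_per_sqft * sqft_per_rack


def facility_cost_per_server(cost_per_sqft, sqft_per_rack, servers_per_rack):
    """Build cost attributed to one server slot, in dollars."""
    return facility_cost_per_rack(cost_per_sqft, sqft_per_rack) / servers_per_rack


SERVERS_PER_RACK = 40  # hypothetical assumption
print(facility_cost_per_rack(1200, 25))                      # 30000
print(facility_cost_per_server(1200, 25, SERVERS_PER_RACK))  # 750.0
```

To compare with the model’s monthly figures, the per-server result would still need to be amortized over the facility life.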

And autofill picked my son’s name for previous comment… sigh …

Normally, transitions in server-side computing take a decade. The cloud transition is happening so quickly because the combination of better economics and the ability to move far faster and try new ideas more quickly makes the cloud a pretty obvious win.

10-13-2017

Acorns, Bond Linc, Lenda, serverless computing, and Elastic Compute Cloud are showing promising innovations!

Could you clarify what goes into server expenses as you define it? Does that reflect the capital expense of purchasing new servers? Or does that reflect the depreciation on the servers?

The article is nearly 8 years old, so it’s getting a bit dated, but when it was written it modeled a very high scale internet search provider using a low power and fairly inexpensive server in mid-sized data centers using state of the art, proven technology. The last point is important in that the big internet search providers do not use “state of the art, proven technology”; they are beyond it. What is modeled is what you would get if you built a facility in 2010 using industry leading practices, had access to high volume buying discounts, and had a highly parallel workload that ran well on inexpensive, fairly low powered servers.

The costs modeled include both the cost of operations and the cost of acquisition, and the model uses a 3 year life for servers, a 5 year life for networking gear, and a 10 year life for data center power and cooling infrastructure.

9-18-2017

Hi James

Since technology seems to be moving toward the size of a pin head, will the virtual world become saturated? If so, what next? Giving birth to cyborgs while worshiping pagan gods?

Well I know it’s a deep subject!

You’re right, technology continues to improve, but our appetite for compute continues to increase faster. There have been predictions in the past that the world would only need some finite amount of compute power but, as fast as these predictions get made, they get broken. Consumers’ appetite for compute in various packages continues to go up. Cell phones alone have really been getting more resources fast, and a pretty average phone these days is a 4-core system and many are 8-core 64-bit systems.

Many applications of compute have nothing directly to do with consumers and scale with declining costs. Data mining and analytic systems have always scaled with cost. If the cost of compute falls, as it continues to do, then more tasks make economic sense. Declining IT costs drive demand up. Machine learning systems, as an example, are incredibly resource intensive. As techniques improve and the cost of compute goes down, more tasks can be economically handled using machine learning techniques, training sets continue to get larger, and the number of different dimensions being tracked goes up.

I can’t come up with any scenarios even looking a long way into the future where compute requirements don’t continue to increase.

9-17-17

Custom portable solid state TB vs disc-as-needed=lower energy CPU-less constant over heat-less utility dependence

P.S. Your articles are absolute genius. Great enlightenment!

Just how secure is the cloud really?

Many of the advantages of the cloud are driven by scale. When you have millions of servers in 10s of data centers all over the world, you can justify and pay for a very high quality security team. Most companies do their primary task with their best people, and the IT team is a separate cost center. In a cloud service, the IT problem is the entire problem, and customer trust is very dependent upon the security of the solution. So cloud services have to invest deeply, and it’s a rare company indeed, if there are any at all, that spends more on security than the major cloud operators.

Your question used to be common but, over the last 5+ years or so, security at major cloud operators has largely been acknowledged to be amongst the best in the world. I’m pretty confident and, for the most part, most customers and prospective customers are there as well. If you are talking to a cloud operator, ask them tough security questions and I suspect you’ll be impressed by the answers. I know at AWS it’s our #1 focus and nothing gets prioritized ahead of security.

9-13-2017

Thanks for the impressive feed back.

Hi James

I just ran across this announcement of HDR building a 1 Gigawatt facility in Norway. If you take a 400W/node ballpark number, it comes down to 2.5 million nodes. Pretty amazing.

Best

Matthias
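The node estimate in this comment is facility power divided by per-node power. A minimal sketch; the optional `pue` parameter is an added assumption, since the comment’s ballpark treats the full gigawatt as IT load:

```python
def node_count(facility_watts, watts_per_node, pue=1.0):
    """Number of nodes a facility's power budget supports.

    With pue=1.0 every watt goes to nodes (the ballpark used in the comment);
    a higher PUE reserves part of the budget for cooling and distribution.
    """
    return int(facility_watts / (watts_per_node * pue))


print(node_count(1_000_000_000, 400))           # 2500000
print(node_count(1_000_000_000, 400, pue=1.2))  # fewer nodes once overhead is counted
```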

That’s a massive single failure zone, nearly an order of magnitude bigger than the previous big ones. It’ll be interesting to see the actual phase 1 build-out capacity.

Hi James,

Thanks for the article!!!

Do you see the use of HyperConverged systems bringing down datacenter capex & opex?

Not really. It’s hard to save capex on high margin servers and storage. I suppose if you were previously spending way too much on storage, a converged system might help. On opex, converged systems can increase utilization and utilization is a massively powerful cost lever. It’s hard to beat cloud computing economics these days.

Hi James. Thanks for sharing your thoughts. This is one of my favorite blogs.

I am trying to reconcile the per server power consumption from this post. Would you please clarify?

1) You used 165 W per server. What server utilization does that assume?

2) Does this figure (i.e. 165 W at x% utilization) hold true today at major cloud operators?

Thank you so much.

This goes back quite a few years, so the data is getting a bit dated. At the time I did this, I was modelling an internet search and map reduce fleet with light CPU and not much disk. At the time, the average server was more powerful and more expensive than the one modeled. The build costs were what you would get using current best practices. The state of the art has evolved a lot since that time, and I’m out of touch with current market pricing since, in the work I’m involved with, it’s all special, very high volume pricing.

We are consuming far more power per server today.

Thank you, James. What would be a reasonable average power draw (i.e. x watt at average utilization) to assume for a server deployed by the major cloud operators?

It’s getting hard to give a definitive number. Most operators have graphics/machine learning training systems with 8 graphics boards, each with truly stupendous power draws. At the low end, all providers have efficient 2-way x86 servers. Even lower in power draw are some of the storage systems with a high disk-to-CPU ratio. A few suppliers are experimenting with single socket ARM servers. It’s getting super hard to characterize power draw, and few want to talk about it, but 12 to 15 kVA racks are pretty common, storage racks run less, and there are some designs that are much higher.

Thanks for the data, James. How have these costs changed in 2017? Would servers become less of a cost vis-a-vis networking?

It’s messy to answer definitively. Networking costs have fallen dramatically, with several of the big operators turning to custom networking equipment but, at the same time, the networking to processor ratio has skyrocketed. For those operators using commercial networking equipment, and even for those using custom networking gear more focused on customer experience, networking costs as a ratio to servers have risen somewhat over this period. For those pushing hard on what is possible on networking costs, costs will have fallen.

For the customers you are looking at buying commercial networking gear and servers, I suspect networking cost ratios have stayed roughly the same.

The billing model I like is, predictably based upon where I work, the Amazon pay-for-what-you-use model. When billing for compute usage by the hour, we are very motivated to reduce energy consumption since it directly reduces our costs. The Amazon EC2 charge model is at: http://aws.amazon.com/ec2/

James Hamilton

jrh@mvdirona.com

9-17-2017

Custom portable TB vs mac.disc-as needed-lower energy CPU-less constant over heat- less utility dependence

I’m not following your comment Greg.

Hello James, very interesting article. I have a few questions regarding an appropriate pricing model and some technical details that I would like to ask.

We can observe that many companies that run data centers generate around 20% of their profits from re-invoicing the peak electricity consumption to their clients. Therefore, datacenter companies aren’t interested in creating green datacenters or optimizing all factors, such as energy-efficient servers, PDUs, cooling systems, and so on, because this would reduce electricity consumption and, as a result, reduce the revenue of a datacenter company.

Can you explain what kind of pricing model should be applied for green datacenters?

Should a datacenter company charge a higher % on the electricity consumption re-invoice?

Should datacenters sell not the entire rack to clients but charge per node or per user and space, as this will make it easier to calculate a cost of investment and maintenance?

What is your experience with Intel Intelligent Power Node Manager?

http://software.intel.com/sites/datacentermanager/faqs.php

Dell and HP have cloud computing solutions in their product portfolios. What is your experience with the Dell C6100 and the HP Z6000 with 2x170h 6G servers?

Thank you in advance for your help.

RP

Thanks for your feedback James.

Our building is located in Toronto, Canada.

This doesn’t include admin staff costs. The challenge here is that highly heterogeneous enterprise datacenters, running many different applications each deployed at small scale, can have very high admin costs. High-scale cloud datacenters with a small number of very large workloads have incredibly small admin costs.

Generally, at scale, admin costs round to zero. Even well run medium-sized deployments get under 10% of overall costs and I’ve seen them as low as 3%.

The data here is everything below the O/S so doesn’t include people and doesn’t include software licensing.

What would I do with an incredibly well-connected 3MW building downtown? The key question is which downtown but, generally, that’s big enough to be broadly interesting: roughly 300 racks at 10kW a rack. I would likely lease it out. If you have good networking and power, it has value as a datacenter. If it’s in downtown NY, it’s incredibly valuable. If it’s in the San Francisco/Silicon Valley area, it’s less valuable but it’ll still move very quickly.

As always with real estate, location matters. Having good networking and power makes it an ideal datacenter candidate.

–jrh
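The 300-rack figure is utility power divided by per-rack draw. A minimal sketch; the `pue` parameter is an added assumption (1.45 is the PUE used in the model above), covering the case where the 3MW feed must also power cooling and distribution losses:

```python
def rack_count(utility_kw, kw_per_rack, pue=1.0):
    """Racks a site's power supports at a given per-rack draw."""
    return int(utility_kw / (kw_per_rack * pue))


print(rack_count(3_000, 10))            # 300, the estimate in the reply
print(rack_count(3_000, 10, pue=1.45))  # if the 3MW must also cover cooling overhead
```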

Hi James,

Excellent post, thanks. I am just curious (apologies if you have answered this before, I am new to your blog): what would be a healthy rule of thumb for network admins’ salaries and/or "people" compensation, etc., as a percentage of the total costs of running a data center (are you suggesting 2% as mentioned to Wayne above?). Also, what about bandwidth costs/security/hardware replacement (or is that bundled into your assumptions above?)

Pardon me if this is off topic (I do mean that), I am also curious to know what you personally would do with a vacant building in a downtown core location that has ~3MW of utility power and an abundance of diverse bandwidth…?

It varies so greatly depending upon whether it’s used as a colo, platform provider, private data center, etc. It’s just really hard to offer general rules on revenue. The reason you haven’t found a revenue model thus far is that it’s really very application and use specific.

–jrh

jrh@mvdirona.com

Hi James,

First off, excellent article, I found it very insightful. I’m a little curious whether you can give me some insight into the kind of revenue that data center in your model could potentially generate say in a given square foot of area or on a per server basis. I know a lot of factors come into play when talking about revenue but I haven’t come across a generalized model which I think can be quite useful. Any insight will be greatly appreciated!

Yeah, I think you’re likely correct on that guess, Chad. Although some people who say "power is the dominant cost" are still optimizing for space consumption. Go figure :-)

–jrh

James,

When they say that "power is the dominant cost", could it be that folks are somewhat confused and are really pointing at the fact that power is now the bounding resource in the data center (as opposed to, say, space)?

Wayne, in your comment you mentioned that brick and mortar facilities are normally amortized over 27 to 30 years and this could lead to "IT and overall power consumption being a larger portion". That’s considerably longer than I’m used to but let’s take a look at it. As I suspect you know, the challenge is the current rate of change. Trying to use 30 year old datacenter technology is tough and the current rate of change is now much faster than it was even 15 years ago. A 1980 DC isn’t worth much.

If we were to use 30 years, what we would get is along the lines of what you predicted, with IT costs going up and datacenter costs going down but, perhaps surprisingly, power is actually down (power distribution plus power falls from 31% to 25%). The 30 year infrastructure amortization is shown with the 10 year amortization in parentheses:

1) Servers & storage gear: 65% (57%)

2) Net gear: 9% (8%)

3) power distribution: 10% (18%)

4) power: 15% (13%)

5) Other: 2% (4%)

Using a 30 year amortization has a dramatic impact on overall costs but the change in percentages is less dramatic. The reason why I’ve moved from a 15 year amortization for infrastructure to a 10 year is the current rate of change. Things are improving so fast, I suspect the residual value of a 10+ year old facility won’t be all that high. Thanks

–jrh

James Hamilton, jrh@mvdirona.com
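Grouping the rounded percentages from this reply makes the shift easier to see: IT gear gains share while power distribution plus power loses it. A minimal sketch; the grouping into "IT" and "power distribution plus power" is an editorial assumption, and the 30-year column sums to 101% because of rounding:

```python
# Cost shares (%) from the reply: 30-year vs. 10-year infrastructure amortization.
SHARES_30YR = {"servers_storage": 65, "net_gear": 9,
               "power_distribution": 10, "power": 15, "other": 2}
SHARES_10YR = {"servers_storage": 57, "net_gear": 8,
               "power_distribution": 18, "power": 13, "other": 4}


def it_share(shares):
    """Servers, storage, and networking gear."""
    return shares["servers_storage"] + shares["net_gear"]


def burdened_power_share(shares):
    """Power distribution plus the power itself."""
    return shares["power_distribution"] + shares["power"]


for label, shares in (("30-year", SHARES_30YR), ("10-year", SHARES_10YR)):
    # Totals may not reach exactly 100 because each share is rounded.
    print(f"{label}: IT {it_share(shares)}%, power {burdened_power_share(shares)}%, "
          f"total {sum(shares.values())}%")
```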

One item that might change these calcs quite a lot (and make IT and overall power consumption a much larger portion) is that the bricks and mortar actually have to be amortized (as required by tax laws) over between 27 and 30 years (not 10 to 15).

Frank, I agree, the outside-the-box infrastructure, including WAN infrastructure, net gear, wiring closets, and client devices, consumes more power than the data centers on which they depend. And small scale infrastructure like wiring closets is often poorly designed and inefficient.

–jrh

James Hamilton, jrh@mvdirona.com

My apologies, James, for my earlier misrepresentation of your name. Since that was the second time I’ve done this now, I think it’s time I wrote a script ;)

Frank

——

Jonathan, I would equally like to see a fully-burdened ‘infrastructure’ cost breakdown as well, beyond that which takes into account only the fully-burdened power and other areas in your analysis. Here I’m referring to the amortized costs, on an application specific allocation basis, of the base building shell, i.e., the actual floor space built on concrete and steel. This can become especially meaningful over a long stretch of time in lease-rental situations where the dependency on power and air cooling, chillers, etc. demands that specially-designed space enclosures be used.

The subject of real estate is usually treated as a ‘facilities’ based problem, and as such it is not one that IT generally gets involved in justifying, but so too was power treated in this manner at one time. It becomes especially germane in smaller data centers and other small venues, such as LAN equipment closets, comms centers and the smaller server farms, where I devote a great deal of my time and focus on bringing new efficiencies.

The larger subject, that of real estate costs in general, among other things (including how "all"-copper-based LANs in work areas today are taken for granted and considered as being ossified in place), unfortunately exists directly in the middle of many operators’ and IT managers’ blind spots. Yet, when viewed as a function of total "actual" costs to the enterprise, real estate (and power) comprise a large share of ‘fully burdened infrastructure costs’ on a par with big-box data centers, although it’s far more difficult to get an accurate picture and characterize quantitatively all of the millions of smaller enclosures that exist today.

Great post, btw. Thanks.

frank@fttx.org

——

I hear you James and you are right those numbers are quite good. The short answer is: select servers carefully and buy in very large quantity.

Several server manufacturers design and build servers specifically for the mega-facility operators, often in response to a specific request for proposals (semi-custom builds). Dell Datacenter Solutions team, Rackable Systems (now SGI), HP, and ZT Systems all build special products for very large operators. Some of the largest operators cut out the middle man and do private designs and get them directly built by contract manufacturers (CM). Others take a middle ground and work directly with original design manufacturers (ODMs) to have custom designs built and delivered.

At scale, all the above work and will deliver products at these price and power points. The big operators tend not to discuss their equipment in much detail but this paper has a fair amount of detail: http://research.microsoft.com/pubs/131487/kansal_ServerEngineering.pdf

Try using higher numbers like $2,500/server and 250W/server in the spreadsheet. The server costs fraction grows under these assumptions to 61%. Generally, the message stays the same: server cost dominates and power drives the bulk of the remainder.

–jrh

jrh@mvdirona.com
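To compare the $1,450/165W and $2,500/250W server assumptions on the model’s monthly basis, the sketch below amortizes each server price over a 3-year life at the 5% cost of money and converts average power draw into monthly energy. It is a minimal sketch: it assumes a constant average draw and omits the electricity price and PUE, which would come from the spreadsheet’s own assumptions.

```python
HOURS_PER_MONTH = 24 * 365 / 12  # 730 hours


def monthly_payment(principal, annual_rate=0.05, years=3):
    """Level monthly payment amortizing `principal` (PMT-style)."""
    r = annual_rate / 12
    n = years * 12
    return principal * r / (1 - (1 + r) ** -n)


def monthly_kwh(watts):
    """Energy drawn per month by a server at a constant average power."""
    return watts * HOURS_PER_MONTH / 1000


for price, watts in ((1450, 165), (2500, 250)):
    print(f"${price} / {watts} W: ${monthly_payment(price):.2f}/month capital, "
          f"{monthly_kwh(watts):.1f} kWh/month")
```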

What spec server are you using that comes out to $1450/165 watts?